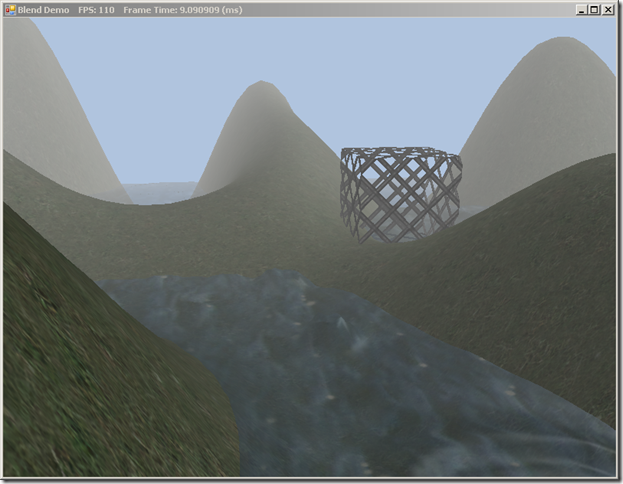

This time around, we are going to dig into Chapter 9 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0. We will be implementing the BlendDemo over the next couple of posts, building on our previous example, the Textured Hills Demo. We will add transparency to our water mesh, a fog effect, and a box with a wire texture, whose transparency is implemented using pixel shader clipping. We’ll need to update our shader effect, Basic.fx, to support these additional effects, along with its C# wrapper class. We’ll also look at additional DirectX blend and rasterizer states, and build up a static class to manage these for us, in the same fashion we used for our InputLayouts and Effects. The full code for this example can be found on GitHub at https://github.com/ericrrichards/dx11.git, under the BlendDemo project. Before we can get to the demo code, however, we need to cover some of the basics.

Blending

So far, we have been rendering only the pixel fragment closest to the camera, using the depth buffer. We have been implicitly using the default Direct3D blend state, which overwrites any existing pixel color with the newly calculated color. Direct3D allows us to modify this default behavior, however, to achieve different effects. Direct3D uses the following equation to blend source and destination pixels:

c = c_source*f_source (operation) c_dest * f_dest

where c_source is the new pixel color, c_dest is the current pixel color in the back buffer, and f_source and f_dest are the blend factors (vectors with one component per RGB color channel, multiplied component-wise with the colors) for the source and destination pixels.

The previous equation is used to blend the RGB color components of the pixels. The alpha component of the pixel is blended using the following equation:

a = a_source*fa_source (operation) a_dest*fa_dest

In the previous equations, (operation) is a binary operation that we specify from the BlendOperation enumeration, which tells the graphics hardware how to calculate the final pixel value. These operations are:

- BlendOperation.Add – c_source*f_source + c_dest * f_dest

- BlendOperation.Subtract – c_dest * f_dest - c_source*f_source

- BlendOperation.ReverseSubtract – c_source*f_source - c_dest * f_dest

- BlendOperation.Maximum – max(c_source, c_dest)

- BlendOperation.Minimum – min(c_source, c_dest)
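To make the list above concrete, here is a minimal CPU-side sketch of the per-channel math, following the formulas exactly as listed. The BlendOp enum and BlendMath class are hypothetical stand-ins written for this illustration; they are not SlimDX types, and the real work happens in the output-merger stage on the GPU.

```csharp
using System;

// Hypothetical stand-in for illustration only; SlimDX has its own BlendOperation enum.
public enum BlendOp { Add, Subtract, ReverseSubtract, Maximum, Minimum }

public static class BlendMath {
    // Blends a single channel. cSrc/cDst are the channel values,
    // fSrc/fDst are the matching blend factor components.
    public static float Blend(BlendOp op, float cSrc, float fSrc, float cDst, float fDst) {
        switch (op) {
            case BlendOp.Add:             return cSrc * fSrc + cDst * fDst;
            case BlendOp.Subtract:        return cDst * fDst - cSrc * fSrc;
            case BlendOp.ReverseSubtract: return cSrc * fSrc - cDst * fDst;
            case BlendOp.Maximum:         return Math.Max(cSrc, cDst); // factors are not used
            case BlendOp.Minimum:         return Math.Min(cSrc, cDst); // factors are not used
            default: throw new ArgumentOutOfRangeException("op");
        }
    }

    public static void Main() {
        // Source channel 1.0 weighted by 0.25, destination channel 0.5 weighted by 0.75:
        // 0.25 + 0.375 = 0.625
        Console.WriteLine(Blend(BlendOp.Add, 1.0f, 0.25f, 0.5f, 0.75f));
    }
}
```

The same function would be applied to each of the red, green, and blue channels, and separately to alpha with the alpha factors.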

Direct3D specifies some of the most commonly used blend factors in the BlendOption enumeration. We’ll describe these options using f for the RGB blend factor and fa for the alpha blend factor. These are:

- BlendOption.Zero – f = (0,0,0), fa = 0.

- BlendOption.One – f = (1,1,1), fa = 1.

- BlendOption.SourceColor – f = (r_s, g_s, b_s)

- BlendOption.InverseSourceColor – f = (1 – r_s, 1 – g_s, 1 – b_s )

- BlendOption.SourceAlpha – f = (a_s, a_s, a_s), fa = a_s

- BlendOption.InverseSourceAlpha – f = (1 – a_s, 1 – a_s, 1 – a_s), fa = 1 – a_s

- BlendOption.DestinationAlpha – f = (a_d, a_d, a_d), fa = a_d

- BlendOption.InverseDestinationAlpha – f = (1 – a_d, 1 – a_d, 1 – a_d), fa = 1 – a_d

- BlendOption.DestinationColor – f = (r_d, g_d, b_d)

- BlendOption.InverseDestinationColor – f = (1 – r_d, 1 – g_d, 1 – b_d )

- BlendOption.SourceAlphaSaturate – f = (a_s’, a_s’, a_s’), fa = 1, where a_s’ = min(a_s, 1 – a_d)

You can also specify the blend factor arbitrarily, using the BlendOption.BlendFactor and BlendOption.InverseBlendFactor flags. Note that you can use any of these blend factors for the RGB blend stage, but you cannot use the Color blend factors (those above without an fa term) for alpha blending.
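As a worked example, a common choice for transparency is BlendOption.SourceAlpha for the source factor, BlendOption.InverseSourceAlpha for the destination factor, and BlendOperation.Add. Substituting these into the RGB blend equation gives:

$$
c = a_s \, c_{source} + (1 - a_s) \, c_{dest}
$$

With a_s = 0.25, for instance, the result is 25% of the new pixel color plus 75% of the color already in the back buffer, so the new pixel appears mostly see-through.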

BlendState Structure

SlimDX exposes a BlendStateDescription class, which wraps the D3D11_BLEND_DESC structure from raw Direct3D. To create the BlendStates for this example, we will fill out a BlendStateDescription and then pass it to the BlendState.FromDescription(Device, BlendStateDescription) function.

The BlendStateDescription class exposes the following properties that we need to define:

- AlphaToCoverageEnable – True to enable Alpha-To-Coverage blending, which is a technique that requires multisampling to be enabled. We have not been using multisampling thus far (Enable4xMsaa in our D3DApp class), so we will specify false here. We will make use of this later, when we get to billboarding using the geometry shader.

- IndependentBlendEnable – Direct3D can render to up to 8 separate render targets at the same time. If we set this field to true, we can use different blend states for each render target. For now, we are only going to use one blend state, so we set this to false, and Direct3D will only use the blend state for the first render target.

- RenderTargets[8] – This array of RenderTargetBlendDescription structures allows us to specify the different blend settings if we have multiple render targets and IndependentBlendEnable is true. We will need to fill out at least the first element of this array.

RenderTargetBlendDescription Structure

The RenderTargetBlendDescription is where we specify the blend operations and blend factors for the blending equations above. We can specify the parameters for the RGB and Alpha blend operations separately. We can also apply a mask that will specify which color channels of the backbuffer to write to, so that we could modify just the red channel, for instance. The fields of the RenderTargetBlendDescription structure are:

- BlendEnable – true to use blending, false to disable blending.

- SourceBlend – One of the BlendOption elements, to control the source pixel blend factor in the RGB blending equation.

- DestinationBlend – One of the BlendOption elements, to control the destination pixel blend factor in the RGB blending equation.

- BlendOperation – One of the BlendOperation elements, to select the operation in the RGB blending equation.

- SourceBlendAlpha - One of the BlendOption elements, to control the source alpha blend factor in the alpha blending equation.

- DestinationBlendAlpha - One of the BlendOption elements, to control the destination alpha blend factor in the alpha blending equation.

- BlendOperationAlpha – One of the BlendOperation elements, to select the operation in the alpha blending equation.

- RenderTargetWriteMask – a combination of the ColorWriteMaskFlags, which are {None, Red, Green, Blue, Alpha, All}, corresponding to the different color channels of the backbuffer.
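Putting the two structures together, a transparency blend state might be filled out roughly as follows. This is a sketch rather than the demo's final code: the BlendStateSketch class and CreateTransparent method are names invented for this illustration, the device parameter is assumed to be an already-initialized SlimDX Device, and the property names are taken from the descriptions above (with the SlimDX spelling IndependentBlendEnable assumed).

```csharp
using SlimDX.Direct3D11;

public static class BlendStateSketch {
    // 'device' is assumed to be a valid, already-created SlimDX.Direct3D11.Device.
    public static BlendState CreateTransparent(Device device) {
        var desc = new BlendStateDescription {
            AlphaToCoverageEnable = false,   // no multisampling in use yet
            IndependentBlendEnable = false   // only RenderTargets[0] is consulted
        };

        // RGB: c = a_s * c_source + (1 - a_s) * c_dest
        desc.RenderTargets[0].BlendEnable = true;
        desc.RenderTargets[0].SourceBlend = BlendOption.SourceAlpha;
        desc.RenderTargets[0].DestinationBlend = BlendOption.InverseSourceAlpha;
        desc.RenderTargets[0].BlendOperation = BlendOperation.Add;

        // Alpha: keep the source alpha unchanged (a = a_source * 1 + a_dest * 0)
        desc.RenderTargets[0].SourceBlendAlpha = BlendOption.One;
        desc.RenderTargets[0].DestinationBlendAlpha = BlendOption.Zero;
        desc.RenderTargets[0].BlendOperationAlpha = BlendOperation.Add;

        // Write to every channel of the back buffer
        desc.RenderTargets[0].RenderTargetWriteMask = ColorWriteMaskFlags.All;

        return BlendState.FromDescription(device, desc);
    }
}
```

The returned BlendState should be disposed when it is no longer needed, like other Direct3D resources.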

Using a BlendState

Once we have created a BlendState object, we need to set our ImmediateContext to use it. In C++ Direct3D, we can do this in one function call, but in SlimDX, we need to set three properties of our ImmediateContext.OutputMerger.

    var blendFactor = new Color4(0,0,0,0);
    ImmediateContext.OutputMerger.BlendState = RenderStates.TransparentBS;
    ImmediateContext.OutputMerger.BlendFactor = blendFactor;
    ImmediateContext.OutputMerger.BlendSampleMask = (int) ~0; // or -1

First, we need to set the BlendState property to our newly created BlendState instance. Next, we need to supply a BlendFactor. If we were using BlendOption.BlendFactor or BlendOption.InverseBlendFactor, this is where we would supply the custom blend factor. If we are using one of the predefined blend factors, we can just supply a null (all-black, 0 alpha) color. Lastly, we need to set the BlendSampleMask, which controls multisampling. If we wanted to ignore a particular multisample, we would zero the bit for the multisample we wished to disable. For whatever reason, SlimDX uses an int here, rather than a uint as in the C++ Direct3D library, so simply using 0xffffffff (to enable all samples) will not compile. Taking the complement of 0 works instead: ~0 is -1, which in two's complement has all 32 bits set, the same bit pattern as 0xffffffff.
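If you want to convince yourself about the sample mask bit pattern, a quick standalone check in plain C# (no SlimDX required) looks like this. The SampleMaskCheck class name is just for this illustration.

```csharp
using System;

public static class SampleMaskCheck {
    public static void Main() {
        int complement = ~0;                       // flip every bit of 0
        int fromHex = unchecked((int)0xffffffff);  // reinterpret the uint literal as an int

        Console.WriteLine(complement == -1);       // True
        Console.WriteLine(complement == fromHex);  // True
    }
}
```

Either ~0, -1, or the unchecked cast can be assigned to BlendSampleMask; all three are the same 32-bit value.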

Alpha Channels

If you have used an image editing program like Photoshop or Paint.NET, you are probably familiar with alpha channels. Typically, the alpha channel is used to control the transparency of a pixel in an image, with white representing fully opaque and black representing completely transparent. In our pixel shader, we will combine the object’s material alpha value with its texture alpha value to determine transparency. We can use this, for instance, to render a picket fence by simply drawing a quad and applying an alpha-channeled texture of the fence, which will render the gaps in the fence transparent and show the scene behind. In our demo, we will use this to draw the wire box at the center of the scene in the screenshot above.

Pixel Clipping

Alpha blending is more expensive to calculate on the GPU than simply rendering a non-blended pixel. If we know that a pixel is going to be completely transparent, we don’t want to bother calculating the blend equations. HLSL lets us do this by using the clip() intrinsic function, which discards the current pixel and exits the pixel shader early if the value passed is less than 0. In the picket fence example from the previous section, if we sample our texture and the alpha channel for the texel is within some buffer of 0 (to account for texture filtering), we can skip it and save the calculations.
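The test itself is simple enough to mimic on the CPU. The sketch below is a C# imitation of the rule, not shader code: ClipSketch, IsClipped, and ShouldDiscard are hypothetical names, and the 0.1 threshold is an assumed buffer value chosen for illustration.

```csharp
using System;

public static class ClipSketch {
    // Mirrors the rule HLSL's clip(x) applies to a scalar: discard when x < 0.
    public static bool IsClipped(float x) { return x < 0f; }

    // Assumed buffer above zero, to catch nearly transparent filtered texels.
    public const float AlphaThreshold = 0.1f;

    // Subtracting the threshold makes any alpha below it go negative.
    public static bool ShouldDiscard(float texelAlpha) {
        return IsClipped(texelAlpha - AlphaThreshold);
    }

    public static void Main() {
        Console.WriteLine(ShouldDiscard(0.02f)); // True: nearly transparent, skipped
        Console.WriteLine(ShouldDiscard(0.8f));  // False: mostly opaque, shaded as usual
    }
}
```

In the shader, the equivalent test would run right after sampling the texture, so that discarded pixels skip the lighting work as well.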

Fog

In the real world, we usually can’t see clearly all the way to whatever objects we see in the distance. Usually, there is some sort of haze that blurs distant objects. Modeling this in a physically realistic manner would be very difficult and computationally expensive, but we can apply a reasonable hack to emulate this phenomenon. This is typically called Distance Fog. If we supply a color for this fog effect, a threshold distance at which fog starts to show, and a range over which the fog grows to completely obscure objects, we can apply this effect fairly easily, by linearly interpolating between the normal lit color of the scene and the fog color, based on how far into the “fog zone” the object lies from the viewpoint. You can see this effect at work in the screenshot above, as the nearest slopes of the hill are drawn at full brightness, whereas the more distant hills are progressively grayed out.
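The interpolation can be sketched on the CPU in a few lines of C#. The names here (FogSketch, FogAmount, fogStart, fogRange) are assumptions for illustration: fogStart is the distance where fog begins, and fogRange is the additional distance over which it reaches full strength. The real version would live in the pixel shader.

```csharp
using System;

public static class FogSketch {
    // Clamp to [0, 1], like HLSL's saturate().
    static float Saturate(float x) { return Math.Max(0f, Math.Min(1f, x)); }

    // 0 = no fog, 1 = completely fogged.
    public static float FogAmount(float distToEye, float fogStart, float fogRange) {
        return Saturate((distToEye - fogStart) / fogRange);
    }

    // Linear interpolation of one color channel from the lit color to the fog color.
    public static float Lerp(float lit, float fog, float t) {
        return lit + t * (fog - lit);
    }

    public static void Main() {
        // Halfway through the fog zone: (100 - 20) / 160 = 0.5
        float t = FogAmount(100f, 20f, 160f);
        // Lit channel 1.0 blended toward fog channel 0.5 gives 0.75
        Console.WriteLine(Lerp(1.0f, 0.5f, t));
    }
}
```

Objects closer than fogStart keep their lit color, and anything beyond fogStart + fogRange takes on the fog color entirely.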

Next Time…

We’ll move on to the actual implementation of our BlendDemo. We’ll set up some new render states and build a static manager class to contain them. We’ll modify our shader effect to support blending, clipping, and fog. Then we’ll set up the necessary textures and materials to render some partially transparent water and a wire box, and add the fog effect to our scene.
