One of the main bottlenecks to the speed of a Direct3D application is the number of Draw calls that are issued to the GPU, along with the overhead of switching shader constants for each object that is drawn. Today, we are going to look at two methods of optimizing our drawing code. Hardware instancing allows us to minimize the overhead of drawing identical geometry in our scene, by batching the draw calls for our objects and utilizing per-instance data to avoid the overhead in uploading our per-object world matrices. Frustum culling enables us to determine which objects will be seen by our camera, and to skip the Draw calls for objects that will be clipped by the GPU during projection. Together, the two techniques reap a significant increase in frame rate.

The source code for this example was adapted from the InstancingAndCulling demo from Chapter 15 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0. Additionally, the frustum culling code for this example was adapted from Chapter 5 of Carl Granberg’s Programming an RTS Game with Direct3D (Luna’s implementation of frustum culling relied heavily on xnacollision.h, which isn’t really included in the base SlimDX). You can download the full source for this example from my GitHub repository at https://github.com/ericrrichards/dx11.git under the InstancingAndCulling project.

Hardware Instancing

While we have been able to re-use geometry that is the same in our scenes by utilizing a single vertex buffer and individual world matrices (See the Shapes Demo), drawing an instance of the geometry still requires that we issue a draw call for each object, along with submitting a constant buffer for each object to the GPU. Drawing a large number of identical objects in this way becomes dependant on the through-put of the CPU and the memory bus between the CPU and GPU. Since Direct3D 10, we are able to take advantage of a feature of newer graphics cards called hardware instancing. This enables us to use two vertex buffers, one containing the vertex data for the object we wish to draw, which remains the same for all instances, and a second buffer containing the per-instance data, which varies per instance of the geometry that we draw.

To illustrate this we will create a new version of our BasicEffect.fx shader, InstancedBasic.fx. First off, you will notice that our cbPerObject constant buffer no longer contains the gWorld and gWorldInverseTranspose matrices. Instead, we have added a world matrix to our VertexIn structure instead.

cbuffer cbPerObject

{

float4x4 gViewProj;

float4x4 gTexTransform;

Material gMaterial;

};

struct VertexIn

{

float3 PosL : POSITION;

float3 NormalL : NORMAL;

float2 Tex : TEXCOORD;

row_major float4x4 World : WORLD;

float4 Color : COLOR;

uint InstanceId : SV_InstanceID;

};

The row_major modifier on World is significant. Apparently, HLSL defaults to using column-major matrices, whereas DirectX uses row-major matrices. The Effects Framework, (which is wrapped up by the SlimDX Effect class we have been using) automatically transposes matrices from one form to the other, but for our vertex data, where we are going to be essentially blitting data to a vertex buffer without the Effects Framework, specifying the order saves us having to do the transpose ourselves.

You’ll also note that we have added a Color member, and an InstanceId member. The Color member will simply allow us to render each instance with a different color, so that we can tell them apart more easily. The InstanceId member is automatically generated by the GPU; starting from 0, this is incremented for each instance of the geometry that we draw.

With the new shader vertex structure, we need to define a new InputLayout to match, in our InputLayouts class. The InputElement array that we use to create this InputLayout is as follows (from Core.InputLayoutDescriptions):

public static readonly InputElement[] InstancedBasic32 = {

new InputElement("POSITION", 0, Format.R32G32B32_Float, 0, 0, InputClassification.PerVertexData, 0),

new InputElement("NORMAL", 0, Format.R32G32B32_Float, InputElement.AppendAligned, 0, InputClassification.PerVertexData, 0),

new InputElement("TEXCOORD", 0, Format.R32G32_Float, InputElement.AppendAligned, 0, InputClassification.PerVertexData, 0),

new InputElement("WORLD", 0, Format.R32G32B32A32_Float, 0, 1, InputClassification.PerInstanceData, 1 ),

new InputElement("WORLD", 1, Format.R32G32B32A32_Float, InputElement.AppendAligned, 1, InputClassification.PerInstanceData, 1 ),

new InputElement("WORLD", 2, Format.R32G32B32A32_Float, InputElement.AppendAligned, 1, InputClassification.PerInstanceData, 1 ),

new InputElement("WORLD", 3, Format.R32G32B32A32_Float, InputElement.AppendAligned, 1, InputClassification.PerInstanceData, 1 ),

new InputElement("COLOR", 0, Format.R32G32B32A32_Float, InputElement.AppendAligned, 1, InputClassification.PerInstanceData, 1 )

};

Before examining the new Input Elements we have defined for the InputLayout, let’s review the InputElement constructor (see the InputElement Constructor documentation). The parameters, in order, are:

- string name – This is the HLSL shader semantic of the element defined for the vertex shader input structure.

- int index – this enables us to determine which element with the previous semantic this InputElement refers to. In the case of our float4x4 World matrix, we can only set one row of the matrix per InputElement, so index 0 refers to the first row, index 1 to the second, and so forth.

- Format format – the data type of the element, from the Format enumeration.

- int offset – The offset in bytes from the start of the structure for this element. We can use InputElement.AppendAligned to automatically calculate this for us when using sequential elements.

- int slot – this indicates which vertex buffer bound to the InputAssembler the data should be drawn from. This value must be in the range [0, 15] – up to 16 vertex buffers are supported.

- InputClassification slotClass – One of either InputClassification.PerInstanceData or InputClassification.PerVertexData. PerVertexData indicates that each vertex will have a distinct value for this element, while PerInstanceData indicates that all vertices of an instance will share this element data.

- int stepRate – This value indicates the number of instances that will share the per-instance data. For per-vertex elements, we must specify 0 here. For per-instance data, we will typically use 1, that is, each instance will have a copy of the per-instance data.

Here, we have the same first three elements as with our Basic32 vertex structure (See the Crate Demo). After that, come the elements for the World and Color members of our VertexIn structure, which are per-instance. We are going to use two vertex buffers, so the normal geometry is assigned to slot 0, while the per-instance data is assigned to slot 1.

Creating the InputLayout in our InputLayouts.InitAll() function follows the same pattern as we have been using previously. Note that we need to be sure to use the effect signature of a technique from our new InstancedBasicFX effect wrapper class, rather than the BasicFX class. I won’t go into much detail on the InstancedBasicFX class, as it is very similar to our previous effect wrappers; just handles to the different shader techniques and variables.

try { var signature = Effects.InstancedBasicFX.Light1Tech.GetPassByIndex(0).Description.Signature; InstancedBasic32 = new InputLayout(device, signature, InputLayoutDescriptions.InstancedBasic32); } catch (Exception dex) { Console.WriteLine(dex.Message); InstancedBasic32 = null; }

Creating the Instance Buffer

As I have mentioned, we will be using two vertex buffers to contain: A.) a single copy of our base, per-vertex geometry, in this case, our old friend the skull mesh, and B) the per-instance world matrices and colors. For A, we can continue to use our Basic32 vertex structure, but for B, we need to define a new structure to contain this data.

struct InstancedData {

public Matrix World;

public Color4 Color;

}

We’ll create the vertex buffer for this data in our application’s Init() method, using a new helper function, BuildInstancedBuffer. For our demo, we are going to render a 5x5x5 cube of skull models. With each skull model containing over 60,000 triangles, we would be hard pressed to draw 125 instances separately at all, let alone with an acceptable frame rate. Creating the index buffer itself should be familiar by now, though you should note that we create the buffer as a dynamic buffer, with CPU write access, as we will be altering the buffer for our frustum culling code. We also cache a memory copy of the instance data in the _instancedData list, despite the memory duplication, as it is more efficient to operate on the in-memory copy and write the data to the GPU than to read the data back from the GPU vertex buffer when we want to modify the positions of the instances.

private void BuildInstancedBuffer() { const int n = 5; var width = 200.0f; var height = 200.0f; var depth = 200.0f; var x = -0.5f*width; var y = -0.5f*height; var z = -0.5f*depth; var dx = width/(n - 1); var dy = height/(n - 1); var dz = depth/(n - 1); _instancedData = new List<InstancedData>(); for (int k = 0; k< n; k++) { for (int i = 0; i < n; i++) { for (int j = 0; j < n; j++) { _instancedData.Add(new InstancedData() { World = Matrix.Translation(x+j*dx, y+i*dy, z+k*dz), Color = new Color4(MathF.Rand(0,1), MathF.Rand(0,1), MathF.Rand(0,1)) }); } } } var vbd = new BufferDescription( Marshal.SizeOf(typeof(InstancedData)) * n * n * n, ResourceUsage.Dynamic, BindFlags.VertexBuffer, CpuAccessFlags.Write, ResourceOptionFlags.None, 0 ); _instanceBuffer = new Buffer(Device, new DataStream(_instancedData.ToArray(), false, true), vbd); }

To draw the skull models using the instanced data, we modify our typical draw sequence slightly. We need to bind both vertex buffers to the ImmediateContext.InputAssembler using SetVertexBuffers(). We also need to use the ImmediateContext.DrawIndexedInstanced() method, instead of the ImmediateContext.DrawIndexed() method. The DrawIndexedInstanced() method takes the following parameters:

- int indexCountPerInstance – the number of indices in our index buffer. This will be the same as we have previously used.

- int instanceCount – The number of instances to draw.

- int startIndexCount – The index of the index buffer to start drawing from. This will be 0, unless we have combined multiple pieces of geometry into a single index buffer.

- int baseIndexVertexLocation – Again, if we concatenated multiple objects into a single vertex buffer, we would need to supply the start vertex index for the piece of geometry we are drawing.

- int startInstanceLocation – If we have concatenated multiple instance buffers, we can use this to set the start position in the instance buffer for the piece of geometry we are rendering.

public override void DrawScene() { base.DrawScene(); ImmediateContext.ClearRenderTargetView(RenderTargetView, Color.Silver); ImmediateContext.ClearDepthStencilView( DepthStencilView, DepthStencilClearFlags.Depth|DepthStencilClearFlags.Stencil, 1.0f, 0 ); ImmediateContext.InputAssembler.InputLayout = InputLayouts.InstancedBasic32; ImmediateContext.InputAssembler.PrimitiveTopology = PrimitiveTopology.TriangleList; var stride = new[] {Basic32.Stride, Marshal.SizeOf(typeof (InstancedData))}; var offset = new[] {0, 0}; var viewProj = _cam.ViewProj; Effects.InstancedBasicFX.SetDirLights(_dirLights); Effects.InstancedBasicFX.SetEyePosW(_cam.Position); var activeTech = Effects.InstancedBasicFX.Light3Tech; for (int p = 0; p < activeTech.Description.PassCount; p++) { ImmediateContext.InputAssembler.SetVertexBuffers( 0, new VertexBufferBinding(_skullVB, stride[0], offset[0]), new VertexBufferBinding(_instanceBuffer, stride[1], offset[1]) ); ImmediateContext.InputAssembler.SetIndexBuffer(_skullIB, Format.R32_UInt, 0); Effects.InstancedBasicFX.SetViewProj(viewProj); Effects.InstancedBasicFX.SetMaterial(_skullMat); activeTech.GetPassByIndex(p).Apply(ImmediateContext); ImmediateContext.DrawIndexedInstanced(_skullIndexCount, _visibleObjectCount, 0, 0, 0); } SwapChain.Present(0, PresentFlags.None); }

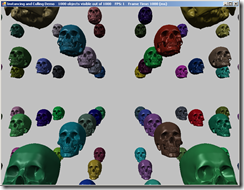

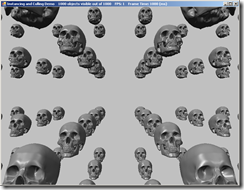

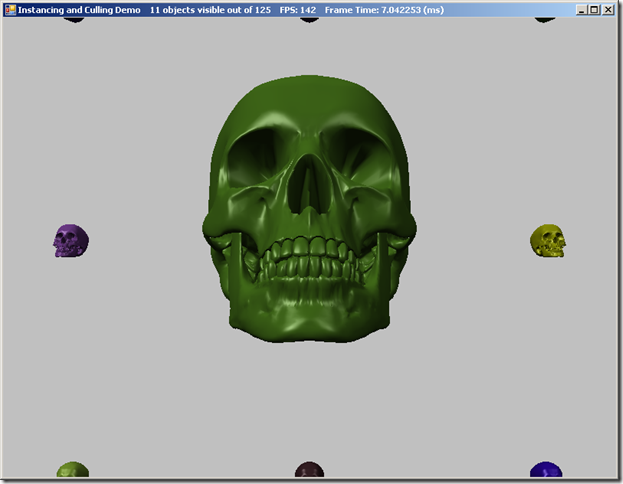

The results of using instancing are very interesting. At least on the hardware I am currently using (an older junk integrated graphics chipset), instancing was actually slower than using individual draw calls when rendering the high-poly skull mesh. Switching to a much simpler cube mesh with only 12 polygons, however, the instanced method was orders of magnitude faster than the non-instanced version. You can see the results in the table below. I bumped up the number of instances to 1000 (10x10x10 grid), to amplify the effect; the frametime for the skull screenshots is from the command-line output, as the time was greater than the 1 second maximum that the in-application frame timer can handle.

| Instanced | Non-Instanced | |

| Skull |  Frame Time: 1850 msec |

Frame Time: 1450 msec |

| Box |  Frame Time: 2.7 msec |

Frame Time: 62.5 msec |

From this, I think we can infer that if you are going to use instancing, it pays to use low-poly models. This stackoverflow discussion raises some interesting points.

However, you may have noticed that, while we are drawing 1000 objects in these examples, we can only see a small subset of all those objects. The GPU culls these invisible objects for us, but only after they have gone through the bulk of the rendering pipeline. Filtering out the invisible objects before they are submitted to the GPU is the idea behind the technique of Frustum Culling.

Frustum Culling

Our 3D virtual camera sees a volume in our 3D scene defined by the view/projection matrix. This can be represented by the geometric solid known as a frustum, which resembles a pyramid with the top chopped off. SlimDX does not feature a frustum class that we can use (at least in the latest versions; there appears to have once been a BoundingFrustum class which has been removed), thus we’ll need to write our own. This is fairly simple; we can effectively represent a frustum using six inward-facing planes. The following frustum implementation is based on Chapter 5 of Carl Granberg’s Programming an RTS Game with Direct3D. It is possibly less performant than the method Mr. Luna presents, but as that method is highly dependant on an XNAMath based collision library that I found more difficult to port to C# than Mr. Granberg’s method, I used that instead.

Our frustum class is very simple. It consists of an array of six SlimDX planes. One constructs a Frustum by passing in a view/projection matrix which defines the space visible to the camera.

public class Frustum { private readonly Plane[] _frustum; public Frustum(Matrix vp) { _frustum = new[] { new Plane(vp.M14 + vp.M11, vp.M24 + vp.M21, vp.M34 + vp.M31, vp.M44 + vp.M41), new Plane(vp.M14 - vp.M11, vp.M24 - vp.M21, vp.M34 - vp.M31, vp.M44 - vp.M41), new Plane(vp.M14 - vp.M12, vp.M24 - vp.M22, vp.M34 - vp.M32, vp.M44 - vp.M42), new Plane(vp.M14 + vp.M12, vp.M24 + vp.M22, vp.M34 + vp.M32, vp.M44 + vp.M42), new Plane(vp.M13, vp.M23, vp.M33, vp.M43), new Plane(vp.M14 - vp.M13, vp.M24 - vp.M23, vp.M34 - vp.M33, vp.M44 - vp.M43) }; foreach (var plane in _frustum) { plane.Normalize(); } } public static Frustum FromViewProj(Matrix vp) { var ret = new Frustum(vp); return ret; } // ...snip... }

To study the math involved in creating these planes from the view/projection matrix, I would advise you to pick up a copy of Mr. Granberg’s book (it’s an excellent read for many reasons; I plan on doing more tutorials drawn from it after I finish Mr. Luna’s book), although it is now out of print and goes for an exorbitant price on Amazon; this blog article also describes the technique.

Once we have created a Frustum, we need to check each object in our scene to see if it intersects with the frustum (for a real game, you would probably want to use a more advanced space-partitioning algorithm, but checking each object works for our demo.). Since our skull mesh is very high-poly, we don’t want to perform a per-triangle intersection test, as it would be very expensive. There are four commonly used techniques for approximating the volume of a mesh for faster intersection tests:

- Aligned Bounding Boxes – For each object, define a box that completely encloses the object, with the box edges aligned to the world X, Y and Z axes. This is the fastest technique, as we only need to store two of the opposing corners of the box, and we can use the dot product of the frustum planes with these corner points to determine if the box is in front or behind the plane. The cons of this approach is that an aligned bounding box can be considerably larger than the contained mesh, depending on the mesh geometry and its orientation. Imagine the bounding box of a giant tarantula at a 45 degree angle to the world axes; most of the bounding box will consist of the negative space of the mesh.

- Oriented Bounding Boxes – Similar to aligned bounding boxes, except that we also store a rotation for the box to match the object’s orientation. This means that the oriented bounding box will typically occupy less volume than an aligned bounding box, although we need to perform an additional transformation to either move the box or the intersecting volume into the same coordinate system.

- Bounding Spheres – We represent the mesh volume using a center point and a radius to define a sphere which completely encloses the mesh. Depending on the mesh geometry, this can be more effective, if the mesh is more spherical than boxy.

- Simplified Meshes – We can also use a simplified, lower-polygon mesh to approximate the volume of the mesh. This is more complicated than the other methods; we will need to either have an artist define the low-poly model or generate it using a simplification algorithm, and then intersect each polygon in the simplified mesh.

For frustum culling, using an aligned bounding box is usually the best mix of speed and complexity. The accuracy gains from the more exact methods of volume approximation in many cases will not outweigh the additional cost of the intersection tests; rendering an extra handful of objects that will be clipped by the GPU is usually not a big deal. Additionally, SlimDX comes with an aligned bounding box class, BoundingBox, with most of the operations we will need already defined.

Using the SlimDX Plane and BoundingBox classes, testing the frustum for intersection with a bounding box becomes very simple.

// Return values: 0 = no intersection, // 1 = intersection, // 2 = box is completely inside frustum public int Intersect(BoundingBox box) { var totalIn = 0; foreach (var plane in _frustum) { var intersection = Plane.Intersects(plane, box); if (intersection == PlaneIntersectionType.Back) return 0; if (intersection == PlaneIntersectionType.Front) { totalIn++; } } if (totalIn == 6) { return 2; } return 1; }

Next, we just need to add a Frustum to our Camera class, and make sure that we update it when the view matrix changes, like so:

_frustum = Frustum.FromViewProj(ViewProj);

Creating a BoundingBox for the Skull Mesh

The simplest way to create a bounding box is to loop the vertices of a mesh and select the largest and smallest [X,Y,Z] components. These will define the minimum size that the bounding box must be, in object-space. We create the bounding box for our skull mesh in our BuildSkullGeometryBuffers() method, as we are loading in the mesh data from file. We can use the SlimDX Vector3.Maximize and Minimize methods to select the greatest and smallest components of the position component of each vertex.

private void BuildSkullGeometryBuffers() { try { var min = new Vector3(float.MaxValue, float.MaxValue, float.MaxValue); var max = new Vector3(float.MinValue, float.MinValue, float.MinValue); var vertices = new List<Basic32>(); var indices = new List<int>(); var vcount = 0; var tcount = 0; using (var reader = new StreamReader("Models\\skull.txt")) { // skip extraneous loading steps // Get the vertices for (int i = 0; i < vcount; i++) { input = reader.ReadLine(); if (input != null) { var vals = input.Split(new[] { ' ' }); var position = new Vector3(Convert.ToSingle(vals[0].Trim()), Convert.ToSingle(vals[1].Trim()), Convert.ToSingle(vals[2].Trim())); // snip... min = Vector3.Minimize(min, position); max = Vector3.Maximize(max, position); } } _skullBox = new BoundingBox(min, max);

Combining Culling and Instancing

We are going to combine frustum culling and instancing to draw our scene as efficiently as possible. We will do this by looping over the in-memory copy of our instance data, transforming the bounding box for our skull mesh to the world position of the instance, and testing the transformed bounding box against the camera frustum. If the object is visible, we write the instance data to our instance buffer, and increment our count of visible objects, which we will later use to draw the correct number of visible instances. We provide the option to switch between culling and non-culling, so that one can observe the difference in frame time between the two methods. We also append the number of visible objects to the application title bar. One can see this difference in the top image, using culling, and the bottom, with culling disabled.

_visibleObjectCount = 0; if (_frustumCullingEnabled) { var db = ImmediateContext.MapSubresource(_instanceBuffer, MapMode.WriteDiscard, MapFlags.None); foreach (var instancedData in _instancedData) { var w = instancedData.World; var box = new BoundingBox( Vector3.TransformCoordinate(_skullBox.Minimum, w), Vector3.TransformCoordinate(_skullBox.Maximum, w) ); if (_cam.Visible(box)) { db.Data.Write(instancedData); _visibleObjectCount++; } } ImmediateContext.UnmapSubresource(_instanceBuffer, 0); } else { var db = ImmediateContext.MapSubresource(_instanceBuffer, MapMode.WriteDiscard, MapFlags.None); foreach (var instancedData in _instancedData) { db.Data.Write(instancedData); _visibleObjectCount++; } ImmediateContext.UnmapSubresource(_instanceBuffer, 0); }

Next Time…

Next up, we’ll take a brief hiatus from Mr. Luna’s examples, and implement a new type of camera class, a LookAt camera, which is commonly found in strategy/simulation games. This will be similar to the camera in our earlier examples, but extracted out and following the pattern of our FPS camera. We’ll also pull out a base camera class, so that we can interact with both camera types using a common interface.

This was a great article, thank you. Just wanted to add that you can greatly increase your performance by changing the plane order to test near & far planes first.

ReplyDelete- ex: near, far, left, right, top, bottom

Changing the order doubled my FPS (from ~32 to ~65)

Thanks, glad you found it useful.

DeleteThanks for the tip, I'll give it a try. If you want to send me your name and anything you'd like me to link to, I'll credit you when I update this post.