So far, we have either worked with procedurally generated meshes, like our boxes and cylinders, or loaded very simple text-based mesh formats. For any kind of real application, however, we will need to be able to load meshes created by artists using 3D modeling and animation programs, like Blender or 3DS Max. There are a number of potential solutions to this problem; we could write an importer to read the specific file format of the program we are most likely to use for our engine. This presents a new host of problems, however: unless we write another importer, we are limited to models created with that modeling software, or we will have to rely on or create plugins for our modeling software to convert models created using different software. There are myriad 3D model formats in more-or-less common use, some of which are open and some of which are proprietary; for many of both, good, authoritative documentation is surprisingly hard to find. All this makes creating a general-purpose, cross-format model importer a daunting task.

Fortunately, there exists an open-source library with support for loading most of the commonly used model formats: ASSIMP, the Open Asset Import Library. Although Assimp is written in C++, there is a .NET wrapper, AssimpNet, which we will be able to use directly in our code, without the hassle of wrapping the native Assimp library ourselves. I am not a lawyer, but it looks like both Assimp and AssimpNet have very permissive licenses that should allow one to use them in any kind of hobby or professional project.

While we can use Assimp to load our model data, we will need to create our own C# model class to manage and render the model. For that, I will be following the example of Chapter 23 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0. This example will not be a straight conversion of his example code, since I will be ditching the m3d model format which he uses, and instead loading models from the standard old Microsoft DirectX X format. The models I will be using come from the example code for Chapter 4 of Carl Granberg’s Programming an RTS Game with Direct3D, although you may use any of the supported Assimp model formats if you want to use other meshes instead. The full source for this example can be found on my GitHub repository, at https://github.com/ericrrichards/dx11.git, under the AssimpModel project.

Representing Mesh Geometry

We will need to create a C# class to maintain and render our model’s geometry. In DirectX 9, the D3DX utility library provided an ID3DXMesh interface which fulfilled this task; however, D3DX for Direct3D 11 has no equivalent mesh interface, and the D3DX library as a whole has since been deprecated. Fortunately, the functionality that we require is relatively simple to implement. Our MeshGeometry class maintains the following data:

- A vertex buffer for the entire mesh

- An index buffer for the entire mesh

- A subset table – Models can be composed of different sections, which should be rendered with different materials or textures. To render a subset correctly, we need to store where the subset starts in both the vertex and index buffers, and how many vertices and faces (triangles) it contains. The Subset class below stores the index-buffer values in units of faces, at three indices per face.

- The vertex stride of the vertices in the vertex buffer.

Because we will need to manage DirectX resources, we will derive our MeshGeometry class from our DisposableClass base type and override the Dispose method to clean up those resources. The declaration of our MeshGeometry class is below:

public class MeshGeometry : DisposableClass {

public class Subset {

public int VertexStart;

public int VertexCount;

public int FaceStart;

public int FaceCount;

}

private Buffer _vb;

private Buffer _ib;

private int _vertexStride;

private List<Subset> _subsetTable;

private bool _disposed;

protected override void Dispose(bool disposing) {

if (!_disposed) {

if (disposing) {

Util.ReleaseCom(ref _vb);

Util.ReleaseCom(ref _ib);

}

_disposed = true;

}

base.Dispose(disposing);

}

}

To create the vertex and index buffers, and set the subset table, we will provide the following functions. Creating the buffers should look fairly familiar by now, and setting the subsets is trivial. We will use a generic vertex type, which will allow us to create models using different effects; e.g., we can create a model with normal or displacement mapping by using Vertex.PosNormalTexTan, or simple lit, textured models using Vertex.Basic32. We also need to calculate the vertex stride when we create the vertex buffer.

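One limitation of this code is that, because we store indices as signed shorts, it only supports models whose vertex indices do not exceed 32,767 (short.MaxValue). For most games, we tend to use relatively low-poly models anyway, but if you are using more detailed models, you will want to change the code to use 32-bit ints for indices instead, and pass Format.R32_UInt rather than Format.R16_UInt when binding the index buffer. Direct3D 11 index buffers are either 16-bit or 32-bit, so longs are not an option.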

public void SetVertices<TVertexType>(Device device, List<TVertexType> vertices) where TVertexType : struct {

Util.ReleaseCom(ref _vb);

_vertexStride = Marshal.SizeOf(typeof (TVertexType));

var vbd = new BufferDescription(

_vertexStride*vertices.Count,

ResourceUsage.Immutable,

BindFlags.VertexBuffer,

CpuAccessFlags.None,

ResourceOptionFlags.None,

0

);

_vb = new Buffer(device, new DataStream(vertices.ToArray(), false, false), vbd);

}

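public void SetIndices(Device device, List<short> indices) {

// release any previously created index buffer, as SetVertices does for _vb

Util.ReleaseCom(ref _ib);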

var ibd = new BufferDescription(

sizeof (short)*indices.Count,

ResourceUsage.Immutable,

BindFlags.IndexBuffer,

CpuAccessFlags.None,

ResourceOptionFlags.None,

0

);

_ib = new Buffer(device, new DataStream(indices.ToArray(), false, false), ibd);

}

public void SetSubsetTable(List<Subset> subsets) {

_subsetTable = subsets;

}
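The 16-bit index limit mentioned above is easy to trip over silently, because an unchecked cast to short simply wraps around. As a minimal sketch of a guard, the hypothetical helper below (IndexUtil is my own name, not part of the MeshGeometry class) converts 32-bit indices to shorts and throws if any index is too large:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical helper, not part of the original MeshGeometry class.
public static class IndexUtil {
    // Converts 32-bit indices to shorts, throwing instead of silently
    // wrapping when an index does not fit in a signed 16-bit value.
    public static List<short> ToShortIndices(IEnumerable<uint> indices) {
        var result = new List<short>();
        foreach (var i in indices) {
            if (i > (uint)short.MaxValue) {
                throw new OverflowException(
                    "Index " + i + " exceeds short.MaxValue; use 32-bit indices instead.");
            }
            result.Add((short)i);
        }
        return result;
    }
}
```

A loader could run its indices through something like this before calling SetIndices, so that an oversized model fails loudly at load time rather than rendering garbage.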

Drawing the MeshGeometry is relatively simple as well. We set the vertex and index buffers of the passed-in DeviceContext to the MeshGeometry’s buffers, then draw the desired subset using a DrawIndexed call and the subset table data for the subset. The MeshGeometry does not know about any shader effect variables or state; it simply draws the geometry. Any textures or transformations will need to be set before calling MeshGeometry.Draw().

public void Draw(DeviceContext dc, int subsetId) {

const int offset = 0;

dc.InputAssembler.SetVertexBuffers(0, new VertexBufferBinding(_vb, _vertexStride, offset));

dc.InputAssembler.SetIndexBuffer(_ib, Format.R16_UInt, 0);

dc.DrawIndexed(_subsetTable[subsetId].FaceCount*3, _subsetTable[subsetId].FaceStart*3, 0);

}
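The only arithmetic in Draw() is the conversion from faces to indices. As a small illustration, with a hypothetical FaceRange struct standing in for the two Subset fields that Draw() reads, the DrawIndexed arguments work out like this:

```csharp
using System;

// Hypothetical stand-in for MeshGeometry.Subset, reduced to the fields Draw uses.
public struct FaceRange {
    public int FaceStart;
    public int FaceCount;
}

public static class DrawArgs {
    // Each face of a triangle list consumes three indices, so both the index
    // count and the starting index are the face values multiplied by three.
    public static void ForSubset(FaceRange subset, out int indexCount, out int startIndex) {
        indexCount = subset.FaceCount * 3;
        startIndex = subset.FaceStart * 3;
    }
}
```

For a subset that starts at face 10 and contains 4 faces, this yields 12 indices beginning at index 30. The base vertex argument can stay at 0 because, as we will see when loading the model, the indices are already offset into the whole-model vertex buffer.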

BasicModel Class

A 3D model is more than just geometry data. We will also need to manage the materials and textures, perform intersection tests against the model, and other operations. Therefore, we will create a model class, named BasicModel (so called because we’ll develop other, more complicated model classes later), which will contain the MeshGeometry and the other associated mesh data. This data will consist of:

- A copy of the MeshGeometry subset table – We will want to query the number of subsets present in the mesh, and at present, our MeshGeometry class does not provide access to its subset table. Additionally, we will need to create a subset table in the BasicModel class anyway, when we load the model file, so we will simply cache this table after creating it.

- An in-memory copy of the vertices for the MeshGeometry – Again, we will be generating this data when we load the mesh, and it can be handy if we want to implement fine-grain hit testing for collisions or picking. We will only be supporting our PosNormalTexTan vertex structure, for our NormalMap and DisplacementMap effects here; we can disable normal/displacement mapping by setting the normal map texture in the effect to null to approximate the functionality of our BasicEffect, if desired.

- An in-memory copy of the index data for the MeshGeometry.

- The MeshGeometry itself.

- A list containing the Material for each subset in the model.

- A list containing the diffuse texture for each subset in the model.

- A list containing the normal map texture for each subset in the model. Not all models will have normal maps, so subsets without normal maps will be represented by nulls.

- A BoundingBox that represents the min/max extents of the model mesh.

Because the BasicModel contains a MeshGeometry instance, which contains unmanaged resources, we will again subclass our DisposableClass, and override the Dispose() method to clean up these resources. The textures will be handled somewhat differently, as we will soon see.

public class BasicModel : DisposableClass {

private bool _disposed;

private readonly List<MeshGeometry.Subset> _subsets;

private readonly List<PosNormalTexTan> _vertices;

private readonly List<short> _indices;

private MeshGeometry _modelMesh;

public List<Material> Materials { get; private set; }

public List<ShaderResourceView> DiffuseMapSRV { get; private set; }

public List<ShaderResourceView> NormalMapSRV { get; private set; }

public BoundingBox BoundingBox { get; private set; }

public MeshGeometry ModelMesh { get { return _modelMesh; } }

public int SubsetCount { get { return _subsets.Count; } }

protected override void Dispose(bool disposing) {

if (!_disposed) {

if (disposing) {

Util.ReleaseCom(ref _modelMesh);

}

_disposed = true;

}

base.Dispose(disposing);

}

}

Creating a BasicModel

To create a BasicModel, we pass in our DirectX11 device, the filename of the model file to load, and a path to the directory we are storing the model textures in. Using a separate path for the textures allows us some more flexibility in how we set up our application’s file system; most model exporters assume that the model textures are exported to the same directory as the model file, but we may wish to group our art assets by type instead of lumping them all together. We also need to pass in an instance of a new class, TextureManager, which we will implement shortly.

Our first step is to initialize all of our member containers, to prepare them for adding the imported model data. Next, we create an instance of the AssimpNet AssimpImporter class. This class does all the magic of loading the model file and extracting the model data into a format that we can access. Before we load the model file, using ImportFile(), we check to see if the model file is of a format supported by Assimp. If so, we continue on to import the model file. Assimp provides a number of optional post-processing steps that can be performed on the imported model; here, we ensure that the imported model will have normal and tangent vectors by using the GenerateSmoothNormals and CalculateTangentSpace flags. If the mesh does not have normals or tangents, Assimp will calculate them for us from the mesh geometry. This function will return us an Assimp.Scene object if it was able to successfully import the model, which contains all of the mesh information.

public BasicModel(Device device, TextureManager texMgr, string filename, string texturePath) {

_subsets = new List<MeshGeometry.Subset>();

_vertices = new List<PosNormalTexTan>();

_indices = new List<short>();

DiffuseMapSRV = new List<ShaderResourceView>();

NormalMapSRV = new List<ShaderResourceView>();

Materials = new List<Material>();

_modelMesh = new MeshGeometry();

var importer = new AssimpImporter();

if (!importer.IsImportFormatSupported(Path.GetExtension(filename))) {

throw new ArgumentException("Model format " + Path.GetExtension(filename) + " is not supported! Cannot load " + filename, "filename");

}

var model = importer.ImportFile(filename, PostProcessSteps.GenerateSmoothNormals | PostProcessSteps.CalculateTangentSpace);

Loading Vertex and Index Data

The subsets of the imported mesh are stored in the Meshes collection of the Scene object returned by ImportFile. To extract each subset, we’ll iterate over this collection. Our first step is to create the subset table entry. Assimp’s Mesh class exposes the number of vertices and faces in the subset for us, while the offsets into the vertex and index buffer for the current subset will be the current size of the in-memory copies of the vertex and index data. Next, we process each vertex in the imported mesh, extracting the position and texture coordinates, and the normal and tangent vectors. Once we have this data, we can create an instance of our PosNormalTexTan vertex structure, and add the vertex to a temporary list of vertices for the subset. As we read in the mesh vertices, we update our bounding box extent coordinates, so that we can then create the bounding box. After we have extracted all the vertices and stored them in our temp list, we need to add them to our in-memory copy of the full model vertex data. Finally, we extract the indices from the Assimp Mesh, convert them into shorts for our MeshGeometry class, and offset them to their proper references in our whole-model vertex buffer.

foreach (var mesh in model.Meshes) {

var verts = new List<PosNormalTexTan>();

var subset = new MeshGeometry.Subset {

VertexCount = mesh.VertexCount,

VertexStart = _vertices.Count,

FaceStart = _indices.Count / 3,

FaceCount = mesh.FaceCount

};

_subsets.Add(subset);

// bounding box corners

var min = new Vector3(float.MaxValue);

var max = new Vector3(float.MinValue);

for (var i = 0; i < mesh.VertexCount; i++) {

var pos = mesh.HasVertices ? mesh.Vertices[i].ToVector3() : new Vector3();

min = Vector3.Minimize(min, pos);

max = Vector3.Maximize(max, pos);

var norm = mesh.HasNormals ? mesh.Normals[i] : new Vector3D();

var texC = mesh.HasTextureCoords(0) ? mesh.GetTextureCoords(0)[i] : new Vector3D();

var tan = mesh.HasTangentBasis ? mesh.Tangents[i] : new Vector3D();

var v = new PosNormalTexTan(pos, norm.ToVector3(), texC.ToVector2(), tan.ToVector3());

verts.Add(v);

}

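// grow the model's bounding box to include this subset, rather than overwriting it

BoundingBox = _subsets.Count == 1

? new BoundingBox(min, max)

: new BoundingBox(Vector3.Minimize(BoundingBox.Minimum, min), Vector3.Maximize(BoundingBox.Maximum, max));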

_vertices.AddRange(verts);

var indices = mesh.GetIndices().Select(i => (short)(i + (uint)subset.VertexStart)).ToList();

_indices.AddRange(indices);
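Stripped of the Assimp and SlimDX types, the offset bookkeeping in the loop above reduces to a few lines. The sketch below uses hypothetical stand-ins (SourceMesh, SubsetEntry and SubsetMerge are my own names) and plain ints, just to show how the subset table and the rebased indices relate:

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical stand-in for an imported mesh: a vertex count plus indices
// that are local to that mesh, three per face.
public sealed class SourceMesh {
    public int VertexCount;
    public int[] Indices;
}

// Hypothetical stand-in for MeshGeometry.Subset.
public sealed class SubsetEntry {
    public int VertexStart, VertexCount, FaceStart, FaceCount;
}

public static class SubsetMerge {
    // Appends every mesh's indices to mergedIndices, shifted so they refer to
    // the combined vertex list, and returns one subset entry per mesh.
    public static List<SubsetEntry> Merge(IEnumerable<SourceMesh> meshes, List<int> mergedIndices) {
        var table = new List<SubsetEntry>();
        var vertexTotal = 0;
        foreach (var mesh in meshes) {
            var entry = new SubsetEntry {
                VertexStart = vertexTotal,
                VertexCount = mesh.VertexCount,
                FaceStart = mergedIndices.Count / 3,
                FaceCount = mesh.Indices.Length / 3
            };
            table.Add(entry);
            // Shift each local index by the number of vertices already merged.
            mergedIndices.AddRange(mesh.Indices.Select(i => i + entry.VertexStart));
            vertexTotal += mesh.VertexCount;
        }
        return table;
    }
}
```

Merging a 3-vertex triangle with a 4-vertex quad, for example, leaves the triangle's indices untouched and shifts every index of the quad up by 3.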

One thing you may have noticed here is that we have some conversion functions (ToVector3, ToVector2) operating on the Assimp vector types. These are extension methods that we need to define in our Util.cs utility class. Assimp has its own math and Material types, which we will need to convert to their equivalent SlimDX types before we can use them in the rest of our engine. The conversion functions for the Assimp Vector, Material, Matrix4x4 and Quaternion classes are below:

public static Vector3 ToVector3(this Vector4 v) {

return new Vector3(v.X, v.Y, v.Z);

}

public static Vector3 ToVector3(this Vector3D v) {

return new Vector3(v.X, v.Y, v.Z);

}

public static Vector2 ToVector2(this Vector3D v) {

return new Vector2(v.X, v.Y);

}

public static Material ToMaterial(this Assimp.Material m) {

var ret = new Material {

Ambient = new Color4(m.ColorAmbient.A, m.ColorAmbient.R, m.ColorAmbient.G, m.ColorAmbient.B),

Diffuse = new Color4(m.ColorDiffuse.A, m.ColorDiffuse.R, m.ColorDiffuse.G, m.ColorDiffuse.B),

Specular = new Color4(m.Shininess, m.ColorSpecular.R, m.ColorSpecular.G, m.ColorSpecular.B),

Reflect = new Color4(m.ColorReflective.A, m.ColorReflective.R, m.ColorReflective.G, m.ColorReflective.B)

};

if (ret.Ambient == new Color4(0, 0, 0, 0)) {

ret.Ambient = Color.Gray;

}

if (ret.Diffuse == new Color4(0, 0, 0, 0) || ret.Diffuse == Color.Black) {

ret.Diffuse = Color.White;

}

if (m.ColorSpecular == new Color4D(0, 0, 0, 0) || m.ColorSpecular == new Color4D(0,0,0)) {

ret.Specular = new Color4(ret.Specular.Alpha, 0.5f, 0.5f, 0.5f);

}

return ret;

}

public static Matrix ToMatrix(this Matrix4x4 m) {

var ret = Matrix.Identity;

for (int i = 0; i < 4; i++) {

for (int j = 0; j < 4; j++) {

ret[i, j] = m[i+1, j+1];

}

}

return ret;

}

public static Quaternion ToQuat(this Assimp.Quaternion q) {

return new Quaternion(q.X, q.Y, q.Z, q.W);

}

One note on the ToMaterial() function: the models that I was loading may not have had materials defined for them, so I was getting a lot of black or transparent materials. That is the reason for the ifs and default values.

Loading Materials and Textures

The Assimp.Scene class stores the imported materials for a model in the Materials collection. We can access the correct material for a model subset through the Mesh.MaterialIndex property. Once we have extracted the correct material for the subset, we can convert it to our Material structure and add the result to the BasicModel.Materials list.

With Assimp, textures are associated with a material. Thus, we are not finished with the Assimp material after we have extracted the lighting properties. We can extract information about a texture using the Assimp Material.GetTexture method. We will make the assumption that the models we load only have one texture per subset, so we will always be calling GetTexture(TextureType, 0). The TextureSlot object that is returned from this call has a number of properties, but the only one that we’ll worry about for now is the FilePath, which in most cases will be the filename of the texture image source file. Once we have extracted the texture filename, we will use the aforementioned TextureManager class to load the texture as a ShaderResourceView.

Many models that you want to load may not have normal map textures baked into the model. If the normal map texture is defined by the modeling software, we will load that; otherwise, we will look for an image of the same format, named with the diffuse texture filename plus the suffix “_nmap.” This gives us a way to specify normal maps for a model that was not exported with normal maps; the normal maps in this example are of this sort, generated from the diffuse textures using the GIMP normalmap plugin.

For whatever reason, I was not able to get .tga textures to load, so if you have models that use .tga textures, you will need to convert them to .pngs, and you should be able to load them without modifying the model file.

var mat = model.Materials[mesh.MaterialIndex];

var material = mat.ToMaterial();

Materials.Add(material);

var diffusePath = mat.GetTexture(TextureType.Diffuse, 0).FilePath;

if (Path.GetExtension(diffusePath) == ".tga") {

// DirectX doesn't like to load tgas, so you will need to convert them to pngs yourself with an image editor

diffusePath = diffusePath.Replace(".tga", ".png");

}

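if (!string.IsNullOrEmpty(diffusePath)) {

DiffuseMapSRV.Add(texMgr.CreateTexture(Path.Combine(texturePath, diffusePath)));

} else {

// keep DiffuseMapSRV aligned with the subset table, since drawing indexes it by subset

DiffuseMapSRV.Add(null);

}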

var normalPath = mat.GetTexture(TextureType.Normals, 0).FilePath;

if (!string.IsNullOrEmpty(normalPath)) {

NormalMapSRV.Add(texMgr.CreateTexture(Path.Combine(texturePath, normalPath)));

} else {

var normalExt = Path.GetExtension(diffusePath);

normalPath = Path.GetFileNameWithoutExtension(diffusePath) + "_nmap" + normalExt;

NormalMapSRV.Add(texMgr.CreateTexture(Path.Combine(texturePath, normalPath)));

}

} /*end mesh foreach loop*/
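The filename handling above can be pulled out into a couple of small functions. This is only a sketch: TextureNames is a hypothetical helper, and unlike the loader code it compares the extension case-insensitively and only rewrites the extension itself, rather than every occurrence of ".tga" in the path.

```csharp
using System;
using System.IO;

// Hypothetical helper, not part of the original BasicModel class.
public static class TextureNames {
    // Swaps a .tga extension for .png, mirroring the workaround in the loader.
    public static string ReplaceTga(string path) {
        if (string.IsNullOrEmpty(path)) return path;
        var ext = Path.GetExtension(path);
        return string.Equals(ext, ".tga", StringComparison.OrdinalIgnoreCase)
            ? Path.ChangeExtension(path, ".png")
            : path;
    }

    // Builds the fallback normal-map file name: <diffuse name>_nmap<extension>.
    // Returns null when there is no diffuse texture to derive a name from.
    public static string NormalMapFor(string diffusePath) {
        if (string.IsNullOrEmpty(diffusePath)) return null;
        return Path.GetFileNameWithoutExtension(diffusePath) + "_nmap" + Path.GetExtension(diffusePath);
    }
}
```

With these, a diffuse texture named stone.png (an illustrative name) would map to a normal map named stone_nmap.png, which is then combined with the texture directory exactly as in the loader.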

As a final step, we need to set the vertex and index buffers, and the subset table for the BasicModel’s MeshGeometry object, after we have extracted the data from each subset of the Assimp Scene object that we have imported.

_modelMesh.SetSubsetTable(_subsets);

_modelMesh.SetVertices(device, _vertices);

_modelMesh.SetIndices(device, _indices);

TextureManager Class

When we are loading models, we might encounter a situation where multiple models use the same texture files. Rather than have each model load separate copies of that data, it would be better to have a central repository of texture files, in order to save memory and ensure that a texture is only loaded once. Thus, we will create a new TextureManager class. Essentially, our TextureManager encapsulates a Dictionary of (file path, texture) values, along with the necessary code to create and destroy textures.

public class TextureManager :DisposableClass {

private bool _disposed;

private Device _device;

private readonly Dictionary<string, ShaderResourceView> _textureSRVs;

public TextureManager() {

_textureSRVs = new Dictionary<string, ShaderResourceView>();

}

protected override void Dispose(bool disposing) {

if (!_disposed) {

if (disposing) {

foreach (var key in _textureSRVs.Keys) {

var shaderResourceView = _textureSRVs[key];

Util.ReleaseCom(ref shaderResourceView);

}

_textureSRVs.Clear();

}

_disposed = true;

}

base.Dispose(disposing);

}

public void Init(Device device) {

_device = device;

}

public ShaderResourceView CreateTexture(string path) {

if (!_textureSRVs.ContainsKey(path)) {

if (File.Exists(path)) {

_textureSRVs[path] = ShaderResourceView.FromFile(_device, path);

} else {

return null;

}

}

return _textureSRVs[path];

}

}
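The caching pattern in CreateTexture() is not specific to textures. As a sketch of the same idea in isolation, here is a hypothetical generic ResourceCache (my own name) that takes the loading function as a parameter instead of calling ShaderResourceView.FromFile directly:

```csharp
using System;
using System.Collections.Generic;

// Hypothetical generic version of the caching idea; TextureManager itself is
// specialised to ShaderResourceView.
public sealed class ResourceCache<T> where T : class {
    private readonly Dictionary<string, T> _items = new Dictionary<string, T>();
    private readonly Func<string, T> _load;

    public ResourceCache(Func<string, T> load) {
        _load = load;
    }

    // Returns the cached item for path, loading it on first use. A loader that
    // returns null (for example, because the file is missing) is not cached,
    // so a later call will try again.
    public T Get(string path) {
        T item;
        if (_items.TryGetValue(path, out item)) return item;
        item = _load(path);
        if (item != null) _items[path] = item;
        return item;
    }
}
```

Requesting the same path twice invokes the loader only once and hands back the same object both times, which is exactly the behavior we want when several models share a texture.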

BasicModelInstance Class

Similar to how we want to avoid duplicating the processing and memory costs of reloading the same texture multiple times, we will only want to load a given model once. However, it is very likely that we will want to display multiple copies of the model in our application. To that end, we will create a type to represent a single instance of a model, along with the transformations necessary to locate the model instance at its correct position, size and orientation in world-space. The BasicModelInstance struct is as follows:

public struct BasicModelInstance {

public BasicModel Model;

public Matrix World;

public BoundingBox BoundingBox {

get {

return new BoundingBox(

Vector3.TransformCoordinate(Model.BoundingBox.Minimum, World),

Vector3.TransformCoordinate(Model.BoundingBox.Maximum, World)

);

}

}

}

Note that to get the bounding box of a given BasicModelInstance, we have to transform the BasicModel’s BoundingBox into world space. Since we are using axis-aligned bounding boxes and only transform the two extreme corners, the result is only approximate if the model instance is rotated: the transformed corners may no longer be the component-wise minimum and maximum, and the box may not fully enclose the model. You will want to use a more fine-grained method of intersection testing for collision testing or picking, but this is a quick, cheap way of determining visibility for frustum culling, or for a first culling step when doing collisions.
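If you need a world-space box that is guaranteed to enclose a rotated instance, one common alternative is to transform all eight corners and take the extents of the results. The sketch below is not what BasicModelInstance does; it uses System.Numerics types instead of SlimDX so that it stands alone, and Aabb is a hypothetical helper name.

```csharp
using System;
using System.Numerics;

// Hypothetical helper, written against System.Numerics rather than SlimDX.
public static class Aabb {
    // Transforms all eight corners of the box [min, max] by world and returns
    // the axis-aligned box that encloses the transformed corners.
    public static void Transform(Vector3 min, Vector3 max, Matrix4x4 world,
                                 out Vector3 newMin, out Vector3 newMax) {
        newMin = new Vector3(float.MaxValue);
        newMax = new Vector3(float.MinValue);
        for (var i = 0; i < 8; i++) {
            // Bits 0..2 of i select min or max on the x, y and z axes.
            var corner = new Vector3(
                (i & 1) == 0 ? min.X : max.X,
                (i & 2) == 0 ? min.Y : max.Y,
                (i & 4) == 0 ? min.Z : max.Z);
            var p = Vector3.Transform(corner, world);
            newMin = Vector3.Min(newMin, p);
            newMax = Vector3.Max(newMax, p);
        }
    }
}
```

For a box from (0, 0, 0) to (2, 1, 1) rotated 90 degrees about the z-axis, this gives roughly (-1, 0, 0) to (0, 2, 1). Transforming only the two original corners would instead produce a "maximum" whose x component is smaller than the minimum's. The cost is eight transforms instead of two, which is still cheap enough for culling.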

To draw the model instance, I’ve added an additional Draw() function. This is not strictly necessary, as all of the code that occurs in the Draw() function could be run outside of it, since all of the necessary Model and ModelInstance properties are public. I just got sick of repeating the same calls to set up the shader effect variables and draw all the subsets of each mesh with slightly different values each time I needed to draw a ModelInstance. If you wanted to use a different Effect to draw the mesh than our NormalMapEffect, you would need to create another similar function to set the appropriate shader variables for that effect.

// BasicModelInstance

public void Draw(DeviceContext dc, EffectPass effectPass, Matrix viewProj) {

var world = World;

var wit = MathF.InverseTranspose(world);

var wvp = world * viewProj;

Effects.NormalMapFX.SetWorld(world);

Effects.NormalMapFX.SetWorldInvTranspose(wit);

Effects.NormalMapFX.SetWorldViewProj(wvp);

Effects.NormalMapFX.SetTexTransform(Matrix.Identity);

for (int i = 0; i < Model.SubsetCount; i++) {

Effects.NormalMapFX.SetMaterial(Model.Materials[i]);

Effects.NormalMapFX.SetDiffuseMap(Model.DiffuseMapSRV[i]);

Effects.NormalMapFX.SetNormalMap(Model.NormalMapSRV[i]);

effectPass.Apply(dc);

Model.ModelMesh.Draw(dc, i);

}

}
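The world inverse-transpose matrix passed to the effect is what keeps normals perpendicular to their surfaces under non-uniform scaling. The body of MathF.InverseTranspose is not shown in this post, so the following is only a sketch of the usual approach (zero the translation, invert, transpose), written against System.Numerics with a hypothetical NormalMatrix helper:

```csharp
using System;
using System.Numerics;

// Hypothetical helper; assumes the common "drop translation, invert, transpose"
// recipe rather than reproducing the engine's own MathF.InverseTranspose.
public static class NormalMatrix {
    public static Matrix4x4 InverseTranspose(Matrix4x4 world) {
        // Normals are directions, so the translation part must not affect them.
        world.Translation = Vector3.Zero;
        Matrix4x4 inverse;
        if (!Matrix4x4.Invert(world, out inverse)) {
            throw new ArgumentException("World matrix is not invertible.", "world");
        }
        return Matrix4x4.Transpose(inverse);
    }
}
```

For a world matrix that scales x by 2, the resulting matrix scales the x component of a normal by 0.5 instead, which is what tilts the normal back to being perpendicular to the stretched surface.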

The Demo

The demo application showcasing the model loading and rendering code that we have just gone over is named AssimpModelDemo. As usual, we will subclass our D3DApp base class, and most of the code will be very similar to our previous examples, save the code for our Init() and DrawScene() functions. The members of the AssimpModelDemo class are:

class AssimpModelDemo : D3DApp {

private TextureManager _texMgr;

private BasicModel _treeModel;

private BasicModel _stoneModel;

private BasicModelInstance _modelInstance;

private BasicModelInstance _stoneInstance;

private readonly DirectionalLight[] _dirLights;

private readonly FpsCamera _camera;

private Point _lastMousePos;

private bool _disposed;

}

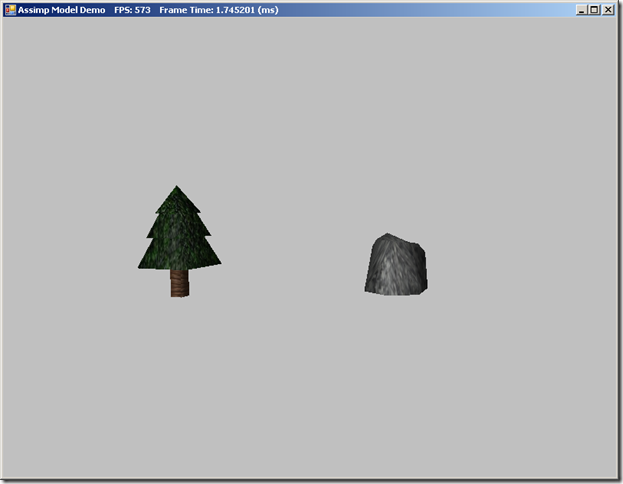

In our Init() function, we will need to create and initialize our TextureManager instance, and then load our two models and set up their instances in our world. We’re using a pair of simple, low-poly meshes, one of a spruce tree, and one of a boulder.

public override bool Init() {

if (!base.Init()) return false;

Effects.InitAll(Device);

InputLayouts.InitAll(Device);

RenderStates.InitAll(Device);

_texMgr = new TextureManager();

_texMgr.Init(Device);

_treeModel = new BasicModel(Device, _texMgr, "Models/tree.x", "Textures");

_modelInstance = new BasicModelInstance {

Model = _treeModel,

World = Matrix.RotationX(MathF.PI / 2)

};

_stoneModel = new BasicModel(Device, _texMgr, "Models/stone.x", "Textures");

_stoneInstance = new BasicModelInstance {

Model = _stoneModel,

World = Matrix.Scaling(0.1f, 0.1f, 0.1f) * Matrix.Translation(2, 0, 2)

};

return true;

}
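The order of the product in the stone instance's World matrix matters: with the row-vector convention used here, the leftmost matrix is applied first. The sketch below demonstrates this with System.Numerics, which follows the same convention; WorldOrder is a hypothetical name used only for illustration.

```csharp
using System;
using System.Numerics;

// Hypothetical demonstration class using System.Numerics instead of SlimDX.
public static class WorldOrder {
    // Scale first, then translate: the same order as the stone instance.
    public static Vector3 ScaleThenTranslate(Vector3 p) {
        var world = Matrix4x4.CreateScale(0.1f) * Matrix4x4.CreateTranslation(2, 0, 2);
        return Vector3.Transform(p, world);
    }

    // Reversed order: the translation gets scaled down along with the model.
    public static Vector3 TranslateThenScale(Vector3 p) {
        var world = Matrix4x4.CreateTranslation(2, 0, 2) * Matrix4x4.CreateScale(0.1f);
        return Vector3.Transform(p, world);
    }
}
```

The point (1, 0, 0) ends up at about (2.1, 0, 2) with the first ordering and about (0.3, 0, 0.2) with the second, so swapping the factors would leave the boulder sitting almost at the origin.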

To draw these models, we need to do our normal setup, clearing the back and depth/stencil buffers, setting up our InputAssembler, setting the global shader variables, and so forth. We’ll use our 3-light, normal-mapped, textured shader technique. Using the BasicModelInstance Draw() function, rendering the tree and stone model is very simple.

public override void DrawScene() {

base.DrawScene();

ImmediateContext.ClearRenderTargetView(RenderTargetView, Color.Silver);

ImmediateContext.ClearDepthStencilView(

DepthStencilView, DepthStencilClearFlags.Depth | DepthStencilClearFlags.Stencil, 1.0f, 0);

ImmediateContext.InputAssembler.InputLayout = InputLayouts.PosNormalTexTan;

ImmediateContext.InputAssembler.PrimitiveTopology = PrimitiveTopology.TriangleList;

_camera.UpdateViewMatrix();

var viewProj = _camera.ViewProj;

Effects.NormalMapFX.SetDirLights(_dirLights);

Effects.NormalMapFX.SetEyePosW(_camera.Position);

var activeTech = Effects.NormalMapFX.Light3TexTech;

for (int p = 0; p < activeTech.Description.PassCount; p++) {

var pass = activeTech.GetPassByIndex(p);

_modelInstance.Draw(ImmediateContext, pass, viewProj);

_stoneInstance.Draw(ImmediateContext, pass, viewProj);

}

SwapChain.Present(0, PresentFlags.None);

}

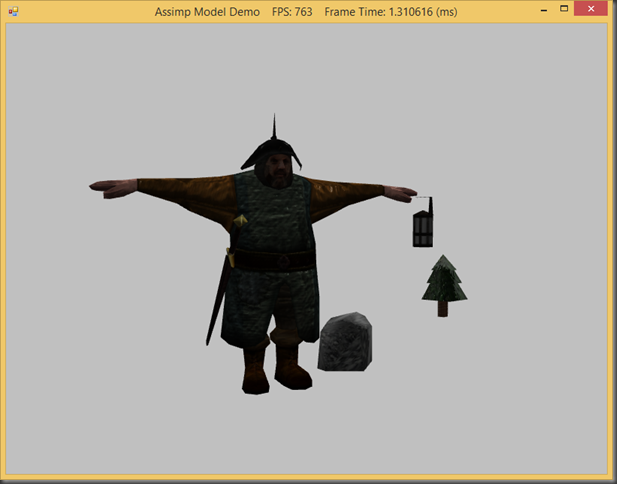

And another model, this time with multiple subsets and textures

Next Time…

Static meshes are nice to have, but to really bring our scene to life or make a game, we will need to support animations. Skeletal animation is a fairly complex topic, and I struggled for the better part of a week trying to get it right. So far as I was able to find, there aren’t many good resources on doing skeletal animation in SlimDX, and virtually none on using Assimp with C# and SlimDX, so I hope that my struggles will provide a resource for other people that are looking to go down this road.

Stay tuned…
