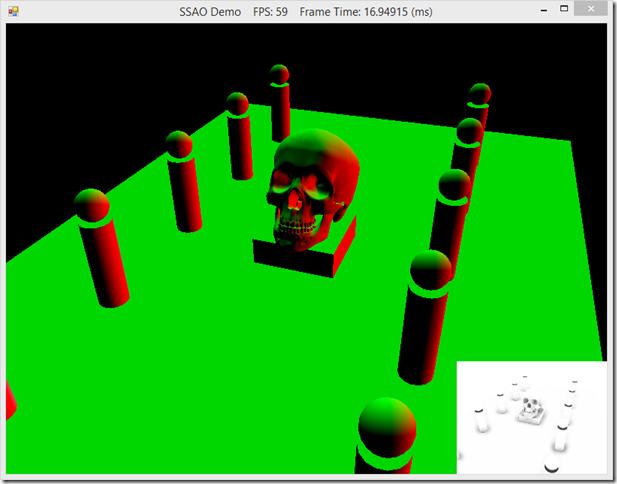

In real-time lighting applications, like games, we usually only calculate direct lighting, i.e. light that originates from a light source and hits an object directly. The Phong lighting model that we have been using thus far is an example of this; we only calculate the direct diffuse and specular lighting. We either ignore indirect light (light that has bounced off of other objects in the scene), or approximate it using a fixed ambient term. This is very fast to calculate, but not terribly physically accurate. Physically accurate lighting models can model these indirect light bounces, but are typically too computationally expensive to use in a real-time application, which needs to render at least 30 frames per second. However, using the ambient lighting term to approximate indirect light has some issues, as you can see in the screenshot below. This depicts our standard skull and columns scene, rendered using only ambient lighting. Because we are using a fixed ambient color, each object is rendered as a solid color, with no definition. Essentially, we are making the assumption that indirect light bounces uniformly onto all surfaces of our objects, which is often not physically accurate.

If we were actually modeling the way that light bounces within our scene, some portions of the scene would naturally receive more indirect light than others. Open, exposed surfaces would receive the maximum amount of indirect light, while other portions, such as the nooks and crannies of our skull, should appear darker, because the surrounding geometry would, realistically, block many of the indirect light rays from reaching those surfaces.

In a classical global illumination scheme, we would simulate indirect light by casting rays from each surface point in a hemispherical pattern, checking for geometry that would prevent light from reaching the point. Assuming that our models are static, this could be a viable method, provided we performed these calculations off-line; ray tracing is very expensive, since we need to cast a large number of rays, each requiring many intersection tests, to produce an acceptable result. With animated models, this method very quickly becomes untenable; whenever the models in the scene move, we would need to recalculate the occlusion values, which is simply too slow to do in real-time.
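To make the cost of the ray-casting approach concrete, here is a minimal sketch that estimates the occlusion at a single surface point by firing random rays over the hemisphere and counting how many hit an occluder. It is not part of the demo code: the class and method names are made up for illustration, the only occluder is a single sphere, and it uses System.Numerics rather than SlimDX so that it can run on its own.

```csharp
using System;
using System.Numerics;

public static class BruteForceAo {
    // True if a ray (origin o, unit direction d) hits the sphere (center c, radius r).
    // Assumes the ray origin lies outside the sphere.
    static bool HitsSphere(Vector3 o, Vector3 d, Vector3 c, float r) {
        var oc = c - o;
        var t = Vector3.Dot(oc, d);            // distance along the ray to the closest approach
        if (t < 0) return false;               // the sphere is behind the ray
        var distSq = Vector3.Dot(oc, oc) - t * t;
        return distSq <= r * r;
    }

    // Estimates the fraction of the hemisphere above point p (surface normal +Y)
    // that is blocked by a single sphere occluder.
    public static float EstimateOcclusion(Vector3 p, Vector3 center, float radius, int rayCount, int seed) {
        var rng = new Random(seed);
        var blocked = 0;
        for (var i = 0; i < rayCount; i++) {
            // pick a direction uniformly distributed over the +Y hemisphere
            var y = (float)rng.NextDouble();
            var phi = rng.NextDouble() * 2.0 * Math.PI;
            var s = (float)Math.Sqrt(1.0 - y * y);
            var dir = new Vector3(s * (float)Math.Cos(phi), y, s * (float)Math.Sin(phi));
            if (HitsSphere(p, dir, center, radius)) blocked++;
        }
        return (float)blocked / rayCount;
    }
}
```

Even this toy version needs thousands of rays for one point to get a stable estimate, and a real scene would have to test each ray against all of the scene geometry, for every visible point.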

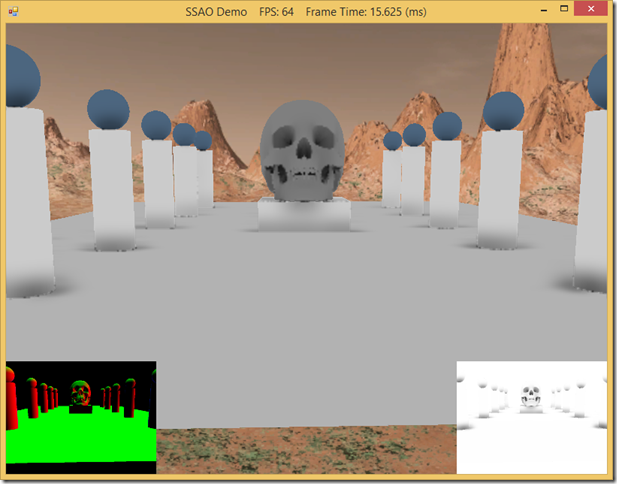

Screen-Space Ambient Occlusion (SSAO) is a fast technique for approximating ambient occlusion, developed by Crytek for the game Crysis. We will initially draw the scene to a render target, which will contain the normal and depth information for each pixel in the scene. Then, we can sample this normal/depth surface to calculate occlusion values for each pixel, which we will save to another render target. Finally, in our usual shader effect, we can sample this occlusion map to modify the ambient term in our lighting calculation. While this method is not perfectly realistic, it is very fast, and generally produces good results. As you can see in the screenshot below, using SSAO darkens the cavities of the skull and the areas around the bases of the columns and spheres, providing some sense of depth.

The code for this example is based on Chapter 22 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0. The example presented here has been stripped down considerably to demonstrate only the SSAO effects; lighting and texturing have been disabled, and the shadow mapping effects in Luna’s example have been removed. The full code for this example can be found at my GitHub repository, https://github.com/ericrrichards/dx11.git, under the SSAODemo2 project. A more faithful adaptation of Luna’s example can also be found in the 28-SsaoDemo project.

SSAO Class

Similar to our ShadowMap class, we are going to create a class to encapsulate the textures and other resources we need to use for calculating the screen-space ambient occlusion effect. Our class definition looks like this:

public class Ssao : DisposableClass {

private readonly Device _device;

private readonly DeviceContext _dc;

// full screen quad used for computing SSAO from normal/depth map

private Buffer _screenQuadVB;

private Buffer _screenQuadIB;

// texture containing random vectors for use in random sampling

private ShaderResourceView _randomVectorSRV;

// scene normal/depth texture views

private RenderTargetView _normalDepthRTV;

private ShaderResourceView _normalDepthSRV;

// occlusion maps

// need two, because we will ping-pong them to do a bilateral (edge-preserving) blur

private RenderTargetView _ambientRTV0;

private ShaderResourceView _ambientSRV0;

private RenderTargetView _ambientRTV1;

private ShaderResourceView _ambientSRV1;

// dimensions of the full screen quad

private int _renderTargetWidth;

private int _renderTargetHeight;

// far-plane corners of the view frustum, used to reconstruct position from depth

private readonly Vector4[] _frustumFarCorners = new Vector4[4];

// uniformly distributed vectors that will be randomized to sample occlusion

readonly Vector4[] _offsets = new Vector4[14];

// half-size viewport for rendering the occlusion maps

private Viewport _ambientMapViewport;

private bool _disposed;

// readonly properties to access the normal/depth and occlusion maps

public ShaderResourceView NormalDepthSRV {get { return _normalDepthSRV; }}

public ShaderResourceView AmbientSRV {get { return _ambientSRV0; }}

}

The most important members of this class are:

- _screenQuadVB & _screenQuadIB – These two buffers define a full screen quad. When we compute the SSAO effect, we will draw this quad, with the normal/depth map mapped onto it.

- _randomVectorSRV – This is a texture resource that we will create and populate with random vectors. We will then use this texture as a source of random numbers in our ssao computation shader to generate randomly sampled vectors to check for occlusion.

- _normalDepthRTV & _normalDepthSRV – These shader views will be used to access our normal/depth buffer. We will render the scene to the render target view, to create a texture containing the scene normals in the RGB pixel components, and the scene depth information in the alpha component. We will bind the shader resource view of the underlying texture as an input when we compute the occlusion map.

- _ambientRTV0, _ambientSRV0, etc – These views and the underlying textures will contain our occlusion map. We need two textures, because when we apply a bilateral (edge-preserving) blur effect to smooth out the occlusion map, we will need to first blur horizontally, then ping-pong the textures and apply a vertical blur. After computing the SSAO to this texture and smoothing with the blur effect, we will bind this occlusion map to our normal effects and sample the occlusion map to modify the ambient term of our lighting equations.

- _frustumFarCorners – To compute the occlusion map, we will need to reconstruct the view-space position of a screen pixel from the normal/depth map. To do this, we need the view-space positions of the corners of the far plane of our view frustum.

- _offsets – When computing the occlusion value in our ssao effect, we will need to sample points surrounding the screen pixel. To get consistent results, we will provide this array of uniformly distributed vectors, which we can modify using random values. Starting from a uniform distribution helps ensure that our random samples will more accurately represent occlusion; using only random vectors might result in only sampling in one direction.

- _ambientMapViewport – To save space and increase performance, we will be using a half-size occlusion map, so we need to create a viewport with half-size dimensions.
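The role of the far-plane corners is easier to see with a small worked example. The sketch below is my own illustration, not the demo's shader: it builds the four corners the same way BuildFrustumFarCorners() does, interpolates between them the way the rasterizer would across the quad, and then scales the resulting vector by the stored view-space depth to recover the position. The class and method names are hypothetical, and System.Numerics stands in for SlimDX.

```csharp
using System;
using System.Numerics;

public static class DepthReconstruction {
    // Far-plane corners in view space, in quad vertex order:
    // bottom-left, top-left, top-right, bottom-right.
    public static Vector3[] FarCorners(float fovY, float aspect, float farZ) {
        var halfHeight = farZ * (float)Math.Tan(0.5f * fovY);
        var halfWidth = aspect * halfHeight;
        return new[] {
            new Vector3(-halfWidth, -halfHeight, farZ),
            new Vector3(-halfWidth, +halfHeight, farZ),
            new Vector3(+halfWidth, +halfHeight, farZ),
            new Vector3(+halfWidth, -halfHeight, farZ)
        };
    }

    // Vector from the eye to the far plane through texture coordinate (u, v),
    // where (0, 0) is the top-left of the screen. Mimics rasterizer interpolation.
    public static Vector3 ToFarPlane(Vector3[] corners, float u, float v) {
        var x = corners[0].X + u * (corners[3].X - corners[0].X); // left to right
        var y = corners[1].Y + v * (corners[0].Y - corners[1].Y); // top to bottom
        return new Vector3(x, y, corners[0].Z);
    }

    // Scale the eye-to-far-plane vector so that its z matches the stored view-space depth.
    public static Vector3 ViewPosition(Vector3 toFarPlane, float viewDepth) {
        return (viewDepth / toFarPlane.Z) * toFarPlane;
    }
}
```

For example, with a 90 degree vertical field of view, an aspect ratio of 1 and a far plane at 100, the pixel in the top-right corner of the screen with a stored depth of 10 reconstructs to roughly (10, 10, 10).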

Creating the SSAO object

Most of the processing for our SSAO constructor is passed off to helper functions. To create the SSAO object, we need to pass in our Direct3D device, the DeviceContext, the screen dimensions, the camera’s vertical field of view, and the camera far plane distance.

public Ssao(Device device, DeviceContext dc, int width, int height, float fovY, float farZ) {

_device = device;

_dc = dc;

OnSize(width, height, fovY, farZ);

BuildFullScreenQuad();

BuildOffsetVectors();

BuildRandomVectorTexture();

}

OnSize

When the screen size or camera projection changes, we need to resize our normal/depth and occlusion map textures; otherwise, we will end up with strange artifacts, because the pixels sampled from these maps will not match up with the geometry rendered on screen. First, we need to resize the viewport for computing the occlusion map, so that it remains half the size of the full screen. Next, we need to recalculate the frustum far plane corner positions, to match the new screen size and possibly changing camera projection. Lastly, we need to recreate our textures, to match the new screen size and occlusion map viewport. Note that we will need to call OnSize both in the constructor, and in our application’s OnResize method, as well as any time that we modify the camera’s projection matrix, such as when we zoom in our FpsCamera.

public void OnSize(int width, int height, float fovY, float farZ) {

_renderTargetWidth = width;

_renderTargetHeight = height;

_ambientMapViewport = new Viewport(0, 0, width / 2.0f, height / 2.0f, 0, 1);

BuildFrustumFarCorners(fovY, farZ);

BuildTextureViews();

}

private void BuildFrustumFarCorners(float fovy, float farz) {

var aspect = (float)_renderTargetWidth / _renderTargetHeight;

var halfHeight = farz * MathF.Tan(0.5f * fovy);

var halfWidth = aspect * halfHeight;

_frustumFarCorners[0] = new Vector4(-halfWidth, -halfHeight, farz, 0);

_frustumFarCorners[1] = new Vector4(-halfWidth, +halfHeight, farz, 0);

_frustumFarCorners[2] = new Vector4(+halfWidth, +halfHeight, farz, 0);

_frustumFarCorners[3] = new Vector4(+halfWidth, -halfHeight, farz, 0);

}

private void BuildTextureViews() {

ReleaseTextureViews();

var texDesc = new Texture2DDescription {

Width = _renderTargetWidth,

Height = _renderTargetHeight,

MipLevels = 1,

ArraySize = 1,

Format = Format.R32G32B32A32_Float,

SampleDescription = new SampleDescription(1, 0),

Usage = ResourceUsage.Default,

BindFlags = BindFlags.ShaderResource | BindFlags.RenderTarget,

CpuAccessFlags = CpuAccessFlags.None,

OptionFlags = ResourceOptionFlags.None

};

var normalDepthTex = new Texture2D(_device, texDesc) { DebugName = "normalDepthTex" };

_normalDepthSRV = new ShaderResourceView(_device, normalDepthTex);

_normalDepthRTV = new RenderTargetView(_device, normalDepthTex);

Util.ReleaseCom(ref normalDepthTex);

texDesc.Width = _renderTargetWidth / 2;

texDesc.Height = _renderTargetHeight / 2;

texDesc.Format = Format.R16_Float;

var ambientTex0 = new Texture2D(_device, texDesc) { DebugName = "ambientTex0" };

_ambientSRV0 = new ShaderResourceView(_device, ambientTex0);

_ambientRTV0 = new RenderTargetView(_device, ambientTex0);

var ambientTex1 = new Texture2D(_device, texDesc) { DebugName = "ambientTex1" };

_ambientSRV1 = new ShaderResourceView(_device, ambientTex1);

_ambientRTV1 = new RenderTargetView(_device, ambientTex1);

Util.ReleaseCom(ref ambientTex0);

Util.ReleaseCom(ref ambientTex1);

}

To create our normal/depth texture, we will use the full screen height/width. We will use the R32G32B32A32_Float texture format, which stores 32 bits per channel. Mr. Luna’s code uses a 16-bit-per-channel format instead; however, I found that if the camera was zoomed too far away from the scene, the 16-bit format resulted in some really weird banding effects that seemed to be related to lack of precision in the depth component of the normal/depth buffer. Your mileage may vary, but I found this unacceptable when I started to use the SSAO effect on terrain, so I switched to the 32-bit-per-channel format. This will double the memory required for the normal/depth map.

Below you can see some of the artifacts from using a 16-bit-per-channel normal/depth map. We are quite zoomed out here, so we run into some issues with the precision in the depth buffer; it may be a little hard to see, but the upper-left corner of our floor grid has some incorrect occlusion occurring. This may not be very noticeable in all scenes, but, for instance, when I recently tried to expand one of the terrain demos to use ambient occlusion, the artifacts were very noticeable.

Our occlusion maps are created at half the screen dimensions. You could use full-screen occlusion maps, if you are noticing a lot of artifacts because of the occlusion map texels covering too many pixels in your scene, but again, this will consume more memory, and will make computing the ssao map and blurring it quite a bit slower, since you will need to process four times as many pixels. Lack of resolution on the occlusion map texture is not a huge issue, since the SSAO effect is quite subtle, especially once you’ve combined texturing and direct lighting.
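To put rough numbers on these trade-offs, the following sketch works out the texture sizes for a hypothetical 1280x720 window; the resolution and the helper name are just assumptions for the sake of the arithmetic. A four-channel 32-bit float texel takes 16 bytes, a four-channel 16-bit float texel takes 8 bytes, and an R16_Float texel takes 2 bytes.

```csharp
using System;

public static class SsaoMemory {
    // Size in bytes of an uncompressed 2D texture with a single mip level.
    public static long TextureBytes(int width, int height, int bytesPerTexel) {
        return (long)width * height * bytesPerTexel;
    }

    public static void Main() {
        const int w = 1280, h = 720; // hypothetical window size
        Console.WriteLine(TextureBytes(w, h, 16));        // 32-bit-per-channel normal/depth map: 14745600
        Console.WriteLine(TextureBytes(w, h, 8));         // 16-bit-per-channel normal/depth map: 7372800
        Console.WriteLine(TextureBytes(w / 2, h / 2, 2)); // one half-size occlusion map: 460800
        Console.WriteLine(TextureBytes(w, h, 2));         // one full-size occlusion map: 1843200
    }
}
```

So at this resolution the normal/depth map dominates at about 14 MB, while the two half-size occlusion maps together come to less than 1 MB.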

Building the Fullscreen Quad

Building the full screen quad vertex and index buffers is pretty straightforward. We don’t need any fancy effects, so we will use our Basic32 vertex structure.

One thing to note here is that, rather than a real normal, we use the X portion of the vertex normal component to store an index into our far-plane corners array. This will be important later, when we implement the shader to calculate the SSAO effect, as we will need to use the correct far-plane corner to reconstruct the view-space position of a screen space pixel.

private void BuildFullScreenQuad() {

var v = new Basic32[4];

// normal.X contains frustum corner array index

v[0] = new Basic32(new Vector3(-1, -1, 0), new Vector3(0, 0, 0), new Vector2(0, 1));

v[1] = new Basic32(new Vector3(-1, +1, 0), new Vector3(1, 0, 0), new Vector2(0, 0));

v[2] = new Basic32(new Vector3(+1, +1, 0), new Vector3(2, 0, 0), new Vector2(1, 0));

v[3] = new Basic32(new Vector3(+1, -1, 0), new Vector3(3, 0, 0), new Vector2(1, 1));

var vbd = new BufferDescription(Basic32.Stride * 4, ResourceUsage.Immutable, BindFlags.VertexBuffer,

CpuAccessFlags.None, ResourceOptionFlags.None, 0);

_screenQuadVB = new Buffer(_device, new DataStream(v, false, false), vbd);

var indices = new short[] { 0, 1, 2, 0, 2, 3 };

var ibd = new BufferDescription(sizeof(short) * 6, ResourceUsage.Immutable, BindFlags.IndexBuffer,

CpuAccessFlags.None, ResourceOptionFlags.None, 0);

_screenQuadIB = new Buffer(_device, new DataStream(indices, false, false), ibd);

}

Building the Occlusion Sampling Vectors

To sample the normal/depth map and test for occlusion, we need to sample the hemisphere above the rendered pixel position. To generate these sampling vectors, we start with 14 directions evenly spread over a sphere (the eight corners and six face centers of a cube, normalized), and then, in our shader, flip the downward-facing vectors (with respect to the pixel normal) so that all of the vectors point into the hemisphere. Starting with an even distribution on a sphere ensures that when we randomize the offsets, we’ll get a resulting random distribution that is somewhat uniform; since we are not taking a huge number of samples, we want to try to make sure that our samples are not too clumped up. We’ll give these offset vectors a random length, so that we sample points at varying distances from our screen pixel.

private void BuildOffsetVectors() {

// cube corners

_offsets[0] = new Vector4(+1, +1, +1, 0);

_offsets[1] = new Vector4(-1, -1, -1, 0);

_offsets[2] = new Vector4(-1, +1, +1, 0);

_offsets[3] = new Vector4(+1, -1, -1, 0);

_offsets[4] = new Vector4(+1, +1, -1, 0);

_offsets[5] = new Vector4(-1, -1, +1, 0);

_offsets[6] = new Vector4(-1, +1, -1, 0);

_offsets[7] = new Vector4(+1, -1, +1, 0);

// cube face centers

_offsets[8] = new Vector4(-1, 0, 0, 0);

_offsets[9] = new Vector4(+1, 0, 0, 0);

_offsets[10] = new Vector4(0, -1, 0, 0);

_offsets[11] = new Vector4(0, +1, 0, 0);

_offsets[12] = new Vector4(0, 0, -1, 0);

_offsets[13] = new Vector4(0, 0, +1, 0);

for (var i = 0; i < 14; i++) {

var s = MathF.Rand(0.25f, 1.0f);

var v = s * Vector4.Normalize(_offsets[i]);

_offsets[i] = v;

}

}
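The shader-side half of this idea can be sketched on the CPU. The snippet below is an illustration under my own assumptions rather than the actual shader: it randomizes a fixed offset by reflecting it about a random unit vector, then flips the result if it points below the surface, so that it always lands in the hemisphere around the normal. The names are hypothetical, and System.Numerics is used instead of SlimDX so the snippet stands alone.

```csharp
using System;
using System.Numerics;

public static class HemisphereSampling {
    // Randomizes a fixed offset vector and forces it into the hemisphere around the normal.
    // offset:        one of the precomputed, evenly distributed offset vectors
    // randomUnitVec: a unit-length random vector (in the shader, read from the random texture)
    // normal:        the unit surface normal at the pixel
    public static Vector3 SampleOffset(Vector3 offset, Vector3 randomUnitVec, Vector3 normal) {
        // reflecting about a random vector changes the direction but preserves the length
        var reflected = Vector3.Reflect(offset, randomUnitVec);
        // if the vector points below the surface, flip it to the other side
        return Vector3.Dot(reflected, normal) < 0 ? -reflected : reflected;
    }
}
```

Because both the reflection and the flip preserve length, the random lengths assigned in BuildOffsetVectors() survive unchanged, so the samples still land at varying distances from the pixel.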

Creating the Random Texture

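Our last step to create the SSAO object is to create a texture filled with random vectors, which we can use in our shader, since HLSL does not have a random number generator. Ideally, we would create this as an immutable texture, but I had a lot of trouble trying to initialize the texture with data using SlimDX. Hence, we create the texture as dynamic, then map the texture, write the random color information, and unmap it. Note that the code below writes the texels contiguously, which assumes that the mapped row pitch is exactly 256 × 4 bytes; Direct3D is allowed to pad rows, so more robust code would advance by the row pitch reported for the mapped data after each row.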

private void BuildRandomVectorTexture() {

var texDesc = new Texture2DDescription {

Width = 256,

Height = 256,

MipLevels = 1,

ArraySize = 1,

Format = Format.R8G8B8A8_UNorm,

SampleDescription = new SampleDescription(1, 0),

Usage = ResourceUsage.Dynamic,

BindFlags = BindFlags.ShaderResource,

CpuAccessFlags = CpuAccessFlags.Write,

OptionFlags = ResourceOptionFlags.None

};

var color = new List<Color4>();

for (var i = 0; i < 256; i++) {

for (var j = 0; j < 256; j++) {

var v = new Vector3(MathF.Rand(0, 1), MathF.Rand(0, 1), MathF.Rand(0, 1));

color.Add(new Color4(0, v.X, v.Y, v.Z));

}

}

var tex = new Texture2D(_device, texDesc);

var box = _dc.MapSubresource(tex, 0, MapMode.WriteDiscard, MapFlags.None);

foreach (var color4 in color) {

box.Data.Write((byte)(color4.Red*255));

box.Data.Write((byte)(color4.Green * 255));

box.Data.Write((byte)(color4.Blue * 255));

box.Data.Write((byte)(0));

}

_dc.UnmapSubresource(tex, 0);

tex.DebugName = "random ssao texture";

_randomVectorSRV = new ShaderResourceView(_device, tex);

Util.ReleaseCom(ref tex);

}

Cleaning up the SSAO object

We will once again be subclassing our DisposableClass base class, which means that we need to provide an overridden Dispose method. Here, we’ll dispose of our quad vertex and index buffers, the random texture, and our normal/depth and occlusion textures.

protected override void Dispose(bool disposing) {

if (!_disposed) {

if (disposing) {

Util.ReleaseCom(ref _screenQuadVB);

Util.ReleaseCom(ref _screenQuadIB);

Util.ReleaseCom(ref _randomVectorSRV);

ReleaseTextureViews();

}

_disposed = true;

}

base.Dispose(disposing);

}

private void ReleaseTextureViews() {

Util.ReleaseCom(ref _normalDepthRTV);

Util.ReleaseCom(ref _normalDepthSRV);

Util.ReleaseCom(ref _ambientRTV0);

Util.ReleaseCom(ref _ambientSRV0);

Util.ReleaseCom(ref _ambientRTV1);

Util.ReleaseCom(ref _ambientSRV1);

}

Setting the Normal/Depth Render Target

Before we can render our scene to the normal/depth map, we need to bind the render target to the DeviceContext. We will provide a utility method in our SSAO class to do this, SetNormalDepthRenderTarget(). We will need to pass in our main depth/stencil buffer. After we bind the normal/depth render target, we will want to clear the texture to a default value; we use a color that has a very large alpha component, which will default us to a very far-off depth value. Note that the SlimDX Color4 constructor used here takes its arguments in alpha, red, green, blue order, so the clear value below stores a depth of 1e5 in the alpha channel and a normal of (0, 0, -1) in RGB.

public void SetNormalDepthRenderTarget(DepthStencilView dsv) {

_dc.OutputMerger.SetTargets(dsv, _normalDepthRTV);

var clearColor = new Color4(1e5f, 0.0f, 0.0f, -1.0f);

_dc.ClearRenderTargetView(_normalDepthRTV, clearColor);

}

Once the normal/depth render target is bound and cleared to a default value, we will need to render our scene normal and depth information to the texture. We’ll talk about how to do that shortly, when we cover the implementation of the demo application and the effect shaders.

Computing SSAO

We will compute the occlusion map by rendering a full screen quad, using a special shader. We’ll cover the details of the SSAO shader in a bit, but for now we will focus on the helper method, ComputeSsao(), which we will implement to set up and draw this quad. Our first step is to bind our first occlusion map as a render target. We use a null depth/stencil, since we don’t care about depth for this rendering pass. Next, we clear the render target to black, and set our half-size viewport.

A normal projection matrix transforms points into the range x = [-1, 1], y = [-1, 1]. We are going to use the projected positions as texture coordinates to sample our normal/depth map, so we need to append a scale and translate transform to the projection matrix, to get the points into the range [0, 1], [0, 1]. The y scale is negative because texture coordinates increase downward, while NDC y increases upward. This is our matrix T below.

Once we have computed the NDC-to-Texture transform, we just need to set our shader effect variables, bind our quad buffers, and draw the quad using the SSAO effect.
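As a quick sanity check on T, the sketch below builds the same matrix with System.Numerics (which, like SlimDX, uses row vectors with the translation in the fourth row) and pushes a few NDC points through it. The class and method names are hypothetical; only the four matrix entries come from ComputeSsao().

```csharp
using System;
using System.Numerics;

public static class NdcToTexture {
    // Same entries as the matrix T built in ComputeSsao().
    public static Matrix4x4 BuildT() {
        var t = Matrix4x4.Identity;
        t.M11 = 0.5f;   // scale x from [-1, 1] to [-0.5, 0.5]
        t.M22 = -0.5f;  // scale and flip y
        t.M41 = 0.5f;   // shift x into [0, 1]
        t.M42 = 0.5f;   // shift y into [0, 1]
        return t;
    }

    // Maps a point in normalized device coordinates to texture coordinates.
    public static Vector2 ToTexCoord(float ndcX, float ndcY) {
        var p = Vector4.Transform(new Vector4(ndcX, ndcY, 0, 1), BuildT());
        return new Vector2(p.X, p.Y);
    }
}
```

The top-left of the screen, NDC (-1, +1), maps to texture coordinate (0, 0), the center maps to (0.5, 0.5), and the bottom-right, NDC (+1, -1), maps to (1, 1). The same matrix can also be written as a scaling by (0.5, -0.5, 1) followed by a translation by (0.5, 0.5, 0).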

public void ComputeSsao(CameraBase camera) {

_dc.OutputMerger.SetTargets((DepthStencilView)null,_ambientRTV0);

_dc.ClearRenderTargetView(_ambientRTV0, Color.Black);

_dc.Rasterizer.SetViewports(_ambientMapViewport);

var T = Matrix.Identity;

T.M11 = 0.5f;

T.M22 = -0.5f;

T.M41 = 0.5f;

T.M42 = 0.5f;

var P = camera.Proj;

var pt = P * T;

Effects.SsaoFX.SetViewToTexSpace(pt);

Effects.SsaoFX.SetOffsetVectors(_offsets);

Effects.SsaoFX.SetFrustumCorners(_frustumFarCorners);

Effects.SsaoFX.SetNormalDepthMap(_normalDepthSRV);

Effects.SsaoFX.SetRandomVecMap(_randomVectorSRV);

var stride = Basic32.Stride;

const int Offset = 0;

_dc.InputAssembler.InputLayout = InputLayouts.Basic32;

_dc.InputAssembler.PrimitiveTopology = PrimitiveTopology.TriangleList;

_dc.InputAssembler.SetVertexBuffers(0, new VertexBufferBinding(_screenQuadVB, stride, Offset));

_dc.InputAssembler.SetIndexBuffer(_screenQuadIB, Format.R16_UInt, 0);

var tech = Effects.SsaoFX.SsaoTech;

for (int p = 0; p < tech.Description.PassCount; p++) {

tech.GetPassByIndex(p).Apply(_dc);

_dc.DrawIndexed(6, 0, 0);

}

}

Blurring the Occlusion Map

Because we are using a fairly small number of samples to compute the occlusion value for a pixel, the resulting occlusion map is quite noisy, as you can see below. One way we could improve this is to take more samples; however, to take enough samples to get a good result will often entail so much extra computation that it becomes impossible to compute the SSAO effect in real time.

Another strategy is to take the occlusion map generated with our small number of samples and apply blurring to it. If we use an edge-preserving blur, this will both reduce the noise present in the initial occlusion map, and fade out the edges of our occluded areas, so that we have a smoother transition between occluded and non-occluded areas. Below, you can see the result of blurring the original occlusion map four times.

To implement this blurring effect, we will provide a BlurAmbientMap() function in our SSAO class. We will implement a bilateral (edge-preserving) blur by first blurring in the horizontal direction, then blurring in the vertical direction. To do this, we will bind the original occlusion map as an input to the horizontal blur, and render to the second occlusion map. Then, we bind the second occlusion map as input to the vertical blur, and render back to the first occlusion map, so the finished result always ends up in the first map, which is the one exposed by our AmbientSRV property. We can perform as many blurs as we want, but 3 or 4 seems to give fairly good results; fewer blurs tend to leave more noticeable noise, while more blurs tend to “wash out” the effect.

public void BlurAmbientMap(int blurCount) {

for (int i = 0; i < blurCount; i++) {

BlurAmbientMap(_ambientSRV0, _ambientRTV1, true);

BlurAmbientMap(_ambientSRV1, _ambientRTV0, false);

}

}

The private BlurAmbientMap overload performs the actual blur, by selecting the appropriate blur technique, setting the shader variables, and drawing the input texture over our quad geometry.

private void BlurAmbientMap(ShaderResourceView inputSRV, RenderTargetView outputRTV, bool horzBlur) {

_dc.OutputMerger.SetTargets(outputRTV);

_dc.ClearRenderTargetView(outputRTV, Color.Black);

_dc.Rasterizer.SetViewports(_ambientMapViewport);

Effects.SsaoBlurFX.SetTexelWidth(1.0f / _ambientMapViewport.Width);

Effects.SsaoBlurFX.SetTexelHeight(1.0f / _ambientMapViewport.Height);

Effects.SsaoBlurFX.SetNormalDepthMap(_normalDepthSRV);

Effects.SsaoBlurFX.SetInputImage(inputSRV);

var tech = horzBlur ? Effects.SsaoBlurFX.HorzBlurTech : Effects.SsaoBlurFX.VertBlurTech;

var stride = Basic32.Stride;

const int Offset = 0;

_dc.InputAssembler.InputLayout = InputLayouts.Basic32;

_dc.InputAssembler.PrimitiveTopology = PrimitiveTopology.TriangleList;

_dc.InputAssembler.SetVertexBuffers(0, new VertexBufferBinding(_screenQuadVB, stride, Offset));

_dc.InputAssembler.SetIndexBuffer(_screenQuadIB, Format.R16_UInt, 0);

for (var p = 0; p < tech.Description.PassCount; p++) {

var pass = tech.GetPassByIndex(p);

pass.Apply(_dc);

_dc.DrawIndexed(6, 0, 0);

Effects.SsaoBlurFX.SetInputImage(null);

pass.Apply(_dc);

}

}
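To show what "edge-preserving" means in practice, here is a CPU sketch of a single 1D blur pass over one row of the occlusion map. It is an illustration rather than the actual blur shader: neighboring samples only contribute if their normal and depth are close to those of the center pixel, so occlusion does not bleed across object boundaries. The class name, the weights, and the two thresholds (0.8 for the normal dot product, 0.2 for the depth difference) are assumptions chosen for the example.

```csharp
using System;
using System.Numerics;

public static class EdgePreservingBlur {
    // One 1D blur pass. weights must have an odd length; the middle entry is the center weight.
    public static float[] BlurPass(float[] occlusion, Vector3[] normals, float[] depths, float[] weights) {
        var radius = weights.Length / 2;
        var result = new float[occlusion.Length];
        for (var i = 0; i < occlusion.Length; i++) {
            // the center sample always contributes
            var sum = weights[radius] * occlusion[i];
            var totalWeight = weights[radius];
            for (var k = -radius; k <= radius; k++) {
                var j = i + k;
                if (k == 0 || j < 0 || j >= occlusion.Length) continue;
                // reject neighbors that appear to belong to a different surface
                var sameSurface = Vector3.Dot(normals[j], normals[i]) >= 0.8f
                                  && Math.Abs(depths[j] - depths[i]) <= 0.2f;
                if (!sameSurface) continue;
                sum += weights[k + radius] * occlusion[j];
                totalWeight += weights[k + radius];
            }
            // renormalize, since some neighbors may have been discarded
            result[i] = sum / totalWeight;
        }
        return result;
    }
}
```

Running this pass over the rows and then over the columns gives the horizontal and vertical passes described above. Dividing by the accumulated weight keeps the overall brightness stable even when several neighbors are rejected.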

The Demo Application

Our demo application this time is once again based on our normal skull & obelisks scene. I’ve stripped out the texturing and shadowing that was present in Luna’s example, and effectively disabled our lights, by setting their diffuse and specular colors to black, to exaggerate the ambient occlusion effect. Otherwise, the bulk of the code for this example is identical to our other demos that have used this scene, so I will only examine the new elements related to the SSAO effect.

Creating the SSAO object

We will create our SSAO helper object as part of our Init() method. Like most of our specialized graphics classes, we need to make sure that we initialize the SSAO object after we have called our base D3DApp Init() method, and initialized our Effects, InputLayouts, and RenderStates.

public override bool Init() {

if (!base.Init()) return false;

Effects.InitAll(Device);

InputLayouts.InitAll(Device);

RenderStates.InitAll(Device);

_sky = new Sky(Device, "Textures/desertcube1024.dds", 5000.0f);

_ssao = new Ssao(Device, ImmediateContext, ClientWidth, ClientHeight, _camera.FovY, _camera.FarZ);

BuildShapeGeometryBuffers();

BuildSkullGeometryBuffers();

BuildScreenQuadGeometryBuffers();

return true;

}

We will need to add some code to our demo application’s OnResize() method to handle resizing the SSAO viewports and textures, using the Ssao.OnSize() method. We need to make sure that we check whether the SSAO object has been created before trying to resize it, since the first time our demo app’s OnResize() is called will be as part of the D3DApp base class Init() method, when we are initializing DirectX.

public override void OnResize() {

base.OnResize();

_camera.SetLens(0.25f * MathF.PI, AspectRatio, 1.0f, 1000.0f);

if (_ssao != null) {

_ssao.OnSize(ClientWidth, ClientHeight, _camera.FovY, _camera.FarZ);

}

}

Drawing the Scene

Our first step in drawing our scene with SSAO is to render the scene normals and depth to our SSAO normal/depth texture. We start by clearing the depth/stencil buffer and setting the viewport. Next, we set up the normal/depth render target with our Ssao.SetNormalDepthRenderTarget() function. Then, we need to draw the scene using a special normal/depth shader. This code is extracted out into a helper function, DrawSceneToSsaoNormalDepthMap().

public override void DrawScene() {

ImmediateContext.Rasterizer.State = null;

ImmediateContext.ClearDepthStencilView(

DepthStencilView,

DepthStencilClearFlags.Depth | DepthStencilClearFlags.Stencil,

1.0f, 0

);

ImmediateContext.Rasterizer.SetViewports(Viewport);

_ssao.SetNormalDepthRenderTarget(DepthStencilView);

DrawSceneToSsaoNormalDepthMap();

In our DrawSceneToSsaoNormalDepthMap() function, we need to draw all of our scene objects, using the normal/depth shader effect. Since I expanded the BasicModel class, all of our scene objects are represented by BasicModel and BasicModelInstance objects (I’ve also factored out the skull loading code into a factory method of BasicModel), which makes our rendering code considerably more concise.

private void DrawSceneToSsaoNormalDepthMap() {

var view = _camera.View;

var proj = _camera.Proj;

var tech = Effects.SsaoNormalDepthFX.NormalDepthTech;

ImmediateContext.InputAssembler.PrimitiveTopology = PrimitiveTopology.TriangleList;

ImmediateContext.InputAssembler.InputLayout = InputLayouts.PosNormalTexTan;

for (int p = 0; p < tech.Description.PassCount; p++) {

var pass = tech.GetPassByIndex(p);

_grid.DrawSsaoDepth(ImmediateContext, pass, view, proj);

_box.DrawSsaoDepth(ImmediateContext, pass, view, proj);

foreach (var cylinder in _cylinders) {

cylinder.DrawSsaoDepth(ImmediateContext, pass, view, proj);

}

foreach (var sphere in _spheres) {

sphere.DrawSsaoDepth(ImmediateContext, pass, view, proj);

}

}

ImmediateContext.Rasterizer.State = null;

ImmediateContext.InputAssembler.InputLayout = InputLayouts.Basic32;

for (int p = 0; p < tech.Description.PassCount; p++) {

_skull.DrawSsaoDepth(ImmediateContext, tech.GetPassByIndex(p), view, proj);

}

}

The BasicModelInstance.DrawSsaoDepth() method is fairly simple, and very similar to our other draw helper methods. As we will see, the shader for rendering normals and depth records these values in view-space, so rather than using a world and world inverse transpose, we will need to pass world-view and world-inverse-transpose-view matrices.

public void DrawSsaoDepth(DeviceContext dc, EffectPass pass, Matrix view, Matrix proj) {

var world = World;

var wit = MathF.InverseTranspose(world);

var wv = world * view;

var witv = wit * view;

var wvp = world * view*proj;

Effects.SsaoNormalDepthFX.SetWorldView(wv);

Effects.SsaoNormalDepthFX.SetWorldInvTransposeView(witv);

Effects.SsaoNormalDepthFX.SetWorldViewProj(wvp);

Effects.SsaoNormalDepthFX.SetTexTransform(TexTransform);

pass.Apply(dc);

for (int i = 0; i < Model.SubsetCount; i++) {

Model.ModelMesh.Draw(dc, i);

}

}

Mea Culpa

If you’ve been following along with me, you may recall when I initially defined our MathF.InverseTranspose() function. The code originally looked like this:

// MathF.cs

public static Matrix InverseTranspose(Matrix m) {

return Matrix.Transpose(Matrix.Invert(m));

}

If you use this implementation for this example, you will not get the normals drawn correctly, and will be left scratching your head and furiously debugging. You can see an example of the incorrect results in the normal/depth map below.

It is entirely possible that I have had this wrong all along, and the resulting artifacts were simply not noticeable before, but for rendering the normal map here, it becomes very noticeable, and borks up the whole SSAO effect. What the code should do, in addition to inverting and transposing the input matrix, is zero out the translation portion of the matrix. Since we only use this type of matrix for transforming normals, it doesn’t make any sense to have a translation component; I either mistranslated Luna’s original code, or picked this function up from an early example where we only used an identity world matrix. In any case, the correct code is this:

public static Matrix InverseTranspose(Matrix m) {

var a = m;

a.M41 = a.M42 = a.M43 = 0;

a.M44 = 1;

return Matrix.Transpose(Matrix.Invert(a));

}
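My best guess as to why this bug stayed hidden until now: when a shader only uses the upper-left 3x3 of the inverse-transpose, the stray translation (which the transpose moves into the fourth column) is simply ignored. Here, however, we multiply the full 4x4 matrix by the view matrix first, and that product mixes the stray fourth column with the view matrix’s translation row, which pollutes the 3x3 part. The following sketch demonstrates the effect with System.Numerics, which uses the same row-vector convention as SlimDX; the class and method names are just for illustration.

```csharp
using System;
using System.Numerics;

public static class NormalMatrixDemo {
    // The original, buggy version: keeps the translation when inverting.
    public static Matrix4x4 NaiveInverseTranspose(Matrix4x4 m) {
        Matrix4x4.Invert(m, out var inv);
        return Matrix4x4.Transpose(inv);
    }

    // The corrected version: zero out the translation row before inverting.
    public static Matrix4x4 FixedInverseTranspose(Matrix4x4 m) {
        m.M41 = m.M42 = m.M43 = 0; // m is a copy, so the caller's matrix is untouched
        m.M44 = 1;
        Matrix4x4.Invert(m, out var inv);
        return Matrix4x4.Transpose(inv);
    }

    // Transforms a normal by world-inverse-transpose * view, using only the 3x3 part,
    // the way a shader that casts the matrix to a 3x3 would.
    public static Vector3 ViewSpaceNormal(Vector3 normal, Matrix4x4 worldInvTranspose, Matrix4x4 view) {
        return Vector3.TransformNormal(normal, worldInvTranspose * view);
    }
}
```

With a world matrix that translates by (10, 0, 0) and a view matrix that translates by (0, 0, 5), neither of which should rotate or scale a normal, the corrected version leaves the normal (1, 0, 0) unchanged, while the naive version produces (1, 0, -50).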

The Normal/Depth Texture

After drawing the scene to the normal/depth texture, the normal/depth texture will look something like this:

Green represents +Y normals, red represents +X normals, and blue (which we should almost never see), represents +Z normals. Depth is stored in the alpha channel of the texture, which we cannot see here, because this texture was rendered without alpha-blending.

Once we have rendered out the normal/depth information to texture, we need to compute the occlusion map and perform blurring.

// SSAODemo.DrawScene() cont...

_ssao.ComputeSsao(_camera);

_ssao.BlurAmbientMap(4);

After computing the occlusion, we need to reset our DeviceContext with our default render target, depth/stencil and viewport before we can continue to render the scene to the backbuffer. We also need to clear the render target to our default color, and also clear the depth/stencil buffer. Mr. Luna’s code did not clear the depth/stencil, and instead used the depth already written during the normal/depth pass, along with a DepthStencilState that was set to only draw pixels that matched the existing depth value in the depth buffer. I could not get this to work reliably; some objects would occasionally fail the depth test and so not be drawn. Possibly this is some kind of depth-buffer precision issue, but rather than fight with it, I chose to just reclear the depth buffer and draw the scene with the default depth/stencil settings.

ImmediateContext.OutputMerger.SetTargets(DepthStencilView, RenderTargetView);

ImmediateContext.Rasterizer.SetViewports(Viewport);

ImmediateContext.ClearRenderTargetView(RenderTargetView, Color.Silver);

ImmediateContext.ClearDepthStencilView(DepthStencilView, DepthStencilClearFlags.Depth | DepthStencilClearFlags.Stencil, 1.0f, 0);

Drawing our scene objects to the back buffer then proceeds largely as normal. We do need to bind the SSAO occlusion map texture to our shader effects, and create a matrix that will transform projected pixel positions into texture coordinates, as we did when computing the SSAO map. To show the difference between SSAO and normal rendering, SSAO can be disabled by holding down “S” on the keyboard. While SSAO is disabled, we bind an all-white texture instead of the occlusion map. We need to do this because sampling an unbound texture in HLSL returns black, which results in total occlusion, the opposite of what we are trying to achieve.

if (!Util.IsKeyDown(Keys.S)) {

Effects.BasicFX.SetSsaoMap(_ssao.AmbientSRV);

Effects.NormalMapFX.SetSsaoMap(_ssao.AmbientSRV);

} else {

Effects.BasicFX.SetSsaoMap(_texMgr.CreateTexture("Textures/white.dds"));

Effects.NormalMapFX.SetSsaoMap(_texMgr.CreateTexture("Textures/white.dds"));

}

var toTexSpace = Matrix.Scaling(0.5f, -0.5f, 1.0f) * Matrix.Translation(0.5f, 0.5f, 0);
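To make the projected-to-texture transform concrete, here is a small sketch of what the matrix does to NDC coordinates. It assumes System.Numerics rather than SlimDX, and the TexSpaceSketch class and NdcToUv helper are hypothetical names used only for illustration.

```csharp
using System.Numerics;

public static class TexSpaceSketch
{
    // Same construction as toTexSpace: scale by (0.5, -0.5), then shift by (0.5, 0.5).
    public static Matrix4x4 ToTexSpace()
    {
        return Matrix4x4.CreateScale(0.5f, -0.5f, 1.0f)
             * Matrix4x4.CreateTranslation(0.5f, 0.5f, 0f);
    }

    // Maps an NDC xy position in [-1, 1] to a texture coordinate in [0, 1].
    // The y axis is flipped because NDC +Y points up while texture v points down.
    public static Vector2 NdcToUv(Vector2 ndc)
    {
        Vector3 v = Vector3.Transform(new Vector3(ndc, 0f), ToTexSpace());
        return new Vector2(v.X, v.Y);
    }
}
```

The top-left corner of the screen, NDC (-1, 1), maps to texture coordinate (0, 0); the bottom-right corner (1, -1) maps to (1, 1); and the screen center (0, 0) maps to (0.5, 0.5).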

Drawing our objects is just slightly different when using SSAO: we need to set the projected-to-texture coordinate transform, and then, in the BasicModelInstance.Draw() function, set a world-view-projection-texture matrix built from this transform and the object’s WVP transform.

for (var p = 0; p < activeTech.Description.PassCount; p++) {

// draw grid

var pass = activeTech.GetPassByIndex(p);

_grid.ToTexSpace = toTexSpace;

_grid.Draw(ImmediateContext, pass, viewProj);

}

// BasicModelInstance.Draw()...

Effects.NormalMapFX.SetWorldViewProjTex(wvp*ToTexSpace);

Shader Code

The SSAO effect is the most shader-heavy effect that we have yet implemented. We will need to create new shaders, as well as modify our existing backbuffer shaders to use the occlusion map.

SsaoNormalDepth.fx

The first shader we will need to implement is the shader to write the scene view-space normals and depth to the normal/depth texture. Our vertex shader will transform the input vertices and normals from object space, into world space, and then into view space. We will also transform the input texture coordinates; although we will not actually be doing any texturing, we need the texture coordinates to sample the object’s diffuse map in order to alpha-clip transparent pixels for objects like tree billboards and fences. We also need to transform the input position into homogeneous clip space; although the pixel shader does not use it for the render target output, the GPU needs it to rasterize the geometry and write to the depth/stencil buffer correctly.

Our pixel shader writes the scene normal into the RGB components of the render target pixel, and the view-space depth into the alpha channel.

We also need to create a C# wrapper class for this effect, but I will omit that, since we have already written a number of those, and there is nothing special about the wrapper for this effect, or indeed, any of the shaders we use for SSAO.

VertexOut VS(VertexIn vin)

{

VertexOut vout;

// Transform to view space.

vout.PosV = mul(float4(vin.PosL, 1.0f), gWorldView).xyz;

vout.NormalV = mul(vin.NormalL, (float3x3)gWorldInvTransposeView);

// Transform to homogeneous clip space.

vout.PosH = mul(float4(vin.PosL, 1.0f), gWorldViewProj);

// Output vertex attributes for interpolation across triangle.

vout.Tex = mul(float4(vin.Tex, 0.0f, 1.0f), gTexTransform).xy;

return vout;

}

float4 PS(VertexOut pin, uniform bool gAlphaClip) : SV_Target

{

// Interpolating normal can unnormalize it, so normalize it.

pin.NormalV = normalize(pin.NormalV);

if(gAlphaClip)

{

float4 texColor = gDiffuseMap.Sample( samLinear, pin.Tex );

clip(texColor.a - 0.1f);

}

float4 c = float4(pin.NormalV, pin.PosV.z);

return c;

}

Ssao.fx

Next, we need to write the shader which computes the occlusion factors and creates the occlusion map.

Our vertex shader is relatively straightforward. The input vertices that make up our full-screen quad are defined in NDC coordinates, so we do not need to project them; we merely need to augment the 3D positions to 4D. Likewise, we can simply pass through the texture coordinates.

The ToFarPlane member of the vertex output structure contains the frustum far-plane corner position associated with this vertex of the quad. As I mentioned earlier, the input vertex normal is not really a normal, but just an index into the array of frustum corners that we pass to the shader in a cbuffer.

struct VertexOut

{

float4 PosH : SV_POSITION;

float3 ToFarPlane : TEXCOORD0;

float2 Tex : TEXCOORD1;

};

VertexOut VS(VertexIn vin)

{

VertexOut vout;

// Already in NDC space.

vout.PosH = float4(vin.PosL, 1.0f);

// We store the index to the frustum corner in the normal x-coord slot.

vout.ToFarPlane = gFrustumCorners[vin.ToFarPlaneIndex.x].xyz;

// Pass onto pixel shader.

vout.Tex = vin.Tex;

return vout;

}

The pixel shader for the SSAO effect is fairly complicated, since we need to reconstruct the pixel’s view-space position from the depth stored in the normal/depth map and the interpolated far-plane vector, then use our offset vectors to sample the normal/depth map and determine occlusion values for each offset vector. Finally, we average the offset vector occlusion values to generate the final occlusion value for the screen pixel, and output this value to the occlusion map. Fortunately, the shader provided by Mr. Luna is quite well commented.

float4 PS(VertexOut pin, uniform int gSampleCount) : SV_Target

{

// p -- the point we are computing the ambient occlusion for.

// n -- normal vector at p.

// q -- a random offset from p.

// r -- a potential occluder that might occlude p.

// Get viewspace normal and z-coord of this pixel. The tex-coords for

// the fullscreen quad we drew are already in uv-space.

float4 normalDepth = gNormalDepthMap.SampleLevel(samNormalDepth, pin.Tex, 0.0f);

float3 n = normalDepth.xyz;

float pz = normalDepth.w;

//

// Reconstruct full view space position (x,y,z).

// Find t such that p = t*pin.ToFarPlane.

// p.z = t*pin.ToFarPlane.z

// t = p.z / pin.ToFarPlane.z

//

float3 p = (pz/pin.ToFarPlane.z)*pin.ToFarPlane;

// Extract random vector and map from [0,1] --> [-1, +1].

float3 randVec = 2.0f*gRandomVecMap.SampleLevel(samRandomVec, 4.0f*pin.Tex, 0.0f).rgb - 1.0f;

float occlusionSum = 0.0f;

// Sample neighboring points about p in the hemisphere oriented by n.

[unroll]

for(int i = 0; i < gSampleCount; ++i)

{

// Our offset vectors are fixed and uniformly distributed (so that our offset vectors

// do not clump in the same direction). If we reflect them about a random vector

// then we get a random uniform distribution of offset vectors.

float3 offset = reflect(gOffsetVectors[i].xyz, randVec);

// Flip offset vector if it is behind the plane defined by (p, n).

float flip = sign( dot(offset, n) );

// Sample a point near p within the occlusion radius.

float3 q = p + flip * gOcclusionRadius * offset;

// Project q and generate projective tex-coords.

float4 projQ = mul(float4(q, 1.0f), gViewToTexSpace);

projQ /= projQ.w;

// Find the nearest depth value along the ray from the eye to q (this is not

// the depth of q, as q is just an arbitrary point near p and might

// occupy empty space). To find the nearest depth we look it up in the depthmap.

float rz = gNormalDepthMap.SampleLevel(samNormalDepth, projQ.xy, 0.0f).a;

// Reconstruct full view space position r = (rx,ry,rz). We know r

// lies on the ray of q, so there exists a t such that r = t*q.

// r.z = t*q.z ==> t = r.z / q.z

float3 r = (rz / q.z) * q;

//

// Test whether r occludes p.

// * The product dot(n, normalize(r - p)) measures how much in front

// of the plane(p,n) the occluder point r is. The more in front it is, the

// more occlusion weight we give it. This also prevents self shadowing where

// a point r on an angled plane (p,n) could give a false occlusion since they

// have different depth values with respect to the eye.

// * The weight of the occlusion is scaled based on how far the occluder is from

// the point we are computing the occlusion of. If the occluder r is far away

// from p, then it does not occlude it.

//

float distZ = p.z - r.z;

float dp = max(dot(n, normalize(r - p)), 0.0f);

float occlusion = dp * OcclusionFunction(distZ);

occlusionSum += occlusion;

}

occlusionSum /= gSampleCount;

float access = 1.0f - occlusionSum;

// Sharpen the contrast of the SSAO map to make the SSAO effect more dramatic.

return saturate(pow(access, 4.0f));

}
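The two geometric steps at the heart of this pixel shader, reconstructing p from the stored depth and picking a sample point q in the hemisphere around n, can be checked on the CPU. The following is a rough C# sketch assuming System.Numerics; the class and method names are made up for illustration, and the input values in the usage note are arbitrary.

```csharp
using System;
using System.Numerics;

public static class SsaoSampleSketch
{
    // p = t * toFarPlane, with t chosen so that p.Z equals the stored view-space depth.
    public static Vector3 ReconstructViewPosition(float viewDepth, Vector3 toFarPlane)
    {
        return (viewDepth / toFarPlane.Z) * toFarPlane;
    }

    // Sample point q near p; the offset is mirrored if it points behind the plane (p, n).
    public static Vector3 HemisphereSample(Vector3 p, Vector3 n, Vector3 offset, float radius)
    {
        float flip = Math.Sign(Vector3.Dot(offset, n));
        return p + flip * radius * offset;
    }
}
```

For example, a stored depth of 25 and a far-plane vector of (2, 1, 100) reconstruct to the view-space point (0.5, 0.25, 25). With p = (0, 0, 10), n = (0, 0, -1), an offset of (0, 0, 1) and a radius of 0.5, the offset points away from the normal and is flipped, giving q = (0, 0, 9.5).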

// Determines how much the sample point q occludes the point p as a function

// of distZ.

float OcclusionFunction(float distZ)

{

//

// If depth(q) is "behind" depth(p), then q cannot occlude p. Moreover, if

// depth(q) and depth(p) are sufficiently close, then we also assume q cannot

// occlude p because q needs to be in front of p by Epsilon to occlude p.

//

// We use the following function to determine the occlusion.

//

//

//       1.0     -------------\
//               |           |  \
//               |           |    \
//               |           |      \
//               |           |        \
//               |           |          \
//               |           |            \
//  ------|------|-----------|-------------|---------|--> zv
//        0     Eps          z0            z1

//

float occlusion = 0.0f;

if(distZ > gSurfaceEpsilon)

{

float fadeLength = gOcclusionFadeEnd - gOcclusionFadeStart;

// Linearly decrease occlusion from 1 to 0 as distZ goes

// from gOcclusionFadeStart to gOcclusionFadeEnd.

occlusion = saturate( (gOcclusionFadeEnd-distZ)/fadeLength );

}

return occlusion;

}
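The shape of this falloff is easy to check on the CPU. Below is a minimal C# port of the function, with the shader constants turned into parameters; the class name and the values used in the usage note are illustrative assumptions, not the settings used by the demo.

```csharp
using System;

public static class OcclusionFalloffSketch
{
    // Returns 0 when the occluder is behind or too close to p, 1 on the plateau
    // up to fadeStart, and then falls linearly to 0 at fadeEnd.
    public static float Occlusion(float distZ, float surfaceEpsilon, float fadeStart, float fadeEnd)
    {
        if (distZ <= surfaceEpsilon)
            return 0f;

        float fadeLength = fadeEnd - fadeStart;
        return Math.Clamp((fadeEnd - distZ) / fadeLength, 0f, 1f);
    }
}
```

With surfaceEpsilon = 0.05, fadeStart = 1 and fadeEnd = 3, a distZ of 0.01 gives 0 (too close to count), 0.5 gives 1 (on the plateau), 2 gives 0.5 (halfway through the fade), and 4 gives 0 (too far away to occlude).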

SsaoBlur.fx

Our last new shader is the blur effect that smooths out the computed occlusion map. This effect performs an edge-preserving (bilateral) blur in two separable passes, blurring first in the horizontal direction, then in the vertical direction. Each pass uses a weighted 11-tap kernel (a blur radius of 5), so the two passes together cover an 11x11 neighborhood, with the central pixel of the kernel receiving the most weight, and the weights of the outer pixels decreasing linearly. Edge-preserving means that we also sample the normal/depth map for each pixel in the kernel, and we discard any samples that differ too much in depth or normal from the center pixel of the kernel. This prevents us from blurring across the discontinuities at the edges of different objects in our scene, as well as discarding improper self-occlusions within an object. Because we can potentially use fewer than the full number of samples, our last step is to normalize the blurred pixel by the sum of the weights of the kernel pixels that actually contributed to the final pixel value.

VertexOut VS(VertexIn vin)

{

VertexOut vout;

// Already in NDC space.

vout.PosH = float4(vin.PosL, 1.0f);

// Pass onto pixel shader.

vout.Tex = vin.Tex;

return vout;

}

float4 PS(VertexOut pin, uniform bool gHorizontalBlur) : SV_Target

{

float2 texOffset;

if(gHorizontalBlur)

{

texOffset = float2(gTexelWidth, 0.0f);

}

else

{

texOffset = float2(0.0f, gTexelHeight);

}

// The center value always contributes to the sum.

float4 color = gWeights[5]*gInputImage.SampleLevel(samInputImage, pin.Tex, 0.0);

float totalWeight = gWeights[5];

float4 centerNormalDepth = gNormalDepthMap.SampleLevel(samNormalDepth, pin.Tex, 0.0f);

for(float i = -gBlurRadius; i <=gBlurRadius; ++i)

{

// We already added in the center weight.

if( i == 0 )

continue;

float2 tex = pin.Tex + i*texOffset;

float4 neighborNormalDepth = gNormalDepthMap.SampleLevel(

samNormalDepth, tex, 0.0f);

//

// If the center value and neighbor values differ too much (either in

// normal or depth), then we assume we are sampling across a discontinuity.

// We discard such samples from the blur.

//

if( dot(neighborNormalDepth.xyz, centerNormalDepth.xyz) >= 0.8f &&

abs(neighborNormalDepth.a - centerNormalDepth.a) <= 0.2f )

{

float weight = gWeights[i+gBlurRadius];

// Add neighbor pixel to blur.

color += weight*gInputImage.SampleLevel(

samInputImage, tex, 0.0);

totalWeight += weight;

}

}

// Compensate for discarded samples by making total weights sum to 1.

return color / totalWeight;

}
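The effect of discarding samples and renormalizing can be seen in a one-dimensional CPU sketch of a single blur pass. This is an illustrative C# version assuming System.Numerics, with plain arrays standing in for the textures; the class name, the border clamping, and the 3-tap weights in the usage note are assumptions, while the 0.8 and 0.2 thresholds are taken from the shader above.

```csharp
using System;
using System.Numerics;

public static class EdgePreservingBlurSketch
{
    // One 1D blur pass evaluated at index `center`.
    // weights.Length must be odd; the middle entry is the center weight.
    public static float BlurAt(float[] ao, float[] depth, Vector3[] normals, float[] weights, int center)
    {
        int radius = weights.Length / 2;

        // The center value always contributes.
        float sum = weights[radius] * ao[center];
        float totalWeight = weights[radius];

        for (int i = -radius; i <= radius; i++)
        {
            if (i == 0) continue;

            // Clamp at the borders (an assumption standing in for the sampler's address mode).
            int idx = Math.Clamp(center + i, 0, ao.Length - 1);

            // Reject neighbors that lie across a normal or depth discontinuity.
            bool similarNormal = Vector3.Dot(normals[idx], normals[center]) >= 0.8f;
            bool similarDepth = Math.Abs(depth[idx] - depth[center]) <= 0.2f;
            if (similarNormal && similarDepth)
            {
                sum += weights[i + radius] * ao[idx];
                totalWeight += weights[i + radius];
            }
        }

        // Renormalize so the weights that were actually used sum to 1.
        return sum / totalWeight;
    }
}
```

Take occlusion values {0, 0, 1, 1, 1} with depths {10, 10, 1, 1, 1}, identical normals, and weights {0.25, 0.5, 0.25}. Blurring at index 2 rejects the left neighbor because of the depth jump, so the result stays at 1 instead of being pulled down to 0.75 by the unrelated surface.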

Modifying our Other Shaders to Support SSAO

We will be following the same pattern to add support for SSAO to all of our display shaders, so I will only show the changes to our Basic.fx shader.

Firstly, we need to add a new element to our VertexOut structure, to contain the projective texture coordinates that map a pixel to a texel in the occlusion map.

struct VertexOut

{

float4 PosH : SV_POSITION;

float3 PosW : POSITION;

float3 NormalW : NORMAL;

float2 Tex : TEXCOORD0;

float4 ShadowPosH : TEXCOORD1;

// new element for projective texture coord in occlusion map

float4 SsaoPosH : TEXCOORD2;

};

Then, in our vertex shader (or domain shader, for our DisplacementMap.fx shader), we need to compute this new projective texture coordinate, using the object’s World-View-Projection-Texture transform.

VertexOut VS(VertexIn vin)

{

VertexOut vout;

// normal vertex shader operations...

// Generate projective tex-coords to project SSAO map onto scene.

vout.SsaoPosH = mul(float4(vin.PosL, 1.0f), gWorldViewProjTex);

return vout;

}

Finally, in the pixel shader, we need to use this projective texture coordinate to sample the occlusion map and determine the occlusion factor. We then use this occlusion factor to modify the ambient term when we compute our lighting equation.

float4 PS(VertexOut pin,

uniform int gLightCount,

uniform bool gUseTexure,

uniform bool gAlphaClip,

uniform bool gFogEnabled,

uniform bool gReflectionEnabled) : SV_Target

{

// normal pixel shader operations...

// Finish texture projection and sample SSAO map.

pin.SsaoPosH /= pin.SsaoPosH.w;

float ambientAccess = gSsaoMap.SampleLevel(samLinear, pin.SsaoPosH.xy, 0.0f).r;

// Sum the light contribution from each light source.

[unroll]

for(int i = 0; i < gLightCount; ++i)

{

float4 A, D, S;

ComputeDirectionalLight(gMaterial, gDirLights[i], pin.NormalW, toEye, A, D, S);

ambient += ambientAccess*A;

diffuse += shadow[i]*D;

spec += shadow[i]*S;

}

// continue with texturing, reflection, fog, etc

}

The Result

Here, you can clearly see the darkening effects at the base of the columns and box, as well as on the undersides of the spheres. Likewise, the crannies of the skull model are darkened. In a sense, the SSAO effect approximates small-scale self-shadowing. To the bottom left, you can see the normal/depth map superimposed on the scene, with the occlusion map in the bottom right.

If you’re still with me, congratulations! This is, after the skinned animation example, probably the most involved and difficult example that we have covered. Until I realized that I was doing the inverse transpose matrix calculation incorrectly, I was stumped for several days trying to get this all to work. If you have any questions, leave a comment and I’ll try my best to explain what is going on.

Next Time…

This example finishes up the last chapter that we are going to cover from Mr. Luna’s book. I have one more example from the bonus material in the book’s example code that I am going to go over next time; we’ll implement a class and effect to produce some very nice looking displacement mapped water waves, using many of the same techniques that we used for our Displacement Mapping effect.

After that, I plan on revisiting our terrain class. There are a number of cool and very useful techniques from Carl Granberg’s Programming an RTS Game with Direct3D that I would like to implement. Off the top of my head, some things that I plan on working through are: A* pathfinding, mouse picking on the terrain mesh, terrain minimaps, and fog-of-war. Some other things that I would really like to get to are integrating a physics engine into the budding engine that I have here, exploring some AI techniques, particularly formation movement, and adding in a networking layer.

I think my ultimate goal is to create a sort of Total War-style strategy game. Obviously that is going to be a ton of work, and far down the road, so my short term goals are just to keep adding tools to my toolkit, exploring interesting things, and blogging about them as I go. So I invite you to follow along and learn with me.

Why you do this?

ImmediateContext.ClearDepthStencilView(DepthStencilView, DepthStencilClearFlags.Depth | DepthStencilClearFlags.Stencil, 1.0f, 0);

//ImmediateContext.OutputMerger.DepthStencilState = RenderStates.EqualsDSS;

//ImmediateContext.OutputMerger.DepthStencilReference = 0;

re-rendering depth buffer?