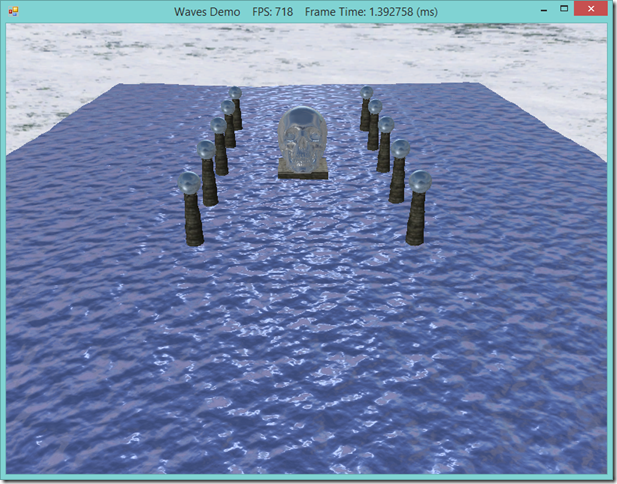

Quite a while back, I presented an example that rendered water waves by computing a wave equation and updating a polygonal mesh each frame. That method produced fairly nice results, but it was very CPU-intensive and relied on updating a vertex buffer every frame, so performance was relatively poor.

We can use displacement mapping to approximate the wave calculation and modify the geometry all on the GPU, which can be considerably faster. At a very high level, what we will do is render a polygon grid mesh, using two height/normal maps that we will scroll in different directions and at different rates. Then, for each vertex that we create using the tessellation stages, we will sample the two heightmaps, and add the sampled offsets to the vertex’s y-coordinate. Because we are scrolling the heightmaps at different rates, small peaks and valleys will appear and disappear over time, resulting in an effect that looks like waves. Using different control parameters, we can control this wave effect, and generate either a still, calm surface, like a mountain pond at first light, or big, choppy waves, like the ocean in the midst of a tempest.
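To make the idea concrete, here is a minimal CPU-side sketch in Python of the per-vertex math described above. It is not the shader code from the demo, which does this work on the GPU. The names `WaveLayer`, `make_heightmap`, `sample_wrapped`, and `displaced_height` are hypothetical, and the procedural sine heightmaps stand in for the real height/normal textures.

```python
import math
from dataclasses import dataclass


def make_heightmap(size, freq_x, freq_y):
    """Build a small tileable heightmap with values in [-1, 1].

    This is a procedural stand-in for a real heightmap texture.
    """
    return [
        [
            math.sin(2 * math.pi * freq_x * x / size)
            * math.cos(2 * math.pi * freq_y * y / size)
            for x in range(size)
        ]
        for y in range(size)
    ]


def sample_wrapped(heightmap, u, v):
    """Bilinearly sample the heightmap at (u, v), wrapping outside [0, 1)."""
    size = len(heightmap)
    x = (u % 1.0) * size
    y = (v % 1.0) * size
    fx, fy = x - int(x), y - int(y)
    x0, y0 = int(x) % size, int(y) % size
    x1, y1 = (x0 + 1) % size, (y0 + 1) % size
    top = heightmap[y0][x0] * (1 - fx) + heightmap[y0][x1] * fx
    bottom = heightmap[y1][x0] * (1 - fx) + heightmap[y1][x1] * fx
    return top * (1 - fy) + bottom * fy


@dataclass
class WaveLayer:
    """One scrolling heightmap (hypothetical helper type)."""
    heightmap: list
    scale: float        # how strongly this layer displaces the surface
    velocity_u: float   # scroll rate along u, in texture units per second
    velocity_v: float   # scroll rate along v, in texture units per second


def displaced_height(base_y, u, v, time, layers):
    """Add each layer's sampled offset to the vertex's y-coordinate."""
    y = base_y
    for layer in layers:
        scrolled_u = u + layer.velocity_u * time
        scrolled_v = v + layer.velocity_v * time
        y += layer.scale * sample_wrapped(layer.heightmap, scrolled_u, scrolled_v)
    return y


if __name__ == "__main__":
    # Two layers scrolling in different directions at different rates.
    layers = [
        WaveLayer(make_heightmap(16, 1, 2), 0.4, 0.05, 0.02),
        WaveLayer(make_heightmap(16, 3, 1), 0.2, -0.03, 0.06),
    ]
    for t in (0.0, 1.0, 2.0):
        print(t, displaced_height(0.0, 0.3, 0.7, t, layers))
```

Setting both `scale` values near zero gives the calm pond; raising them, or increasing the scroll velocities, gives choppier water. Those control parameters are assumptions of this sketch rather than the demo's actual shader constants.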

This example is based on the final exercise of Chapter 18 of Frank Luna’s Introduction to 3D Game Programming with DirectX 11. The original code that inspired this example is not located with the other examples for Chapter 18, but rather in the SelectedCodeSolutions directory. You can download my source code in full from https://github.com/ericrrichards/dx11.git, under the 29-WavesDemo project. Note that you will need a DirectX 11-compatible video card to run this example, as we will be using the tessellation shader stages, which were introduced in DirectX 11.