A common task for strategy and other games with an outdoor setting is rendering the terrain for the level. Probably the most convenient way to model a terrain is to create a triangular grid, and then perturb the y-coordinates of the vertices to match the desired elevations. This elevation data can be determined by a mathematical function, as we have done in our previous examples, or by sampling an array or texture known as a heightmap. Using a heightmap to describe the terrain elevations gives us more fine-grained control over the details of our terrain, and also lets us define the terrain easily, either by using a procedural method to create random heightmaps, or by creating an image in a paint program.

Because a terrain can be very large, we will want to optimize its rendering as much as possible. One easy way to save rendering cycles is to draw only the portions of the terrain that can be seen by the player, using frustum culling techniques similar to those we have already covered. Another way is to render the mesh with a variable level of detail, using the Hull and Domain shaders to tessellate the terrain mesh with more polygons near the camera and fewer in the distance, much as we did for our Displacement mapping effect. Combining the two techniques allows us to render a very large terrain, with a very high level of detail, at a high frame rate, although it does limit us to DirectX 11-compliant graphics cards.

We will also use a technique called texture splatting to render our terrain with multiple textures in a single rendering call. This technique involves using a separate texture, called a blend map, in addition to the diffuse textures that are applied to the mesh, in order to define which texture is applied to which portion of the mesh.
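The blend map drives a per-pixel mix of the diffuse layers. As a rough, language-neutral illustration (written in Python and not taken from the TerrainDemo source), here is one common splatting scheme. It assumes five diffuse layers and an RGBA blend map whose four channels weight layers 1 through 4 over a base layer 0. The sequential-lerp order and the names `splat` and `lerp` are assumptions made for this sketch.

```python
def lerp(a, b, t):
    """Componentwise linear interpolation between two RGB tuples."""
    return tuple(x + (y - x) * t for x, y in zip(a, b))


def splat(layer_colors, blend_rgba):
    """Blend five layer colors using one RGBA blend-map texel.

    layer_colors: five RGB tuples sampled from the diffuse layers (layer 0 is the base).
    blend_rgba:   (r, g, b, a) weights in [0, 1] for layers 1, 2, 3 and 4.
    """
    color = layer_colors[0]
    for layer, weight in zip(layer_colors[1:], blend_rgba):
        # Each layer is painted over the accumulated result by its weight.
        color = lerp(color, layer, weight)
    return color


# Hypothetical layer colors standing in for diffuse texture samples.
grass, dirt, rock, snow, sand = (0.2, 0.6, 0.2), (0.5, 0.35, 0.2), (0.5, 0.5, 0.5), (1.0, 1.0, 1.0), (0.9, 0.8, 0.5)
layers = [grass, dirt, rock, snow, sand]
# A blend texel of (0, 1, 0, 0) selects the rock layer (layer 2) outright.
rocky = splat(layers, (0.0, 1.0, 0.0, 0.0))
```

Because each layer is lerped over the running result, a later channel with a weight of 1 completely hides the layers beneath it.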

The code for this example was adapted from Chapter 19 of Frank Luna’s Introduction to 3D Game Programming with Direct3D 11.0, with some additional inspiration from Chapter 4 of Carl Granberg’s Programming an RTS Game with Direct3D. The full source for this example can be downloaded from my GitHub repository, at https://github.com/ericrrichards/dx11.git, under the TerrainDemo project.

Heightmaps

A heightmap is a 2D matrix that describes the height (y-coordinate) of each vertex in our terrain mesh. We can either generate this matrix procedurally, or load it from an image file. One common way to store a heightmap, which we will use here, is as a grayscale raw image, where each byte of the image represents one entry in the heightmap. Using one byte per entry gives us 256 distinct height values; for greater resolution we could instead use a 16-bit format. Working with raw images makes loading the heightmap very simple for our code, and saves us a great deal of space; however, working with raw image data in an image editing program can be somewhat difficult. If you intend to use artist-created heightmaps, it may be more convenient to support loading a more common image format, such as JPEG or PNG, and use a single color channel for the heightmap data, or to pre-process the artist-generated images into raw files as part of your art pipeline. We will follow the convention that black pixels in the heightmap image represent the lowest height values, while white represents the highest; grey values are linearly interpolated between these min and max heights. The heightmap that we will use in this example is shown below:

Mr. Luna’s example loads the heightmap image data directly into an array in the Terrain class; however, I am going to encapsulate this heightmap data in its own class. This makes our Terrain class simpler, by offloading all of the heightmap processing code into the HeightMap class, and also makes it easier to use some of the procedural heightmap creation techniques from Mr. Granberg’s book in our next example. Our HeightMap class looks like this:

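using System;
using System.Collections.Generic;
using System.IO;
using System.Linq;
using System.Runtime.InteropServices;
using SlimDX;
using SlimDX.Direct3D11;
using SlimDX.DXGI;
using Device = SlimDX.Direct3D11.Device;   // disambiguate from SlimDX.DXGI.Device

public class HeightMap {
    // Heightmap samples, stored row-major: (row, col) lives at row * HeightMapWidth + col
    private List<float> _heightMap;

    public int HeightMapWidth { get; set; }
    public int HeightMapHeight { get; set; }
    public float MaxHeight { get; set; }

    public HeightMap(int width, int height, float maxHeight) {
        HeightMapWidth = width;
        HeightMapHeight = height;
        MaxHeight = maxHeight;
        _heightMap = new List<float>(new float[HeightMapWidth * HeightMapHeight]);
    }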

Here we have the heightmap data, contained in our _heightMap member, as well as the dimensions of the heightmap, stored in HeightMapWidth and HeightMapHeight. MaxHeight is a factor by which we will multiply the normalized heightmap data in order to generate the final heights. To provide easy access to the heightmap data contained in the HeightMap class, we will implement an indexer, which allows us to easily grab the height value at each (row, column) coordinate.

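// Out-of-range reads return 0; out-of-range writes are ignored.
public float this[int row, int col] {
    get {
        if (InBounds(row, col)) {
            // Row-major layout: each row holds HeightMapWidth samples.
            return _heightMap[row * HeightMapWidth + col];
        }
        return 0.0f;
    }
    private set {
        if (InBounds(row, col)) {
            _heightMap[row * HeightMapWidth + col] = value;
        }
    }
}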

The InBounds function is a simple check to make sure that we are not trying to access an element out of the range of the heightmap dimensions:

private bool InBounds(int row, int col) {
    return row >= 0 && row < HeightMapHeight && col >= 0 && col < HeightMapWidth;
}
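The indexer's row stride is what keeps a non-square heightmap consistent. Here is a small Python mirror of the indexer and the InBounds check that makes this easy to verify. The class name `HeightGrid` is hypothetical, and the sketch is not part of the project. In a row-major layout the stride must be the width. A stride equal to the height would map different (row, col) pairs to the same slot whenever the two dimensions differ.

```python
class HeightGrid:
    """Row-major height grid: sample (row, col) lives at row * width + col."""

    def __init__(self, width, height):
        self.width = width
        self.height = height
        self.data = [0.0] * (width * height)

    def in_bounds(self, row, col):
        return 0 <= row < self.height and 0 <= col < self.width

    def get(self, row, col):
        # Out-of-range reads return 0.0, like the C# indexer.
        if self.in_bounds(row, col):
            return self.data[row * self.width + col]
        return 0.0

    def set(self, row, col, value):
        # Out-of-range writes are silently ignored.
        if self.in_bounds(row, col):
            self.data[row * self.width + col] = value
```

For example, in a grid 4 samples wide and 2 tall, sample (1, 0) is stored at index 4. A stride of 2 would place it at index 2, on top of sample (0, 2).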

To load the heightmap image data and convert it from the raw bytes to the float heightmap data we want, we will create the LoadHeightmap() function, which takes as a parameter the path to the heightmap image file to load. We can leverage the .NET File.ReadAllBytes() method to easily read the raw image data as a byte array, which is exactly what we want when using an 8-bit raw image file. Remember that the heightmap image format we are using stores values in the [0,255] range, so to convert that data to the range we desire for our terrain, we need to normalize the raw data, then multiply each value by the MaxHeight factor.

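public void LoadHeightmap(string heightMapFilename) {
    // 8-bit RAW: one byte per sample, HeightMapWidth * HeightMapHeight bytes expected.
    var input = File.ReadAllBytes(heightMapFilename);
    // Normalize [0,255] to [0,1], then scale to [0, MaxHeight].
    _heightMap = input.Select(i => i / 255.0f * MaxHeight).ToList();
}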
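The normalization in LoadHeightmap is a simple linear mapping. The following Python sketch performs the same conversion and extends it to the 16-bit option mentioned earlier. `raw_to_heights` is a hypothetical helper. The little-endian byte order for 16-bit files is an assumption, since it depends on how the file was exported.

```python
import struct


def raw_to_heights(raw, max_height, bits=8):
    """Convert RAW heightmap bytes to heights in [0, max_height].

    bits=8:  one unsigned byte per sample.
    bits=16: one unsigned 16-bit sample per entry, assumed little-endian.
    """
    if bits == 8:
        samples, max_value = list(raw), 255
    elif bits == 16:
        count = len(raw) // 2
        samples, max_value = list(struct.unpack(f"<{count}H", raw[:count * 2])), 65535
    else:
        raise ValueError("bits must be 8 or 16")
    # Normalize to [0, 1], then scale by the maximum height.
    return [s / max_value * max_height for s in samples]
```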

As a post-processing step, we will often want to smooth the loaded heightmap, to reduce any abrupt elevation transitions due to the limited resolution of our heightmap image format, or to clean up a procedurally-generated heightmap. We smooth the heightmap by taking the average of each value and the eight surrounding values, being careful to ensure that we stay within the bounds of the heightmap:

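public void Smooth() {
    var dest = new List<float>(HeightMapWidth * HeightMapHeight);
    for (var i = 0; i < HeightMapHeight; i++) {
        for (var j = 0; j < HeightMapWidth; j++) {
            dest.Add(Average(i, j));
        }
    }
    // Swap in the filtered data only after every sample has been averaged,
    // so each average reads the original (unsmoothed) neighbors.
    _heightMap = dest;
}

// Mean of the sample at (row, col) and its in-bounds neighbors (up to 9 samples).
private float Average(int row, int col) {
    var avg = 0.0f;
    var num = 0.0f;
    for (var m = row - 1; m <= row + 1; m++) {
        for (var n = col - 1; n <= col + 1; n++) {
            if (!InBounds(m, n)) continue;
            avg += _heightMap[m * HeightMapWidth + n];
            num++;
        }
    }
    return avg / num;
}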
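For reference, here is the same 3x3 box filter as a standalone Python sketch over a flat, row-major list. The `smooth` function is illustrative only. It reads from the original data and writes to a separate output list. Averaging in place would let already-smoothed values leak into their neighbors' averages.

```python
def smooth(data, width, height):
    """3x3 box filter over a row-major grid; edge samples average only in-bounds neighbors."""
    out = []
    for row in range(height):
        for col in range(width):
            total, count = 0.0, 0
            for m in range(row - 1, row + 2):
                for n in range(col - 1, col + 2):
                    if 0 <= m < height and 0 <= n < width:
                        total += data[m * width + n]
                        count += 1
            out.append(total / count)
    return out
```

A corner sample averages 4 values, an edge sample 6, and an interior sample 9.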

The last functionality we will require of our HeightMap class is that it provide a ShaderResourceView representing its heightmap values. Because we are going to use the Hull and Domain shaders to dynamically tessellate the terrain mesh, we will need to sample this heightmap texture in our shaders to determine the y-coordinates of the generated vertices. The first step in this process is to create a DirectX texture from our _heightMap data. Note that we convert the 32-bit float values used by the HeightMap class into 16-bit half-precision floats when creating the texture, in order to save VRAM. Also note that we specify that the texture has only a single mipmap level; for best results, we always want to sample the full-resolution heightmap texture in our shader, and not storing mipmaps also saves us some memory.

public ShaderResourceView BuildHeightmapSRV(Device device) {
    var texDesc = new Texture2DDescription {
        ArraySize = 1,
        BindFlags = BindFlags.ShaderResource,
        CpuAccessFlags = CpuAccessFlags.None,
        Format = Format.R16_Float,            // one 16-bit half-float channel
        SampleDescription = new SampleDescription(1, 0),
        Height = HeightMapHeight,
        Width = HeightMapWidth,
        MipLevels = 1,                        // full resolution only, no mip chain
        OptionFlags = ResourceOptionFlags.None,
        Usage = ResourceUsage.Default
    };

    // Convert the 32-bit floats to 16-bit halves to save VRAM.
    var hmap = Half.ConvertToHalf(_heightMap.ToArray());

    var hmapTex = new Texture2D(
        device,
        texDesc,
        new DataRectangle(
            HeightMapWidth * Marshal.SizeOf(typeof(Half)),   // row pitch in bytes
            new DataStream(hmap.ToArray(), false, false)
        )
    );

    var srvDesc = new ShaderResourceViewDescription {
        Format = texDesc.Format,
        Dimension = ShaderResourceViewDimension.Texture2D,
        MostDetailedMip = 0,
        MipLevels = -1                        // all mips from MostDetailedMip down
    };
    var srv = new ShaderResourceView(device, hmapTex, srvDesc);

    // The view keeps its own reference to the texture, so we can release ours.
    Util.ReleaseCom(ref hmapTex);
    return srv;
}
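To see what the R16_Float conversion costs in precision, the following Python sketch packs heights as IEEE 754 half-precision values using the standard `struct` module's `e` format. It also reports the row pitch (2 bytes per sample times the width), which corresponds to the pitch passed to DataRectangle above. The helper name is hypothetical.

```python
import struct


def pack_half_rows(heights, width):
    """Pack float heights as 16-bit IEEE half floats, as in an R16_FLOAT texture.

    Returns (data, row_pitch), where row_pitch is the byte stride between rows.
    """
    data = struct.pack(f"<{len(heights)}e", *heights)
    row_pitch = width * 2  # 2 bytes per half-precision sample
    return data, row_pitch


data, pitch = pack_half_rows([0.0, 1.0, 1000.3, 2.5], 2)
```

Half floats have a 10-bit mantissa, so a height near 1000 is stored in steps of 0.5, and 1000.3 comes back as 1000.5. The largest finite half value is 65504, so it is worth keeping MaxHeight well within that range.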

Terrain Class

Since a terrain can be very large, rendering all of it, at full detail, every frame can be very expensive. Consequently, we will want to limit the number of triangles we render as much as possible when we draw our terrain. The simplest method is to use camera frustum culling to skip rendering the portions of the mesh that are not visible. Typically, this requires that the terrain mesh be carved up into rectangular patches, with each patch possessing a bounding box that we can test for intersection against the camera frustum.

Even after frustum culling, rendering the visible portions of the heightmap at their full vertex resolution requires drawing a very large number of triangles. Ideally, we would like to render our terrain at a vertex resolution that captures every dip and rise in the landscape. However, let’s say that we are rendering a terrain that is one square kilometer, and we want to space our vertices such that there are ten per meter. That is roughly a 10,000 x 10,000 grid of cells, each made of two triangles, so rendering the entire terrain would require rendering 200,000,000 triangles! Even after reducing that number with frustum culling, we are left with too many triangles to render each frame and still have processing time left over for rendering anything else, or for performing any of the other logic necessary for a game.
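The triangle estimate above is simple arithmetic, and the Python sketch below spells it out. The function name is hypothetical. Treating 10 vertices per meter as 10 cells per meter is an approximation that ignores the one extra vertex on each edge.

```python
def full_res_triangles(side_meters, vertices_per_meter):
    """Approximate triangle count for a square terrain rendered at full resolution."""
    cells_per_side = side_meters * vertices_per_meter  # about one cell per vertex along an edge
    return 2 * cells_per_side ** 2                      # two triangles per square cell


print(full_res_triangles(1000, 10))  # 200000000
```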

Realistically, we do not need to render all of these triangles. We will get relatively more bang for our GPU buck by rendering the closest triangles at full resolution, and combining a number of far-away triangles into a single, larger triangle. This technique of dynamically tessellating the terrain mesh gives us a good tradeoff between rendering a high-detail mesh, and rendering the mesh quickly.

Our Terrain class will use both frustum culling and dynamic tessellation. For best performance, we are going to implement these techniques using the Hull and Domain shaders on the GPU. This does limit us to DX11-compliant graphics cards; to support older DX9 and DX10 cards, we would need to perform these operations on the CPU, which I may cover in the future, but we’ll ignore for right now (see Chapter 5 of Granberg’s book for a DX9 implementation of frustum culling on the CPU).

Our terrain class will not manage the actual vertices of the mesh itself; we’ll be creating these vertices using the GPU’s tessellation stages. Instead, we will maintain a vertex buffer of control points that define the rectangular patches of the terrain mesh. Four control points will define a quad patch, which we will subdivide using the tessellation stages into triangles, according to a level-of-detail calculation. Since the maximum subdivision factor supported by Direct3D 11 is 64, we will create one patch for each 64x64 chunk of our heightmap.
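The patch bookkeeping used later in Init() reduces to a few integer divisions, reproduced in the Python sketch below. The 2049 x 2049 size in the example is an assumed heightmap size (a multiple of 64, plus one), chosen so that every patch covers exactly 64 x 64 cells. The function name is hypothetical.

```python
CELLS_PER_PATCH = 64  # matches the D3D11 maximum tessellation factor


def patch_layout(heightmap_width, heightmap_height, cells_per_patch=CELLS_PER_PATCH):
    """Control-point grid dimensions and patch count for a heightmap."""
    vert_rows = (heightmap_height - 1) // cells_per_patch + 1
    vert_cols = (heightmap_width - 1) // cells_per_patch + 1
    num_vertices = vert_rows * vert_cols
    num_patches = (vert_rows - 1) * (vert_cols - 1)
    return vert_rows, vert_cols, num_vertices, num_patches
```

Under this assumption, a 2049 x 2049 heightmap needs only 33 x 33 = 1,089 control points and 1,024 patches, which is comfortably within the range of 16-bit indices.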

Since we are also doing our frustum culling in the hull shader, we will store the min/max y-coordinates of each patch in the patch's upper-left control point. Using these min/max y-coordinates and the positions of the four control points, we can generate a bounding box for the patch, which we can then test for intersection with the planes of the camera frustum.

Terrain Control Point Vertex Format

The vertex format for our terrain control point vertex buffer is as follows:

public struct TerrainCP {
    public Vector3 Pos;      // 12 bytes, offset 0
    public Vector2 Tex;      //  8 bytes, offset 12
    public Vector2 BoundsY;  //  8 bytes, offset 20: (minY, maxY) of the patch

    public TerrainCP(Vector3 pos, Vector2 tex, Vector2 boundsY) {
        Pos = pos;
        Tex = tex;
        BoundsY = boundsY;
    }

    public static readonly int Stride = Marshal.SizeOf(typeof(TerrainCP));
}

public static class InputLayoutDescriptions {
    public static readonly InputElement[] TerrainCP = {
        new InputElement("POSITION", 0, Format.R32G32B32_Float, 0, 0, InputClassification.PerVertexData, 0),
        new InputElement("TEXCOORD", 0, Format.R32G32_Float, InputElement.AppendAligned, 0, InputClassification.PerVertexData, 0),
        new InputElement("TEXCOORD", 1, Format.R32G32_Float, InputElement.AppendAligned, 0, InputClassification.PerVertexData, 0),
    };
}
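InputElement.AppendAligned places each element immediately after the previous one. The Python sketch below computes the resulting byte offsets and stride; the helper name is hypothetical and the sketch assumes float-only elements. The stride should agree with TerrainCP.Stride as reported by Marshal.SizeOf for this all-float struct.

```python
import struct

FLOAT_BYTES = 4


def append_aligned_offsets(component_counts):
    """Byte offset of each float element when each one directly follows the previous one."""
    offsets, offset = [], 0
    for count in component_counts:
        offsets.append(offset)
        offset += count * FLOAT_BYTES
    return offsets, offset  # the final offset is the vertex stride


# POSITION (float3), TEXCOORD0 (float2), TEXCOORD1 (float2)
offsets, stride = append_aligned_offsets([3, 2, 2])
assert stride == struct.calcsize("<7f")  # same size as a packed struct of 7 floats
```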

Our Terrain class is shown below, with its member variables. Because the Terrain class manages its own vertex and index buffers, as well as the ShaderResourceViews for its heightmap, diffuse map and blend map textures, we will subclass our DisposableClass base class, so that we can explicitly release the unmanaged SlimDX resources.

public class Terrain : DisposableClass {
    // Heightmap cells per patch edge (64 = the D3D11 maximum tessellation factor).
    private const int CellsPerPatch = 64;

    private Buffer _quadPatchVB;
    private Buffer _quadPatchIB;

    private ShaderResourceView _layerMapArraySRV;
    private ShaderResourceView _blendMapSRV;
    private ShaderResourceView _heightMapSRV;

    private InitInfo _info;
    private int _numPatchVertices;
    private int _numPatchQuadFaces;
    // number of rows of patch control point vertices
    private int _numPatchVertRows;
    // number of columns of patch control point vertices
    private int _numPatchVertCols;

    public Matrix World { get; set; }
    private Material _material;

    // computed Y bounds for each patch
    private List<Vector2> _patchBoundsY;
    private HeightMap _heightMap;
    private bool _disposed;

    // functions omitted
}

Our constructor and Dispose methods for the Terrain class are relatively simple. Our constructor sets the Terrain’s world matrix to the identity matrix, and initializes a default material that we will use to render the terrain, if the user does not specify one. Our dispose method releases all of the Terrain class’s unmanaged DirectX resources.

public Terrain() {
    World = Matrix.Identity;
    // Default material, used unless InitInfo.Material is supplied.
    _material = new Material {
        Ambient = Color.White,
        Diffuse = Color.White,
        Specular = new Color4(64.0f, 0, 0, 0),   // SlimDX Color4 is (a, r, g, b): specular power 64 in alpha
        Reflect = Color.Black
    };
}

protected override void Dispose(bool disposing) {
    if (!_disposed) {
        if (disposing) {
            // Release the unmanaged Direct3D resources owned by the terrain.
            Util.ReleaseCom(ref _quadPatchVB);
            Util.ReleaseCom(ref _quadPatchIB);
            Util.ReleaseCom(ref _layerMapArraySRV);
            Util.ReleaseCom(ref _blendMapSRV);
            Util.ReleaseCom(ref _heightMapSRV);
        }
        _disposed = true;
    }
    base.Dispose(disposing);
}

Initializing the Terrain

To actually initialize the Terrain class, we will create the Init() function. This function takes as a parameter an InitInfo structure, which instructs the Terrain class how to build the terrain. This method creates the terrain HeightMap, calculates the y-bounds of each patch, creates our vertex and index buffers, and loads the terrain textures. For now, this method only supports creating a terrain from predefined heightmap and blendmap images; next time we will look at how to create procedural textures to use instead. Note that we store the diffuse maps as a texture array, as this simplifies our shader code later.

public void Init(Device device, DeviceContext dc, InitInfo info) {
    _info = info;

    // One control point every CellsPerPatch samples, plus one for the far edge.
    _numPatchVertRows = ((_info.HeightMapHeight - 1) / CellsPerPatch) + 1;
    _numPatchVertCols = ((_info.HeightMapWidth - 1) / CellsPerPatch) + 1;
    _numPatchVertices = _numPatchVertRows * _numPatchVertCols;
    _numPatchQuadFaces = (_numPatchVertRows - 1) * (_numPatchVertCols - 1);

    if (_info.Material.HasValue) {
        _material = _info.Material.Value;
    }

    _heightMap = new HeightMap(_info.HeightMapWidth, _info.HeightMapHeight, _info.HeightScale);
    if (!string.IsNullOrEmpty(_info.HeightMapFilename)) {
        _heightMap.LoadHeightmap(_info.HeightMapFilename);
        _heightMap.Smooth();
    } else {
        throw new NotImplementedException("Procedural heightmaps not yet supported");
    }

    CalcAllPatchBoundsY();
    BuildQuadPatchVB(device);
    BuildQuadPatchIB(device);
    _heightMapSRV = _heightMap.BuildHeightmapSRV(device);

    // Missing layers fall back to a placeholder texture, so the array always has five slices.
    var layerFilenames = new List<string> {
        _info.LayerMapFilename0 ?? "textures/null.bmp",
        _info.LayerMapFilename1 ?? "textures/null.bmp",
        _info.LayerMapFilename2 ?? "textures/null.bmp",
        _info.LayerMapFilename3 ?? "textures/null.bmp",
        _info.LayerMapFilename4 ?? "textures/null.bmp"
    };
    _layerMapArraySRV = Util.CreateTexture2DArraySRV(device, dc, layerFilenames.ToArray(), Format.R8G8B8A8_UNorm);

    if (!string.IsNullOrEmpty(_info.BlendMapFilename)) {
        _blendMapSRV = ShaderResourceView.FromFile(device, _info.BlendMapFilename);
    } else {
        throw new NotImplementedException("Procedural blendmaps not yet supported");
    }
}

InitInfo Structure

The InitInfo structure allows us to specify the textures used to create the terrain, as well as parameters needed to initialize the heightmap. We can override the default terrain material if we desire by populating the Material field. Lastly, the CellSpacing field determines the distance between fully-tessellated vertices in the terrain mesh.

public struct InitInfo {
    // RAW heightmap image file
    public string HeightMapFilename;
    // Heightmap maximum height
    public float HeightScale;
    // Heightmap dimensions
    public int HeightMapWidth;
    public int HeightMapHeight;
    // terrain diffuse textures
    public string LayerMapFilename0;
    public string LayerMapFilename1;
    public string LayerMapFilename2;
    public string LayerMapFilename3;
    public string LayerMapFilename4;
    // Blend map which indicates which diffuse map is
    // applied to which portions of the terrain
    public string BlendMapFilename;
    // The distance between vertices in the generated mesh
    public float CellSpacing;
    // Optional override for the default terrain material
    public Material? Material;
}

Calculating the Y-Extents for Each Patch

To implement camera frustum culling, we need to calculate a bounding box for each patch in the Hull shader. We know the X and Z extents of the bounding box from the patch control points, but will still need the Y extents. We could sample the heightmap texture to determine the min/max y-coordinates for the patch in the Hull shader, but this would be inefficient, as we would be calculating this every frame, for every patch, when, assuming our terrain is static, these bounding Y-extents never change. Instead, we will calculate the Y-extents for each patch once, and cache them. Later, when we construct the patch vertex buffer, we will store these extents for each patch in the upper-left control point. The CalcAllPatchBoundsY() function simply loops through all of the patches in the terrain mesh, and then, for each patch, finds the minimum and maximum heightmap values.

private void CalcAllPatchBoundsY() {
    _patchBoundsY = new List<Vector2>(new Vector2[_numPatchQuadFaces]);
    // Visit each patch once; patches are numbered row by row.
    for (var i = 0; i < _numPatchVertRows - 1; i++) {
        for (var j = 0; j < _numPatchVertCols - 1; j++) {
            CalcPatchBoundsY(i, j);
        }
    }
}

private void CalcPatchBoundsY(int i, int j) {
    // Heightmap sample range covered by patch (i, j), including the shared edges.
    var x0 = j * CellsPerPatch;
    var x1 = (j + 1) * CellsPerPatch;
    var y0 = i * CellsPerPatch;
    var y1 = (i + 1) * CellsPerPatch;

    var minY = float.MaxValue;
    var maxY = float.MinValue;
    for (var y = y0; y <= y1; y++) {
        for (var x = x0; x <= x1; x++) {
            minY = Math.Min(minY, _heightMap[y, x]);
            maxY = Math.Max(maxY, _heightMap[y, x]);
        }
    }

    var patchID = i * (_numPatchVertCols - 1) + j;
    _patchBoundsY[patchID] = new Vector2(minY, maxY);
}
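The per-patch Y-extents can be sketched in Python as follows. The helper is hypothetical, and it assumes the heightmap dimensions are a multiple of cells_per_patch plus one, so every patch's sample range stays inside the map. The ranges are inclusive, so neighboring patches share their border samples and their bounding boxes leave no gaps.

```python
def patch_bounds_y(heights, width, vert_rows, vert_cols, cells_per_patch):
    """(minY, maxY) for each patch, in patch order i * (vert_cols - 1) + j."""
    bounds = []
    for i in range(vert_rows - 1):
        for j in range(vert_cols - 1):
            y0, x0 = i * cells_per_patch, j * cells_per_patch
            # Each patch covers cells_per_patch + 1 samples per side, inclusive.
            patch = [heights[y * width + x]
                     for y in range(y0, y0 + cells_per_patch + 1)
                     for x in range(x0, x0 + cells_per_patch + 1)]
            bounds.append((min(patch), max(patch)))
    return bounds
```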

Building the Patch Vertex Buffer

Once we have calculated the patch y-extents, we have all of the information necessary to create the patch vertex buffer. Most of this code is very similar to the code we used to create a grid vertex buffer in our GeometryGenerator class. One added step is that, after creating the patch vertices, we store the Y-extents in the upper-left control point of each patch, which lets us construct a bounding box for the patch in the hull shader. If we visualize the patch vertex buffer as a 2D array, the vertices in the last row and last column keep the default (0,0) value for their BoundsY members, since they are not the upper-left corner of any patch. After adding the Y-extents, we create the vertex buffer following our normal procedure.

private void BuildQuadPatchVB(Device device) {
    var patchVerts = new Vertex.TerrainCP[_numPatchVertices];
    var halfWidth = 0.5f * Width;
    var halfDepth = 0.5f * Depth;
    // World-space and texture-space size of a single patch.
    var patchWidth = Width / (_numPatchVertCols - 1);
    var patchDepth = Depth / (_numPatchVertRows - 1);
    var du = 1.0f / (_numPatchVertCols - 1);
    var dv = 1.0f / (_numPatchVertRows - 1);

    // Lay out the control points as a grid centered on the origin; row 0 is at +z.
    for (int i = 0; i < _numPatchVertRows; i++) {
        var z = halfDepth - i * patchDepth;
        for (int j = 0; j < _numPatchVertCols; j++) {
            var x = -halfWidth + j * patchWidth;
            var vertId = i * _numPatchVertCols + j;
            patchVerts[vertId] = new Vertex.TerrainCP(
                new Vector3(x, 0, z),
                new Vector2(j * du, i * dv),
                new Vector2()
            );
        }
    }

    // Store each patch's Y-extents in its upper-left control point.
    for (int i = 0; i < _numPatchVertRows - 1; i++) {
        for (int j = 0; j < _numPatchVertCols - 1; j++) {
            var patchID = i * (_numPatchVertCols - 1) + j;
            var vertID = i * _numPatchVertCols + j;
            patchVerts[vertID].BoundsY = _patchBoundsY[patchID];
        }
    }

    var vbd = new BufferDescription(
        Vertex.TerrainCP.Stride * patchVerts.Length,
        ResourceUsage.Immutable,
        BindFlags.VertexBuffer,
        CpuAccessFlags.None,
        ResourceOptionFlags.None,
        0
    );
    _quadPatchVB = new Buffer(device, new DataStream(patchVerts, false, false), vbd);
}

In the above function, we also made use of two read-only properties, Width and Depth, which return the world-space dimensions of the terrain mesh:

public float Width { get { return (_info.HeightMapWidth - 1)*_info.CellSpacing; } }

public float Depth { get { return (_info.HeightMapHeight - 1)*_info.CellSpacing; } }
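Putting Width, Depth and the patch counts together, the control-point grid built by BuildQuadPatchVB can be mirrored in a short Python sketch. The function is hypothetical, and its width and depth arguments play the role of the Width and Depth properties. The sketch makes the layout easy to check: row 0 sits at +z with v = 0, and only the upper-left corner of each patch carries that patch's BoundsY.

```python
def build_control_points(vert_rows, vert_cols, width, depth, bounds_y):
    """Row-major list of [pos, uv, boundsY] control points centered on the origin."""
    half_w, half_d = 0.5 * width, 0.5 * depth
    patch_w = width / (vert_cols - 1)
    patch_d = depth / (vert_rows - 1)
    du, dv = 1.0 / (vert_cols - 1), 1.0 / (vert_rows - 1)
    verts = []
    for i in range(vert_rows):
        z = half_d - i * patch_d
        for j in range(vert_cols):
            x = -half_w + j * patch_w
            verts.append([(x, 0.0, z), (j * du, i * dv), (0.0, 0.0)])
    # Copy each patch's (minY, maxY) into its upper-left control point.
    for i in range(vert_rows - 1):
        for j in range(vert_cols - 1):
            verts[i * vert_cols + j][2] = bounds_y[i * (vert_cols - 1) + j]
    return verts
```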

Constructing the Quad Patch Index Buffer

The process of creating the index buffer for the Terrain is also very similar to our earlier grid creation code. Here, however, each patch is a single quad described by four indices, rather than two triangles described by six.

private void BuildQuadPatchIB(Device device) {
    var indices = new List<int>();
    for (int i = 0; i < _numPatchVertRows - 1; i++) {
        // Stop one column short: the last control point in a row has no patch to its right.
        for (int j = 0; j < _numPatchVertCols - 1; j++) {
            // Upper-left, upper-right, lower-left, lower-right control points of patch (i, j).
            indices.Add(i * _numPatchVertCols + j);
            indices.Add(i * _numPatchVertCols + j + 1);
            indices.Add((i + 1) * _numPatchVertCols + j);
            indices.Add((i + 1) * _numPatchVertCols + j + 1);
        }
    }

    // 16-bit indices (bound as R16_UInt): requires fewer than 65,536 control points.
    var ibd = new BufferDescription(
        sizeof(short) * indices.Count,
        ResourceUsage.Immutable,
        BindFlags.IndexBuffer,
        CpuAccessFlags.None,
        ResourceOptionFlags.None,
        0
    );
    _quadPatchIB = new Buffer(
        device,
        new DataStream(indices.Select(i => (short)i).ToArray(), false, false),
        ibd
    );
}
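A quick way to sanity-check the patch index buffer is to generate it in Python and confirm that every patch gets exactly four in-range indices. The function name is hypothetical. The inner loop must stop one column short. Otherwise the j + 1 index in the last column would wrap around to the first control point of the next row.

```python
def build_quad_patch_indices(vert_rows, vert_cols):
    """Four indices per patch: upper-left, upper-right, lower-left, lower-right."""
    indices = []
    for i in range(vert_rows - 1):
        for j in range(vert_cols - 1):  # j + 1 must stay within row i
            top = i * vert_cols + j
            bottom = (i + 1) * vert_cols + j
            indices += [top, top + 1, bottom, bottom + 1]
    return indices
```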

Creating the Terrain Textures

We will use the previously implemented HeightMap.BuildHeightmapSRV() function to create the heightmap texture that we will bind to our shader. The blend map will be created using the simple ShaderResourceView.FromFile() method, which we have used before, while the diffuse maps will be loaded as a texture array, using our Util.CreateTexture2DArraySRV() function.

Drawing the Terrain

To draw the terrain, we will create a new effect shader, Terrain.fx. This effect will be specialized to draw our Terrain. We will start by examining the constant data for the shader effect.

cbuffer cbPerFrame
{
    DirectionalLight gDirLights[3];
    float3 gEyePosW;

    float  gFogStart;
    float  gFogRange;
    float4 gFogColor;

    // When distance is minimum, the tessellation is maximum.
    // When distance is maximum, the tessellation is minimum.
    float gMinDist;
    float gMaxDist;

    // Exponents for power of 2 tessellation. The tessellation
    // range is [2^(gMinTess), 2^(gMaxTess)]. Since the maximum
    // tessellation is 64, this means gMaxTess can be at most 6
    // since 2^6 = 64.
    float gMinTess;
    float gMaxTess;

    // Heightmap texel spacing in [0,1] texture space, and world-space cell spacing.
    float gTexelCellSpaceU;
    float gTexelCellSpaceV;
    float gWorldCellSpace;

    // Tiling factor for the diffuse layer texture coordinates.
    float2 gTexScale = 66.0f;

    float4 gWorldFrustumPlanes[6];
};

cbuffer cbPerObject
{
    // Terrain coordinate specified directly
    // at center of world space.
    float4x4 gViewProj;
    Material gMaterial;
};

// Nonnumeric values cannot be added to a cbuffer.
Texture2DArray gLayerMapArray;
Texture2D gBlendMap;
Texture2D gHeightMap;

The first portion of our per-frame constant buffer should look familiar: the directional lights and camera position we have used repeatedly in our other effects. Next come the variables that control the distance fog effect, which we covered earlier. Following these, we have the parameters that control the level-of-detail tessellation performed in the hull shader, similar to those we used for displacement mapping. After that, gTexelCellSpaceU and gTexelCellSpaceV give the spacing between adjacent heightmap texels in normalized [0,1] texture space, and gWorldCellSpace gives the world-space distance between adjacent vertices; we will use these parameters to construct normal vectors in the pixel shader. gTexScale is the tiling factor applied to the texture coordinates used for the diffuse maps. The last element of our per-frame constant buffer, gWorldFrustumPlanes, is an array of planes defining the camera frustum in world space, which we need for frustum culling. The camera view-projection matrix, material, and texture variables should be self-explanatory.

Terrain Vertex Shader

The vertex shader for our Terrain effect is not quite a simple pass-through shader, although it is very close. The only modification we will make to the input quad patch control point is to replace the 0 y-coordinate of the position with the elevation sampled from the heightmap texture. Be sure to only sample the red channel of the heightmap texture; remember that we specified the heightmap texture to be R16_Float format. Note that we are making the assumption that the terrain is defined in world space, so there is no world matrix to offset the input position.

SamplerState samHeightmap
{
    Filter = MIN_MAG_LINEAR_MIP_POINT;
    AddressU = CLAMP;
    AddressV = CLAMP;
};

struct VertexIn
{
    float3 PosL    : POSITION;
    float2 Tex     : TEXCOORD0;
    float2 BoundsY : TEXCOORD1;
};

struct VertexOut
{
    float3 PosW    : POSITION;
    float2 Tex     : TEXCOORD0;
    float2 BoundsY : TEXCOORD1;
};

VertexOut VS(VertexIn vin)
{
    VertexOut vout;

    // Terrain specified directly in world space.
    vout.PosW = vin.PosL;

    // Displace the patch corners to world space. This is to make
    // the eye to patch distance calculation more accurate.
    vout.PosW.y = gHeightMap.SampleLevel( samHeightmap, vin.Tex, 0 ).r;

    // Output vertex attributes to next stage.
    vout.Tex     = vin.Tex;
    vout.BoundsY = vin.BoundsY;

    return vout;
}

Terrain Hull Shader and Tessellation

Our hull shader proper is just a simple pass-through shader; we do not make any changes to the position or texture coordinates of the patch control points. We do not pass through the y-coordinate bounds of the control points, as these are only used by the hull shader constant function. We instruct the tessellation stage that we will be using the quad domain, and that we will use the fractional_even partitioning scheme. You may want to read this previous post, if you are unfamiliar with what that entails.

struct HullOut
{
    float3 PosW : POSITION;
    float2 Tex  : TEXCOORD0;
};

[domain("quad")]
[partitioning("fractional_even")]
[outputtopology("triangle_cw")]
[outputcontrolpoints(4)]
[patchconstantfunc("ConstantHS")]
[maxtessfactor(64.0f)]
HullOut HS(InputPatch<VertexOut, 4> p,
           uint i : SV_OutputControlPointID,
           uint patchId : SV_PrimitiveID)
{
    HullOut hout;

    // Pass through shader.
    hout.PosW = p[i].PosW;
    hout.Tex  = p[i].Tex;

    return hout;
}

Hull Patch Constant Function

Remember that the hull shader's patch constant function is evaluated once per patch. It is here that we perform our frustum culling tests and determine the dynamic tessellation factors for the patch, based on the distance of the patch from the camera position. For the frustum test, we construct an axis-aligned bounding box for the patch in center/extents form. We take the X and Z extents from the patch control point positions, and the Y-extents from the values previously calculated in the Terrain class and stored in the first patch control point.

struct PatchTess
{
    float EdgeTess[4]   : SV_TessFactor;
    float InsideTess[2] : SV_InsideTessFactor;
};

PatchTess ConstantHS(InputPatch<VertexOut, 4> patch, uint patchID : SV_PrimitiveID)
{
    PatchTess pt;

    //
    // Frustum cull
    //

    // We store the patch BoundsY in the first control point.
    float minY = patch[0].BoundsY.x;
    float maxY = patch[0].BoundsY.y;

    // Build axis-aligned bounding box. patch[2] is lower-left corner
    // and patch[1] is upper-right corner.
    float3 vMin = float3(patch[2].PosW.x, minY, patch[2].PosW.z);
    float3 vMax = float3(patch[1].PosW.x, maxY, patch[1].PosW.z);

    float3 boxCenter  = 0.5f*(vMin + vMax);
    float3 boxExtents = 0.5f*(vMax - vMin);

    if( AabbOutsideFrustumTest(boxCenter, boxExtents, gWorldFrustumPlanes) )
    {
        // Tessellation factors of 0 cull the patch entirely.
        pt.EdgeTess[0] = 0.0f;
        pt.EdgeTess[1] = 0.0f;
        pt.EdgeTess[2] = 0.0f;
        pt.EdgeTess[3] = 0.0f;
        pt.InsideTess[0] = 0.0f;
        pt.InsideTess[1] = 0.0f;
        return pt;
    }
    //
    // Do normal tessellation based on distance.
    //
    else
    {
        // It is important to do the tess factor calculation based on the
        // edge properties so that edges shared by more than one patch will
        // have the same tessellation factor. Otherwise, gaps can appear.

        // Compute midpoint on edges, and patch center
        float3 e0 = 0.5f*(patch[0].PosW + patch[2].PosW);
        float3 e1 = 0.5f*(patch[0].PosW + patch[1].PosW);
        float3 e2 = 0.5f*(patch[1].PosW + patch[3].PosW);
        float3 e3 = 0.5f*(patch[2].PosW + patch[3].PosW);
        float3 c  = 0.25f*(patch[0].PosW + patch[1].PosW + patch[2].PosW + patch[3].PosW);

        pt.EdgeTess[0] = CalcTessFactor(e0);
        pt.EdgeTess[1] = CalcTessFactor(e1);
        pt.EdgeTess[2] = CalcTessFactor(e2);
        pt.EdgeTess[3] = CalcTessFactor(e3);

        pt.InsideTess[0] = CalcTessFactor(c);
        pt.InsideTess[1] = pt.InsideTess[0];

        return pt;
    }
}

If the patch bounding box lies completely behind any of the frustum planes, we cull the patch by setting its tessellation factors to 0; this causes the tessellation stage to discard the patch and create no vertices for it.

// Returns true if the box is completely behind (in the negative half space of) the plane.
bool AabbBehindPlaneTest(float3 center, float3 extents, float4 plane)
{
    float3 n = abs(plane.xyz);

    // Projected "radius" of the box onto the plane normal; never negative.
    float r = dot(extents, n);

    // signed distance from center point to plane.
    float s = dot( float4(center, 1.0f), plane );

    // If the center point of the box is more than r behind the
    // plane (in which case s is negative since it is behind the plane),
    // then the box is completely in the negative half space of the plane.
    return (s + r) < 0.0f;
}

// Returns true if the box is completely outside the frustum.
bool AabbOutsideFrustumTest(float3 center, float3 extents, float4 frustumPlanes[6])
{
    for(int i = 0; i < 6; ++i)
    {
        // If the box is completely behind any of the frustum planes
        // then it is outside the frustum.
        if( AabbBehindPlaneTest(center, extents, frustumPlanes[i]) )
        {
            return true;
        }
    }
    return false;
}
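The plane test is easy to check on the CPU. Here is a Python transcription of the two HLSL functions, with the names adapted. Planes are (a, b, c, d) tuples with the frustum normals pointing inward, so that "behind" means "outside", which is how the HLSL code treats them. The example frustum is a toy: just the cube -1 <= x, y, z <= 1.

```python
def aabb_behind_plane(center, extents, plane):
    """True if the box lies entirely in the plane's negative half-space."""
    a, b, c, d = plane
    # Projected "radius" of the box onto the plane normal; never negative.
    r = extents[0] * abs(a) + extents[1] * abs(b) + extents[2] * abs(c)
    # Signed distance (scaled by the normal's length) from the box center to the plane.
    s = center[0] * a + center[1] * b + center[2] * c + d
    return s + r < 0.0


def aabb_outside_frustum(center, extents, planes):
    """True if the box is completely behind at least one of the planes."""
    return any(aabb_behind_plane(center, extents, p) for p in planes)


# Toy frustum: the cube -1 <= x, y, z <= 1 as six inward-facing planes.
cube = [(1, 0, 0, 1), (-1, 0, 0, 1), (0, 1, 0, 1), (0, -1, 0, 1), (0, 0, 1, 1), (0, 0, -1, 1)]
```

The test is conservative. A box that lies outside the frustum near one of its corners, without being fully behind any single plane, still passes. That only costs a few extra triangles.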

We calculate the tessellation factors for non-culled patches from the distance between the camera and the midpoint of each patch edge (for the edge tessellation factors) or the center of the patch (for the interior tessellation factors). We then normalize this distance to the range given by our gMinDist and gMaxDist constants, clamping the result to [0,1] with the HLSL saturate function. Finally, we interpolate between the maximum and minimum tessellation exponents using the normalized distance, and use the result as the exponent of a power of two. Because the maximum tessellation factor in DirectX 11 is 64 (2^6), this limits gMinTess and gMaxTess to the range [0,6]. At the low end, 2^0 = 1, although fractional_even partitioning clamps factors below 2 up to 2. In exchange, we get a nice transition between levels of detail, since each whole step in the exponent doubles the number of subdivisions the tessellation stage produces.

```hlsl
float CalcTessFactor(float3 p)
{
    float d = distance(p, gEyePosW);

    // max norm in xz plane (useful to see detail levels from a bird's eye).
    //float d = max( abs(p.x-gEyePosW.x), abs(p.z-gEyePosW.z) );

    float s = saturate((d - gMinDist) / (gMaxDist - gMinDist));

    return pow(2, lerp(gMaxTess, gMinTess, s));
}
```
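A quick way to check the behavior of CalcTessFactor is to port it to the CPU. The C++ sketch below takes the eye distance directly; the TessParams struct and the helper names are hypothetical, and its default values are the ones Terrain.Draw() passes to the effect later in this post (gMinDist = 20, gMaxDist = 500, gMinTess = 0, gMaxTess = 6).

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>

// Hypothetical mirror of the gMinDist/gMaxDist/gMinTess/gMaxTess constants,
// defaulted to the values Terrain.Draw() sets.
struct TessParams {
    float minDist = 20.0f;
    float maxDist = 500.0f;
    float minTess = 0.0f;  // exponent for far patches: 2^0 = 1
    float maxTess = 6.0f;  // exponent for near patches: 2^6 = 64
};

// Equivalent of HLSL saturate(): clamp to [0, 1].
float Saturate(float v) { return std::min(std::max(v, 0.0f), 1.0f); }

// Equivalent of HLSL lerp(a, b, t) = a + t * (b - a).
float Lerp(float a, float b, float t) { return a + t * (b - a); }

// CPU version of CalcTessFactor, taking the eye distance d directly.
float CalcTessFactor(float d, const TessParams& p) {
    assert(p.maxDist > p.minDist);  // otherwise the normalization divides by zero
    // 0 at minDist or closer, 1 at maxDist or farther.
    float s = Saturate((d - p.minDist) / (p.maxDist - p.minDist));
    // Interpolate the exponent, then raise 2 to it.
    return std::pow(2.0f, Lerp(p.maxTess, p.minTess, s));
}
```

With these values, anything within 20 units of the camera is tessellated at the maximum factor of 64, anything beyond 500 units gets a factor of 1, and a point 260 units away (halfway through the range) gets an exponent of 3, for a factor of 8.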

Terrain Domain Shader

After the tessellation stage, each generated vertex is submitted to the domain shader. Our domain shader uses bilinear interpolation of the patch's four control points to compute the generated vertex's position and texture coordinates. We then sample the heightmap texture to determine the vertex's y-coordinate (height), and project the resulting world position into homogeneous clip space using the camera's view/projection matrix. We also compute a tiled texture coordinate, which the pixel shader uses to sample the diffuse maps. We must keep the original texture coordinates in order to sample the blend map, which spans the whole terrain, but adding this second, scaled set of texture coordinates and sampling with a WRAP address mode lets the diffuse textures repeat many times across the terrain mesh. This preserves high texture detail without requiring prohibitively large textures.

Figure: If we use the original texture coordinates for the diffuse textures, the texture becomes very blurry due to magnification.
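The per-vertex work described above can be sketched on the CPU in C++. This is an illustration under stated assumptions, not the effect's actual code: the corners are assumed to be ordered 0 = (u0, v0), 1 = (u1, v0), 2 = (u0, v1), 3 = (u1, v1), the sampleHeight callback is a hypothetical stand-in for the heightmap lookup, texScale is a hypothetical name for the tiling factor, and the projection into clip space is omitted.

```cpp
#include <array>
#include <cassert>
#include <functional>

struct Float2 { float u, v; };
struct Float3 { float x, y, z; };

// Hypothetical per-vertex output of the sketch.
struct DomainOutSketch {
    Float3 posW;      // world position (projection to clip space omitted)
    Float2 tex;       // original UVs: used for the heightmap and blend map
    Float2 tiledTex;  // scaled UVs: used for the WRAP-sampled diffuse layers
};

float Lerp(float a, float b, float t) { return a + t * (b - a); }

// Bilinear interpolation of four corner values; corners are assumed to be
// ordered 0 = (u0, v0), 1 = (u1, v0), 2 = (u0, v1), 3 = (u1, v1).
float Bilerp(const std::array<float, 4>& c, float u, float v) {
    return Lerp(Lerp(c[0], c[1], u), Lerp(c[2], c[3], u), v);
}

DomainOutSketch EvaluatePatchVertex(const std::array<Float3, 4>& pos,
                                    const std::array<Float2, 4>& tex,
                                    float u, float v, float texScale,
                                    const std::function<float(Float2)>& sampleHeight) {
    assert(sampleHeight);
    DomainOutSketch out{};
    out.posW.x = Bilerp({pos[0].x, pos[1].x, pos[2].x, pos[3].x}, u, v);
    out.posW.z = Bilerp({pos[0].z, pos[1].z, pos[2].z, pos[3].z}, u, v);
    out.tex.u = Bilerp({tex[0].u, tex[1].u, tex[2].u, tex[3].u}, u, v);
    out.tex.v = Bilerp({tex[0].v, tex[1].v, tex[2].v, tex[3].v}, u, v);
    // The height comes from the heightmap, not from the control points.
    out.posW.y = sampleHeight(out.tex);
    // With WRAP addressing, the diffuse maps repeat texScale times across [0, 1].
    out.tiledTex = {out.tex.u * texScale, out.tex.v * texScale};
    return out;
}
```

For example, evaluating a 10 x 10 patch at (u, v) = (0.5, 0.25) with a tiling factor of 50 gives original UVs of (0.5, 0.25) for the blend map but tiled UVs of (25, 12.5) for the diffuse layers.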

Terrain Pixel Shader

Finally, we’ve reached the pixel shader. The pixel shader for our terrain effect has much in common with the pixel shaders we used previously for our other effects (Basic, NormalMap, DisplacementMap), since it uses the same Phong lighting model and fog technique. We will need to calculate the texture color differently, since we are using multi-texturing rather than a single texture. We will also need to calculate the normals in the pixel shader, since we did not calculate per-vertex normals in the domain shader. Luna notes that, because of the dynamic tessellation, he could not achieve acceptable results by calculating the normal in the domain shader: the tessellated vertex positions were not stable, so the normals changed from frame to frame and produced artifacts.

To calculate the pixel normal, we use central differences to estimate a tangent basis. This requires sampling the heightmap above, below, to the left of, and to the right of the pixel. This is where the gTexelCellSpaceU and gTexelCellSpaceV shader constants come in: they hold the size of a single heightmap texel in texture coordinates (one over the heightmap's width and height, respectively). Each height difference spans two texels, which corresponds to a world-space run of 2 × gWorldCellSpace, the spacing between adjacent heightmap samples. Once we have estimated the tangent and bitangent using central differences, we compute the normal as the cross product of these two vectors.
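The central-difference estimate is easy to test outside the shader. This C++ sketch follows the same steps as the pixel shader; heightAt is a hypothetical callback standing in for gHeightMap.SampleLevel, and, as the shader's tangent and bitangent imply, increasing u is assumed to run along +x and increasing v along -z in world space.

```cpp
#include <cassert>
#include <cmath>
#include <functional>

struct Float3 { float x, y, z; };

Float3 Normalize(const Float3& v) {
    float len = std::sqrt(v.x * v.x + v.y * v.y + v.z * v.z);
    assert(len > 0.0f);
    return {v.x / len, v.y / len, v.z / len};
}

Float3 Cross(const Float3& a, const Float3& b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Central-difference normal at texture coordinate (u, v).
// heightAt(u, v) is a hypothetical stand-in for gHeightMap.SampleLevel(...).r;
// texelU/texelV play the role of gTexelCellSpaceU/V and cellSpacing of gWorldCellSpace.
Float3 EstimateNormal(const std::function<float(float, float)>& heightAt,
                      float u, float v, float texelU, float texelV, float cellSpacing) {
    float leftY   = heightAt(u - texelU, v);
    float rightY  = heightAt(u + texelU, v);
    float bottomY = heightAt(u, v + texelV);
    float topY    = heightAt(u, v - texelV);

    // Rise over a run of two cells along +x (u) and along -z (v).
    Float3 tangent = Normalize({2.0f * cellSpacing, rightY - leftY, 0.0f});
    Float3 bitan   = Normalize({0.0f, bottomY - topY, -2.0f * cellSpacing});

    // Renormalize: the tangent and bitangent are not generally perpendicular.
    return Normalize(Cross(tangent, bitan));
}
```

On a flat heightmap the result is (0, 1, 0); on a slope that rises toward +x it tilts toward -x, as expected. The final normalize matters because the cross product of two unit vectors that are not perpendicular is shorter than unit length.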

To texture the pixel, we sample each of the diffuse maps in our diffuse map array using the tiled texture coordinate created by the domain shader. Next, we sample the blend map and linearly interpolate between the diffuse colors according to the color channels of the blend map sample. With this method, a four-channel blend map lets us use five diffuse textures: the base texture shows wherever all four channels are zero, and each channel (in r, g, b, a order) blends its own texture over the result of the previous ones. If more diffuse textures were required, we could use multiple blend maps, or subdivide the value range of each blend map channel.
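Here is a minimal C++ sketch of the blending step, mirroring the chain of lerp calls in the pixel shader; the Color struct and the BlendLayers name are hypothetical.

```cpp
#include <array>
#include <cassert>

struct Color { float r, g, b, a; };

// Blend weights come from a normalized texture, so they lie in [0, 1].
bool InUnitRange(float w) { return w >= 0.0f && w <= 1.0f; }

// Per-channel HLSL-style lerp: x + t * (y - x).
Color Lerp(const Color& x, const Color& y, float t) {
    return {x.r + t * (y.r - x.r), x.g + t * (y.g - x.g),
            x.b + t * (y.b - x.b), x.a + t * (y.a - x.a)};
}

// layers[0] is the base texture color; the blend map channels r, g, b, a
// control layers 1 through 4, applied in that order.
Color BlendLayers(const std::array<Color, 5>& layers, const Color& blend) {
    assert(InUnitRange(blend.r) && InUnitRange(blend.g) &&
           InUnitRange(blend.b) && InUnitRange(blend.a));
    Color c = layers[0];
    c = Lerp(c, layers[1], blend.r);
    c = Lerp(c, layers[2], blend.g);
    c = Lerp(c, layers[3], blend.b);
    c = Lerp(c, layers[4], blend.a);
    return c;
}
```

Because the lerps are applied in sequence, later channels override earlier ones: a blend map texel of (1, 1, 0, 0) shows only layer 2, and an alpha of 1 hides every layer beneath layer 4.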

```hlsl
SamplerState samLinear
{
    Filter = MIN_MAG_MIP_LINEAR;
    AddressU = WRAP;
    AddressV = WRAP;
};

float4 PS(DomainOut pin,
          uniform int gLightCount,
          uniform bool gFogEnabled) : SV_Target
{
    //
    // Estimate normal and tangent using central differences.
    //
    float2 leftTex   = pin.Tex + float2(-gTexelCellSpaceU, 0.0f);
    float2 rightTex  = pin.Tex + float2(gTexelCellSpaceU, 0.0f);
    float2 bottomTex = pin.Tex + float2(0.0f, gTexelCellSpaceV);
    float2 topTex    = pin.Tex + float2(0.0f, -gTexelCellSpaceV);

    float leftY   = gHeightMap.SampleLevel(samHeightmap, leftTex, 0).r;
    float rightY  = gHeightMap.SampleLevel(samHeightmap, rightTex, 0).r;
    float bottomY = gHeightMap.SampleLevel(samHeightmap, bottomTex, 0).r;
    float topY    = gHeightMap.SampleLevel(samHeightmap, topTex, 0).r;

    float3 tangent = normalize(float3(2.0f*gWorldCellSpace, rightY - leftY, 0.0f));
    float3 bitan   = normalize(float3(0.0f, bottomY - topY, -2.0f*gWorldCellSpace));

    // The tangent and bitangent are not generally perpendicular, so their
    // cross product can be shorter than unit length; renormalize it.
    float3 normalW = normalize(cross(tangent, bitan));

    // The toEye vector is used in lighting.
    float3 toEye = gEyePosW - pin.PosW;

    // Cache the distance to the eye from this surface point.
    float distToEye = length(toEye);

    // Normalize.
    toEye /= distToEye;

    //
    // Texturing
    //

    // Sample layers in texture array.
    float4 c0 = gLayerMapArray.Sample(samLinear, float3(pin.TiledTex, 0.0f));
    float4 c1 = gLayerMapArray.Sample(samLinear, float3(pin.TiledTex, 1.0f));
    float4 c2 = gLayerMapArray.Sample(samLinear, float3(pin.TiledTex, 2.0f));
    float4 c3 = gLayerMapArray.Sample(samLinear, float3(pin.TiledTex, 3.0f));
    float4 c4 = gLayerMapArray.Sample(samLinear, float3(pin.TiledTex, 4.0f));

    // Sample the blend map.
    float4 t = gBlendMap.Sample(samLinear, pin.Tex);

    // Blend the layers on top of each other.
    float4 texColor = c0;
    texColor = lerp(texColor, c1, t.r);
    texColor = lerp(texColor, c2, t.g);
    texColor = lerp(texColor, c3, t.b);
    texColor = lerp(texColor, c4, t.a);

    //
    // Lighting.
    //
    float4 litColor = texColor;
    if (gLightCount > 0)
    {
        // Start with a sum of zero.
        float4 ambient = float4(0.0f, 0.0f, 0.0f, 0.0f);
        float4 diffuse = float4(0.0f, 0.0f, 0.0f, 0.0f);
        float4 spec    = float4(0.0f, 0.0f, 0.0f, 0.0f);

        // Sum the light contribution from each light source.
        [unroll]
        for (int i = 0; i < gLightCount; ++i)
        {
            float4 A, D, S;
            ComputeDirectionalLight(gMaterial, gDirLights[i], normalW, toEye,
                                    A, D, S);

            ambient += A;
            diffuse += D;
            spec    += S;
        }

        litColor = texColor*(ambient + diffuse) + spec;
    }

    //
    // Fogging
    //
    if (gFogEnabled)
    {
        float fogLerp = saturate((distToEye - gFogStart) / gFogRange);

        // Blend the fog color and the lit color.
        litColor = lerp(litColor, gFogColor, fogLerp);
    }

    return litColor;
}
```

We will implement six techniques for our Terrain effect: one to three lights, each with or without fog. We will also need to write a C# wrapper class for this effect; since it is not much different from the effect wrappers we have previously defined, I will omit the listing here and refer you to the source on GitHub instead.

Terrain.Draw()

With our shader effect and C# wrapper complete, we can finally implement the drawing code for our Terrain class. Because the Terrain shader is specialized for the Terrain class, we will assume that the class manages its own drawing state, much as our Sky class did for rendering skyboxes. Most of the Draw function is devoted to putting the device context into the correct state and setting all of the Terrain effect's shader constants before submitting the quad patch vertex buffer to the GPU for drawing. Note that we need to change the DeviceContext's PrimitiveTopology to PatchListWith4ControlPoints in order to submit our quad patches correctly, and that we need to set the InputLayout to our TerrainCP layout. After drawing, we unbind the hull and domain shaders so that tessellation does not remain enabled for subsequent draw calls.

```csharp
public void Draw(DeviceContext dc, Camera.CameraBase cam, DirectionalLight[] lights) {
    dc.InputAssembler.PrimitiveTopology = PrimitiveTopology.PatchListWith4ControlPoints;
    dc.InputAssembler.InputLayout = InputLayouts.TerrainCP;

    var stride = Vertex.TerrainCP.Stride;
    const int Offset = 0;

    dc.InputAssembler.SetVertexBuffers(0, new VertexBufferBinding(_quadPatchVB, stride, Offset));
    dc.InputAssembler.SetIndexBuffer(_quadPatchIB, Format.R16_UInt, 0);

    var viewProj = cam.ViewProj;
    var planes = cam.FrustumPlanes;

    Effects.TerrainFX.SetViewProj(viewProj);
    Effects.TerrainFX.SetEyePosW(cam.Position);
    Effects.TerrainFX.SetDirLights(lights);
    Effects.TerrainFX.SetFogColor(Color.Silver);
    Effects.TerrainFX.SetFogStart(15.0f);
    Effects.TerrainFX.SetFogRange(175.0f);
    Effects.TerrainFX.SetMinDist(20.0f);
    Effects.TerrainFX.SetMaxDist(500.0f);
    Effects.TerrainFX.SetMinTess(0.0f);
    Effects.TerrainFX.SetMaxTess(6.0f);
    Effects.TerrainFX.SetTexelCellSpaceU(1.0f/_info.HeightMapWidth);
    Effects.TerrainFX.SetTexelCellSpaceV(1.0f/_info.HeightMapHeight);
    Effects.TerrainFX.SetWorldCellSpace(_info.CellSpacing);
    Effects.TerrainFX.SetWorldFrustumPlanes(planes);
    Effects.TerrainFX.SetLayerMapArray(_layerMapArraySRV);
    Effects.TerrainFX.SetBlendMap(_blendMapSRV);
    Effects.TerrainFX.SetHeightMap(_heightMapSRV);
    Effects.TerrainFX.SetMaterial(_material);

    var tech = Effects.TerrainFX.Light1Tech;
    for (int p = 0; p < tech.Description.PassCount; p++) {
        var pass = tech.GetPassByIndex(p);
        pass.Apply(dc);
        // Each quad patch consumes four indices (one per control point).
        dc.DrawIndexed(_numPatchQuadFaces * 4, 0, 0);
    }

    // Unbind the tessellation stages so they stay disabled for later draw calls.
    dc.HullShader.Set(null);
    dc.DomainShader.Set(null);
}
```
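The index count passed to DrawIndexed follows from the PatchListWith4ControlPoints topology: every patch consumes exactly four indices, so _numPatchQuadFaces patches need _numPatchQuadFaces * 4 indices. The C++ sketch below shows one way such an index buffer could be laid out for a grid of control points. It is an illustration only: the code that builds _quadPatchIB is not shown in this section, so the function name, the row-by-row storage, and the corner order (which must agree with whatever order the domain shader assumes) are all assumptions. The 16-bit indices match the Format.R16_UInt used in Draw(), which limits the grid to at most 65,536 control points.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical builder for a patch-list index buffer (4 indices per patch)
// over a grid of numRows x numCols control points stored row by row.
// Assumed corner order: top-left, top-right, bottom-left, bottom-right.
std::vector<std::uint16_t> BuildQuadPatchIndices(int numRows, int numCols) {
    assert(numRows >= 2 && numCols >= 2);
    // 16-bit indices (R16_UInt) can address at most 65,536 control points.
    assert(static_cast<long long>(numRows) * numCols <= 65536);

    std::vector<std::uint16_t> indices;
    indices.reserve(static_cast<std::size_t>(numRows - 1) * (numCols - 1) * 4);
    for (int i = 0; i < numRows - 1; ++i) {
        for (int j = 0; j < numCols - 1; ++j) {
            indices.push_back(static_cast<std::uint16_t>(i * numCols + j));
            indices.push_back(static_cast<std::uint16_t>(i * numCols + j + 1));
            indices.push_back(static_cast<std::uint16_t>((i + 1) * numCols + j));
            indices.push_back(static_cast<std::uint16_t>((i + 1) * numCols + j + 1));
        }
    }
    return indices;
}
```

For a 3 x 3 grid of control points this produces four patches and sixteen indices, so the matching DrawIndexed call would use an index count of 4 * 4 = 16.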

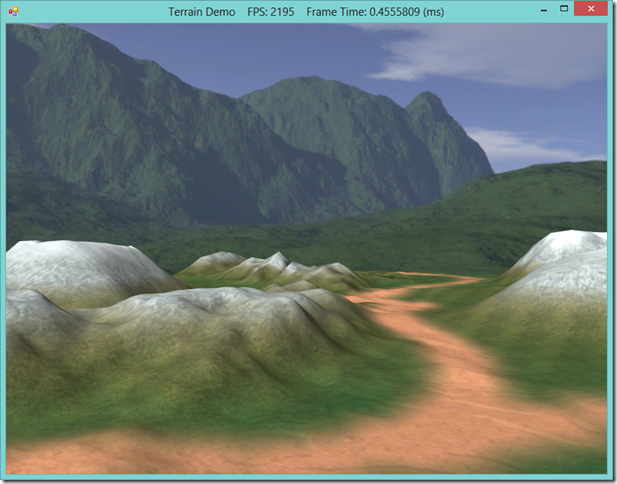

Final Results:

Next Time…

We’ll continue working with terrain, this time exploring some procedural techniques to generate height and blend maps, which will allow us to create random terrains. We will lean heavily on Chapter 4 of Carl Granberg’s Programming an RTS Game with Direct3D and the Perlin Noise-based solution presented there, and possibly some other techniques, if I have time to research and implement them.

For a one pixel sized heightmap your code will run into a division by zero exception.

Good catch. The error handling in these examples is pretty rudimentary; I've been focusing more on covering the material than hardening the code against bad input, for the moment.